2022 was a banner year for AI, with breakthroughs such as DALL-E 2, ChatGPT, and Stable Diffusion. The momentum in AI development is going to keep accelerating in 2023.

As companies continue to invest billions of dollars in AI, it is more important than ever to adopt responsible AI to minimize risks. Establishing a responsible AI framework is key to addressing AI regulations, compliance, ethics, and fairness, and to building trust into AI.

In 2022, we made major strides on the Fiddler AI Observability platform to help customers accelerate their MLOps and build toward responsible AI:

- Delivered giga-level scalability to support complex models that require larger training datasets

- Upgraded our user experience to be seamless and intuitive

- Launched our patent-pending cluster-based algorithm to accurately monitor natural language processing and computer vision models

- Became HIPAA compliant to help healthcare, medical, and other agencies subject to HIPAA accelerate their AI initiatives

- Announced availability on AWS GovCloud to help US government agencies build their responsible AI frameworks

To welcome 2023, we are thrilled to announce further major updates to the Fiddler AI Observability platform. These updates continue our mission of helping more companies reap business value from AI by adopting model monitoring, so they can deploy more ML models and forge a path to responsible AI.

Free trial now available

Through our 14-day free trial beta, we are giving more Machine Learning (ML) and Data Science (DS) leaders and practitioners access to the Fiddler MPM platform to leverage the value of model monitoring, analytics, and explainable AI. Trial users can get started in minutes with our quick start guides, product tour, and ‘how-to’ videos. In the trial environment, users can experience a variety of use cases, from customer churn to lending approvals to fraud detection. Users can gain rich insights from local- and global-level explanations, and understand model behaviors using our surrogate models.

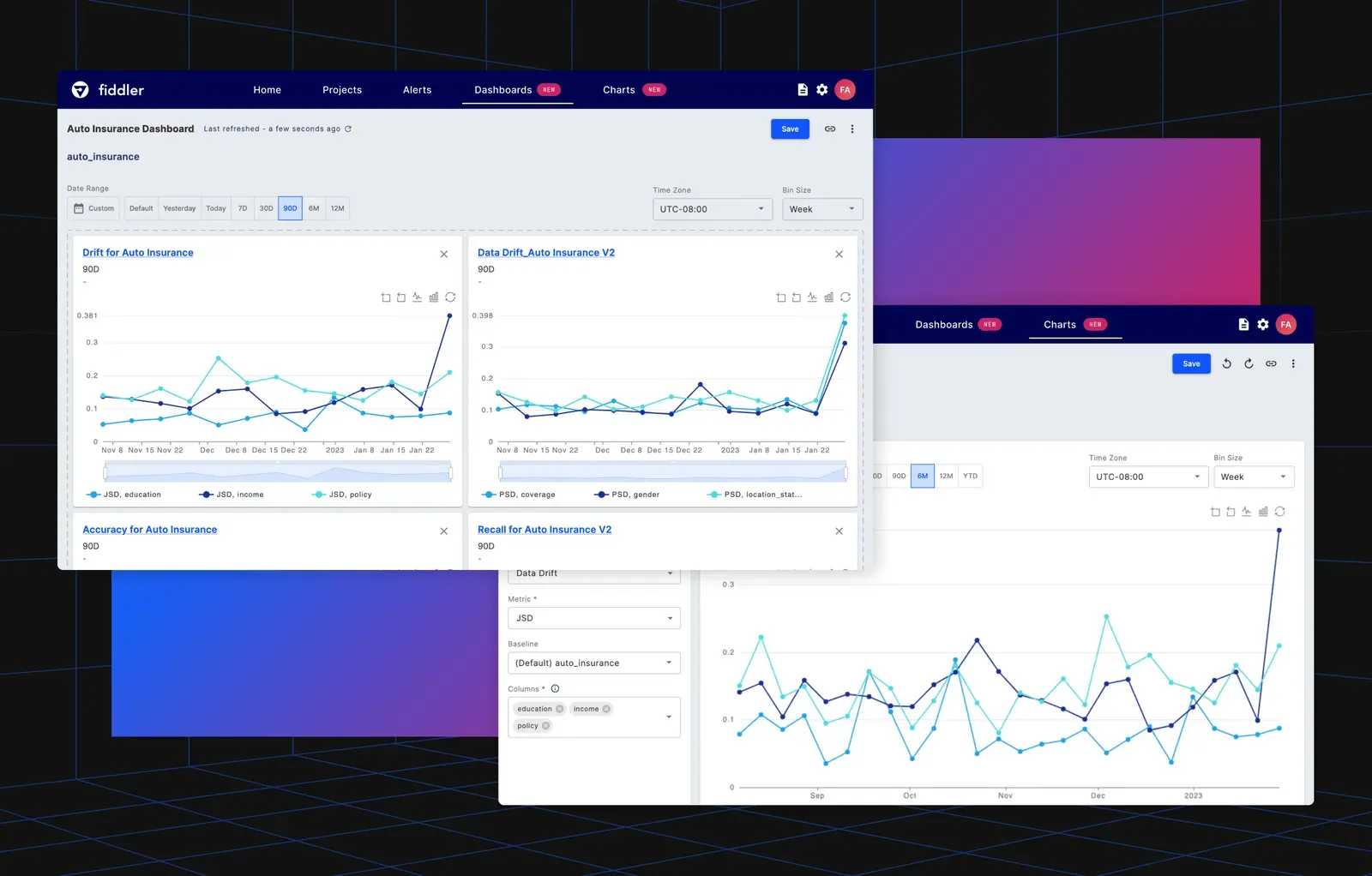

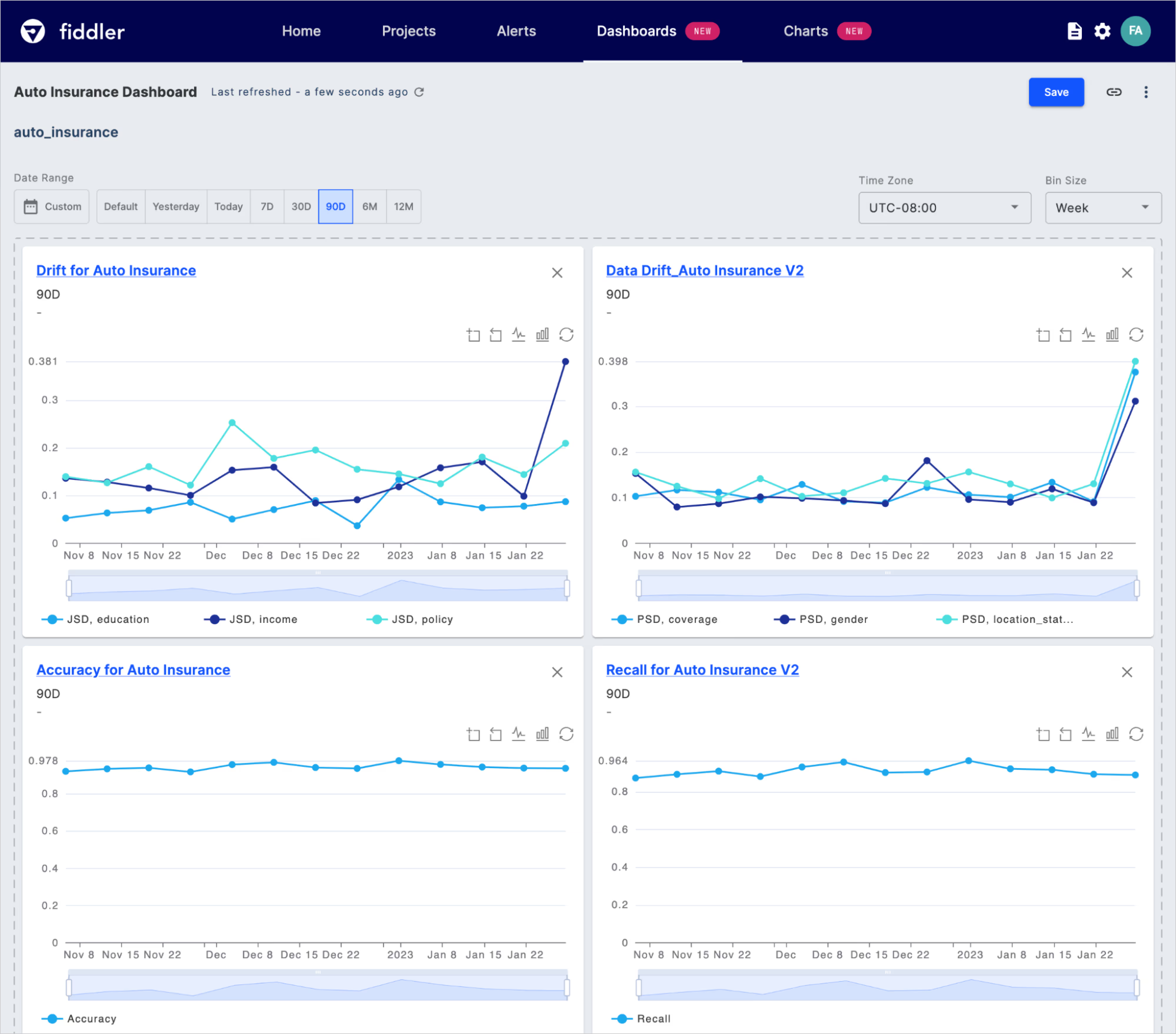

Improve collaboration and alignment with flexible dashboards and reports

Insightful dashboards on model monitoring will break down barriers between MLOps and DS teams and business stakeholders. As a collection of reports, dashboards help ML/DS and business teams gain a deeper understanding of models’ performance and show their impact on business KPIs.

Customizable reports help data science teams plot multiple monitoring metrics, including model performance, drift, data integrity, and traffic metrics. As many as 6 metric queries and up to 20 columns, consisting of features, predictions, targets, and metadata, can be plotted and analyzed for one or more models, all in one report. With this level of granularity, DS and ML teams gain deeper context and understand the correlation among monitoring metrics and how they impact model behavior.

Teams have the flexibility to adjust baseline datasets or use production data as the baseline. Multiple baselines can be compared to understand how different baselines (reflecting data shifts due to seasonality or geography, for example) may influence model drift and model behavior.
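To make the idea of comparing baselines concrete, here is a minimal sketch in plain Python. It is not Fiddler's implementation: it assumes the Population Stability Index (PSI) as the drift metric, and the feature values, bin edges, and function names are all made up for illustration. It scores the same production data against two hypothetical seasonal baselines.

```python
import math

def histogram(values, edges):
    """Fraction of values per bin; out-of-range values go to the first or last bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    return [c / len(values) for c in counts]

def psi(baseline, production, edges, eps=1e-6):
    """Population Stability Index; eps avoids log(0) for empty bins."""
    p = histogram(baseline, edges)
    q = histogram(production, edges)
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))

# Hypothetical values of a single numeric feature (illustrative only).
edges = [0, 20, 40, 60, 80, 100]
winter_baseline = [10, 15, 25, 30, 35, 45, 50, 55, 65, 70]
summer_baseline = [30, 45, 50, 55, 60, 65, 70, 75, 85, 90]
production = [35, 38, 45, 50, 55, 62, 68, 72, 85, 95]

psi_vs_winter = psi(winter_baseline, production, edges)
psi_vs_summer = psi(summer_baseline, production, edges)
print(f"PSI vs winter baseline: {psi_vs_winter:.3f}")
print(f"PSI vs summer baseline: {psi_vs_summer:.3f}")
```

In this toy data the production values resemble the summer baseline far more than the winter one, so the drift score depends heavily on which baseline is chosen. That is the kind of effect a multi-baseline comparison is meant to surface.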

Through shareable dashboards with custom reports, ML teams can enjoy improved model governance by 1) allowing model validators to evaluate and validate models to ensure they meet certain criteria before deployment and 2) allowing regulators to conduct routine audits.

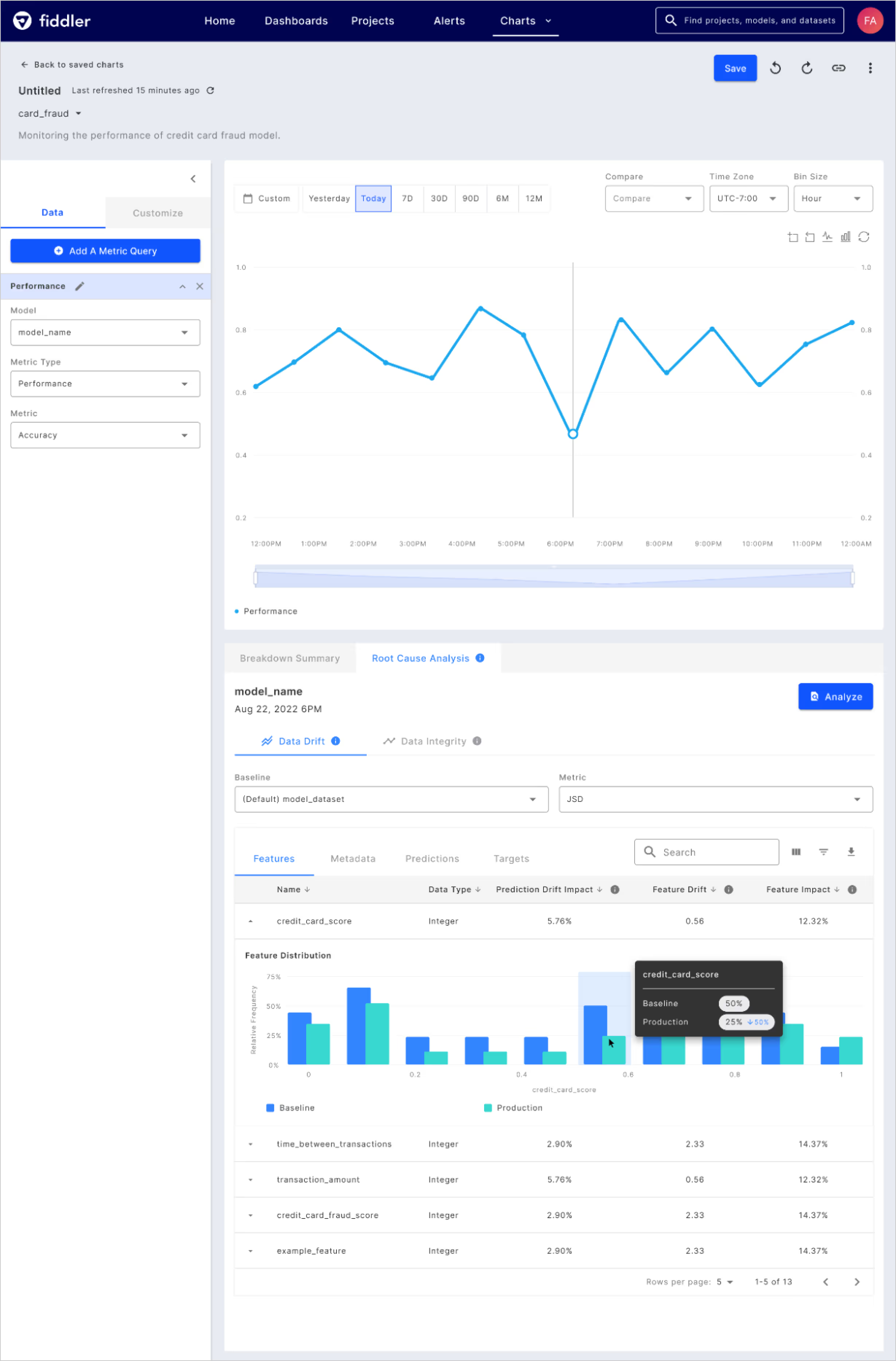

Pinpoint model performance issues with rich diagnostics

We have re-imagined the way ML teams can experience and analyze models to truly close the feedback loop in their MLOps lifecycle and continuously improve model outcomes. With rich diagnostics built on a human-centered design, ML teams can move quickly from alerts to root cause analysis to issue resolution.

Data scientists can draw contextual information from deep and rich diagnostics, helping them be more prescriptive about improving model predictions. Through root cause analysis, ML practitioners are informed about the stage of the ML lifecycle they should revisit to improve their models. They could go as far as wrangling new data to create a completely new hypothesis to solve the business challenge, modifying features and labels, or simply retraining the model with an updated baseline dataset.

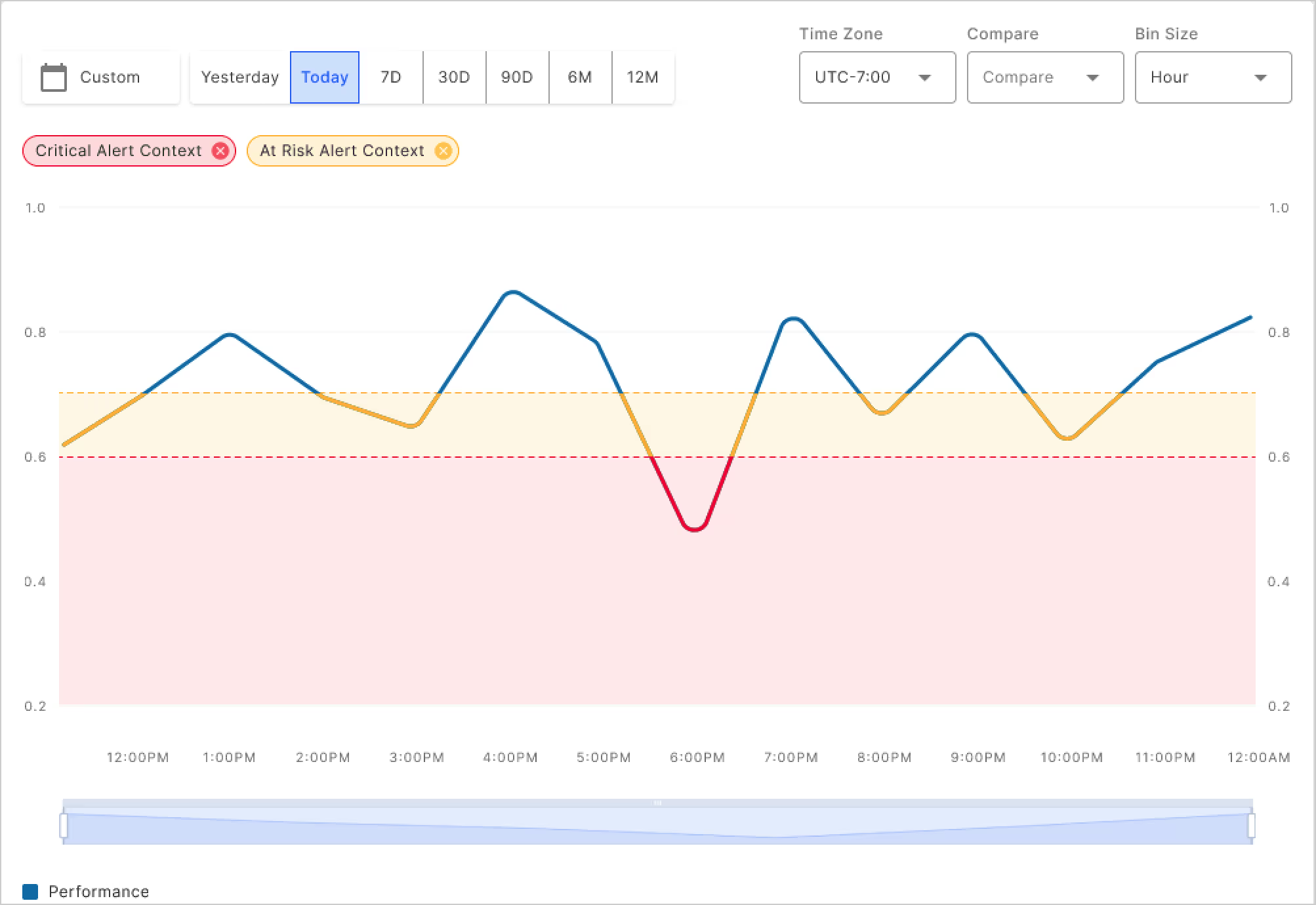

Get early warnings on performance issues with powerful alerts

Fiddler’s powerful and flexible model monitoring alerts enable ML teams to prioritize and troubleshoot issues that have the highest impact on business-critical projects. Alerts are fully customizable and tracked in a unified alerts dashboard. ML teams keep a pulse on their models’ health and get early warning signals to prevent model underperformance or model drift caused by even the slightest shift in data distribution. When an alert notification comes through, ML teams can quickly analyze the severity of the issue, pinpoint exactly where the underperformance happened, and perform root cause analysis to discover the underlying cause of the issue.
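As a rough illustration of how customizable, severity-ranked alerts can work in general, the sketch below evaluates metric readings against warning and critical thresholds and sorts the results so the most severe come first. This is not Fiddler's alerting API: `AlertRule`, `evaluate`, the metric names, and the threshold values are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AlertRule:
    metric: str
    warning: float
    critical: float
    higher_is_worse: bool = True  # False for metrics like accuracy

def evaluate(rule, value):
    """Return 'critical', 'warning', or 'ok' for one metric reading."""
    warning, critical = rule.warning, rule.critical
    if not rule.higher_is_worse:
        # Negate everything so one comparison handles both directions.
        value, warning, critical = -value, -warning, -critical
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "ok"

# Hypothetical rules and latest readings (illustrative only).
rules = [
    AlertRule("drift_score", warning=0.10, critical=0.25),
    AlertRule("accuracy", warning=0.90, critical=0.85, higher_is_worse=False),
]
readings = {"drift_score": 0.18, "accuracy": 0.83}

SEVERITY_ORDER = {"critical": 0, "warning": 1, "ok": 2}
alerts = sorted(
    ((rule.metric, evaluate(rule, readings[rule.metric])) for rule in rules),
    key=lambda item: SEVERITY_ORDER[item[1]],
)
for metric, severity in alerts:
    print(f"{severity.upper():8s} {metric} = {readings[metric]}")
```

Separating the warning threshold from the critical one is what gives a team an early signal before a metric degrades far enough to affect the business.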

Deeper understanding of unstructured models’ drift with rich UMAP visualizations

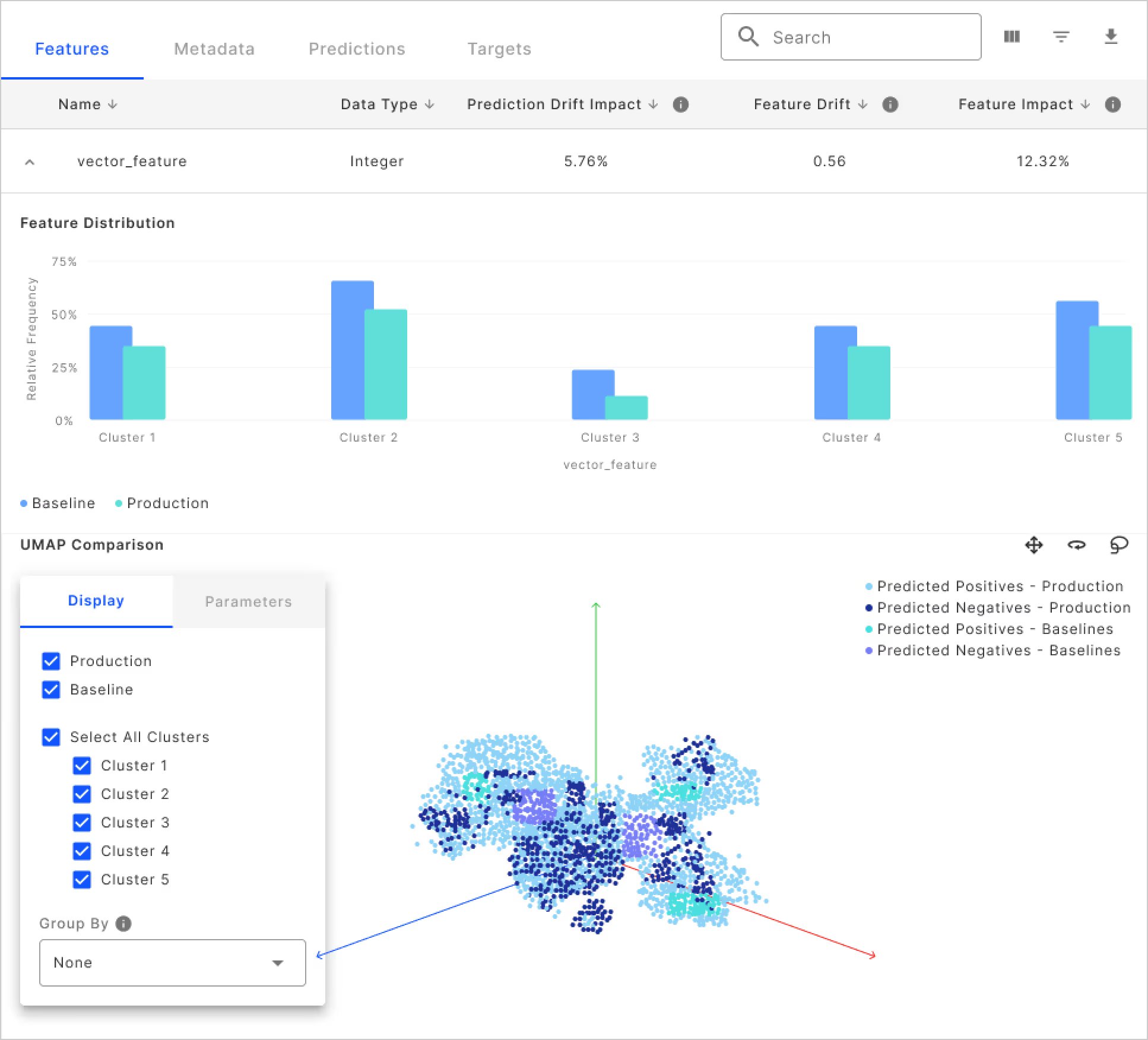

Last year’s groundbreaking advances in AI, specifically in LLMs, have spurred more companies to launch advanced ML projects powered by unstructured models. We continue to enhance and build new monitoring capabilities for unstructured models. Our customers can now visualize where and how drift happened in their natural language processing and computer vision models using Fiddler’s interactive 3D UMAP visualizer. ML practitioners can view the drift that has happened and zoom into any problem area by clicking on a particular data point on the UMAP to open up and view the actual image that has drifted.
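For readers curious how drift in unstructured data can be quantified at all, one generic approach is to cluster the baseline embeddings, count how many points fall into each cluster, and compare those proportions between baseline and production. The sketch below illustrates that general idea only. It is not Fiddler's patent-pending algorithm, it does not use UMAP, and the tiny 2-D "embeddings" and helper names are invented for the example.

```python
import math

def nearest(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    dists = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return dists.index(min(dists))

def kmeans(points, k, iterations=10):
    """Tiny k-means; starts from k evenly spaced points so runs are repeatable."""
    step = len(points) // k
    centroids = [points[i * step] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        for i, g in enumerate(groups):
            if g:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(dim) / len(g) for dim in zip(*g))
    return centroids

def cluster_histogram(points, centroids):
    """Fraction of points assigned to each centroid."""
    counts = [0] * len(centroids)
    for p in points:
        counts[nearest(p, centroids)] += 1
    return [c / len(points) for c in counts]

def js_divergence(p, q):
    """Jensen-Shannon divergence in base 2, so the result lies in [0, 1]."""
    def kl(a, b):
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)
    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical 2-D embeddings (real ones have hundreds of dimensions).
baseline = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
production = [(0.1, 0.1), (5.1, 5.0), (5.0, 4.8), (4.8, 5.2), (5.3, 5.1), (5.1, 4.9)]

centroids = kmeans(baseline, k=2)
base_hist = cluster_histogram(baseline, centroids)
prod_hist = cluster_histogram(production, centroids)
drift = js_divergence(base_hist, prod_hist)
print("baseline cluster shares:  ", base_hist)
print("production cluster shares:", prod_hist)
print(f"embedding drift (JSD): {drift:.3f}")
```

A single score like this says that the mix of content has shifted, while a visual projection of the embeddings helps show where the shifted points sit and what they look like.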

Pricing: meeting you where you are

Just like machine learning models, pricing can be opaque, especially in this high-growth market. As a mission-driven company helping teams achieve responsible AI by ensuring model outcomes are fair and trustworthy, we believe that our pricing should be in the same vein: grounded in transparency.

The objective of our pricing is twofold:

- Make pricing as simple and frictionless as possible so that our customers only pay for what they need

- Meet our customers where they are on their AI journey and grow with them as they scale AI across the organization

Learn more about our pricing methodology.

We look forward to continuing to bolster our MPM platform as the year of AI unfolds. Request a demo.