The Fiddler AI Observability platform delivers leading interpretability methods by combining established explainable AI techniques, including Shapley values and Integrated Gradients, with proprietary explainable AI methods. Get fast explanations of your ML model’s predictions with Fiddler SHAP, an explanation method born from our award-winning AI research.
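As a rough illustration of one of the methods named above, here is a minimal plain-Python sketch of Integrated Gradients (IG). It is not Fiddler's implementation: the toy model `model`, its hand-written gradient `model_grad`, the all-zeros baseline, and the helper `integrated_gradients` are hypothetical. The sketch approximates the path integral from the baseline to the input with a midpoint Riemann sum.

```python
from typing import Callable, List

Vector = List[float]


def integrated_gradients(
    grad: Callable[[Vector], Vector],
    x: Vector,
    baseline: Vector,
    steps: int = 50,
) -> Vector:
    """Approximate IG_i = (x_i - b_i) * integral_0^1 dF/dx_i(b + a*(x - b)) da."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th slice of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad(point)):
            total[i] += g
    # Average gradient along the straight-line path, scaled by distance from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


# Hypothetical toy model F(x) = x0^2 + 3*x0*x1 and its analytic gradient.
def model(x: Vector) -> float:
    return x[0] ** 2 + 3 * x[0] * x[1]


def model_grad(x: Vector) -> Vector:
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


x = [2.0, 1.0]
baseline = [0.0, 0.0]
attributions = integrated_gradients(model_grad, x, baseline)
print(attributions)  # approximately [7.0, 3.0]
print(sum(attributions), model(x) - model(baseline))  # both approximately 10.0
```

Because IG attributions sum to the difference between the model's output at the input and at the baseline (the completeness property), checking that sum is a quick sanity test for any IG implementation.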


To ensure continuous transparency, Fiddler automates documentation of explainable AI projects and delivers prediction explanations to support future model governance and review requirements. You’ll always know what’s in your training data, models, and production inferences.

You can deploy AI governance and model risk management processes effectively with Fiddler.

By proactively identifying and addressing deep-rooted model bias and other model issues with Fiddler, you not only safeguard against costly fines and penalties but also reduce the risk of negative publicity. Stay ahead of AI disasters and protect your brand’s reputation.

It’s important to understand model performance and behavior before putting anything into production. That requires complete context and visibility into model behavior, from training to production.

When areas of low performance and other potential issues are identified and resolved before customers encounter them, the customer experience improves, leading to higher Net Promoter Scores (NPS) and stronger customer recommendations.

Zoom in on the details

Perform counterfactual analysis in real time.

Get explanations faster than with perturbation-based methods alone.

Explain complex recursive inputs for fine-grained attributions.

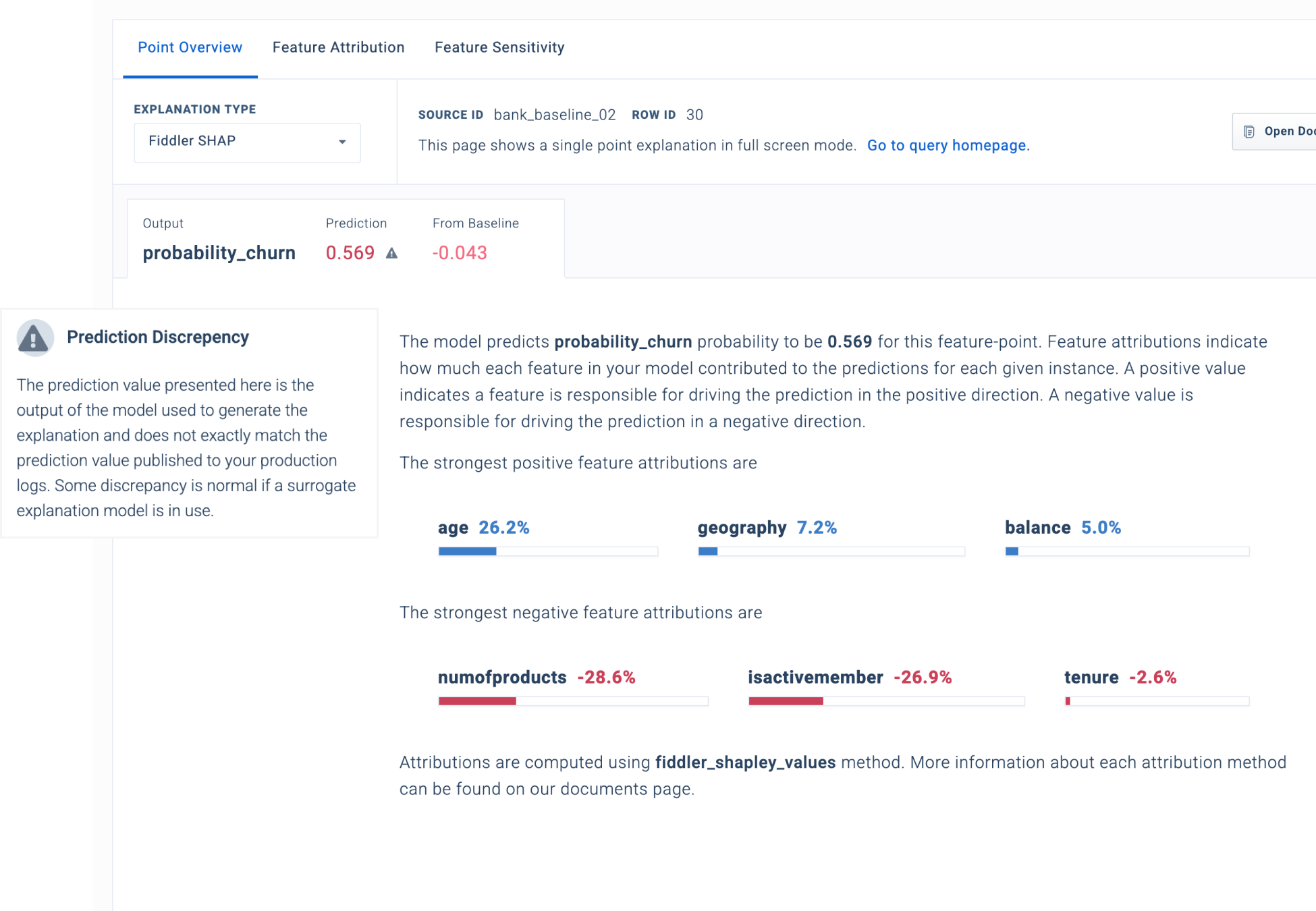

Increase your model’s transparency and interpretability using SHAP values, including our award-winning Fiddler SHAP method.
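To make the idea behind SHAP values concrete, the sketch below computes exact Shapley values for a hypothetical three-feature model by enumerating every feature coalition. It uses a single fixed baseline to stand in for "missing" features, which is one common simplification. It is not Fiddler SHAP; `shapley_values`, `toy_model`, and the baseline are illustrative assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(
    f: Callable[[List[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values; cost grows as 2^n, so only viable for a few features."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition keep their real value; the rest use the baseline.
        return f([x[j] if j in coalition else baseline[j] for j in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! applies to every coalition S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model: an additive term plus an x1*x2 interaction.
def toy_model(z: List[float]) -> float:
    return 2 * z[0] + z[1] * z[2]


phi = shapley_values(toy_model, x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print(phi)  # approximately [2.0, 3.0, 3.0]; the interaction is split evenly
```

Exact enumeration is exponential in the number of features, which is why practical SHAP tooling relies on sampling or model-specific approximations.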

Run deep learning models, including natural language processing (NLP) and computer vision (CV) models, faster, and understand how features contribute to data skew and model predictions.

Gain a better understanding of your model’s predictions by changing any input value and observing how the outcome changes.
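A what-if analysis of this kind can be sketched in a few lines: copy an input record, change one value, and compare the model's scores. The logistic model, its weights, the feature names, and the `what_if` helper below are hypothetical illustrations, not Fiddler's API.

```python
import math
from typing import Dict, Tuple

# Hypothetical logistic "approval" model; weights and feature names are illustrative only.
WEIGHTS: Dict[str, float] = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0


def predict(features: Dict[str, float]) -> float:
    """Logistic score in (0, 1) for a single record."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def what_if(features: Dict[str, float], name: str, new_value: float) -> Tuple[float, float]:
    """Return (original score, score after changing one feature)."""
    changed = dict(features)  # copy so the original record is left untouched
    changed[name] = new_value
    return predict(features), predict(changed)


applicant = {"income_k": 60.0, "debt_ratio": 0.4, "late_payments": 2.0}
before, after = what_if(applicant, "late_payments", 0.0)
print(f"score before: {before:.3f}, after: {after:.3f}")
```

Working on a copy keeps each scenario independent, so several what-if changes can be compared against the same original record.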

Understand how each selected feature contributes to the model’s predictions overall (global), and uncover the root cause of an individual prediction issue (local).
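The local/global distinction is easiest to see with a hypothetical linear model, where local attributions have a closed form: with independent features, feature i's SHAP value is w_i * (x_i - E[x_i]). The sketch below uses that formula for the local view and averages attribution magnitudes over a small made-up dataset for the global view. The weights, data, and helper names are assumptions for illustration.

```python
from typing import List, Sequence


def local_attributions(
    weights: Sequence[float], x: Sequence[float], means: Sequence[float]
) -> List[float]:
    # For a linear model with independent features, w_i * (x_i - E[x_i]) is the SHAP value.
    return [w * (xi - m) for w, xi, m in zip(weights, x, means)]


def global_importance(weights: Sequence[float], data: Sequence[Sequence[float]]) -> List[float]:
    # Global view: average magnitude of each feature's local attribution over the dataset.
    n = len(data)
    means = [sum(row[j] for row in data) / n for j in range(len(weights))]
    totals = [0.0] * len(weights)
    for row in data:
        for j, a in enumerate(local_attributions(weights, row, means)):
            totals[j] += abs(a)
    return [t / n for t in totals]


weights = [2.0, -0.5]  # hypothetical linear model f(x) = 2*x0 - 0.5*x1
data = [[1.0, 5.0], [3.0, 5.0], [2.0, 2.0]]
print(global_importance(weights, data))  # approximately [1.333, 0.667]
print(local_attributions(weights, data[0], [2.0, 4.0]))  # [-2.0, -0.5]
```

The local attributions for one row sum to that row's prediction minus the prediction at the feature means, which is what lets them point at the cause of a single unexpected output.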

Improve the interpretability of your models before they go into production by using automatically generated surrogate models.
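A global surrogate is a simpler, interpretable model trained to reproduce a complex model's outputs. As a hypothetical sketch (not how Fiddler generates its surrogates), the code below fits a one-feature least-squares line to a black box's predictions and reports R² against those predictions as the surrogate's fidelity. The black-box functions and `fit_linear_surrogate` are illustrative assumptions.

```python
from typing import Callable, Sequence, Tuple


def fit_linear_surrogate(
    black_box: Callable[[float], float], xs: Sequence[float]
) -> Tuple[float, float, float]:
    """Fit y = intercept + slope * x to the black box's outputs; also return fidelity (R^2)."""
    ys = [black_box(x) for x in xs]  # the surrogate learns the model's outputs, not true labels
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    fidelity = 1.0 - ss_res / ss_tot if ss_tot > 0 else 1.0
    return intercept, slope, fidelity


# Hypothetical black boxes: one the surrogate can mimic, one it cannot.
print(fit_linear_surrogate(lambda x: 3 * x + 1, [0.0, 1.0, 2.0, 3.0]))
print(fit_linear_surrogate(lambda x: x ** 2, [-2.0, -1.0, 0.0, 1.0, 2.0]))
```

The second call fits a flat line with zero fidelity: a surrogate whose fidelity is low does not faithfully describe the model, so its explanation should not be trusted.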

Bring your own custom explainers, tailored to your use case, into our patented UI via APIs.

Explainable AI (XAI) refers to techniques and methods that make machine learning model decisions transparent and understandable to humans. It helps users interpret how AI models generate predictions, ensuring trust and accountability.

Explainable AI (XAI) is crucial for increasing transparency, trust, and fairness in AI systems. It helps engineering leaders, developers, and data scientists understand model decisions, reducing the risks associated with bias, errors, and compliance violations.

XAI improves model accountability, builds user trust, and helps organizations detect and mitigate biases. It also enhances regulatory compliance, facilitates debugging, and helps increase the adoption of AI-driven decisions.

By making AI decision-making transparent and interpretable, XAI reassures developers and data scientists that models are functioning fairly and accurately. It provides justifications for predictions, making AI systems more accountable and trustworthy.

Explainable AI (XAI) refers to systems that provide clear, understandable explanations of machine learning decision-making processes. XAI helps build trust with stakeholders by making the reasoning behind predictions transparent and AI-driven insights interpretable.

XAI identifies potential biases by analyzing how different input variables impact predictions across various demographic groups. This enables organizations to detect and correct unfair biases, improving AI fairness and inclusivity.
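Attribution-based bias analysis is more involved than a short example allows, so the sketch below swaps in a simpler, widely used check: compare the rate of positive predictions across groups and take the ratio of the lowest rate to the highest. The predictions, the group labels "A" and "B", and the helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each group."""
    counts: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        counts[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / counts[g] for g in counts}


def disparate_impact_ratio(rates: Dict[Hashable, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical binary predictions for two demographic groups, "A" and "B".
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(preds, groups)
print(rates, disparate_impact_ratio(rates))
```

A ratio below 0.8 is often flagged under the "four-fifths rule" heuristic from US employment guidelines, though the appropriate threshold depends on the use case.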