Fiddler for AI Governance, Risk Management, and Compliance (GRC)

Enterprise Oversight and Compliance for Agents and Predictive Applications

As AI advances, regulations and guidance such as SR 11-7, HIPAA, NAIC guidance, the EU AI Act, the AI Bill of Rights, OMB M-26-04, and the California AI bills continue to emerge, setting governance, risk, and compliance (GRC) standards. These rules aim to increase trust and transparency in AI systems and protect consumers from harmful or biased outcomes. By implementing unified observability, guardrails, and governance, enterprises can deploy agents and predictive applications at scale with complete audit trails that meet evolving regulatory requirements.

Enterprises Trust Fiddler for GRC

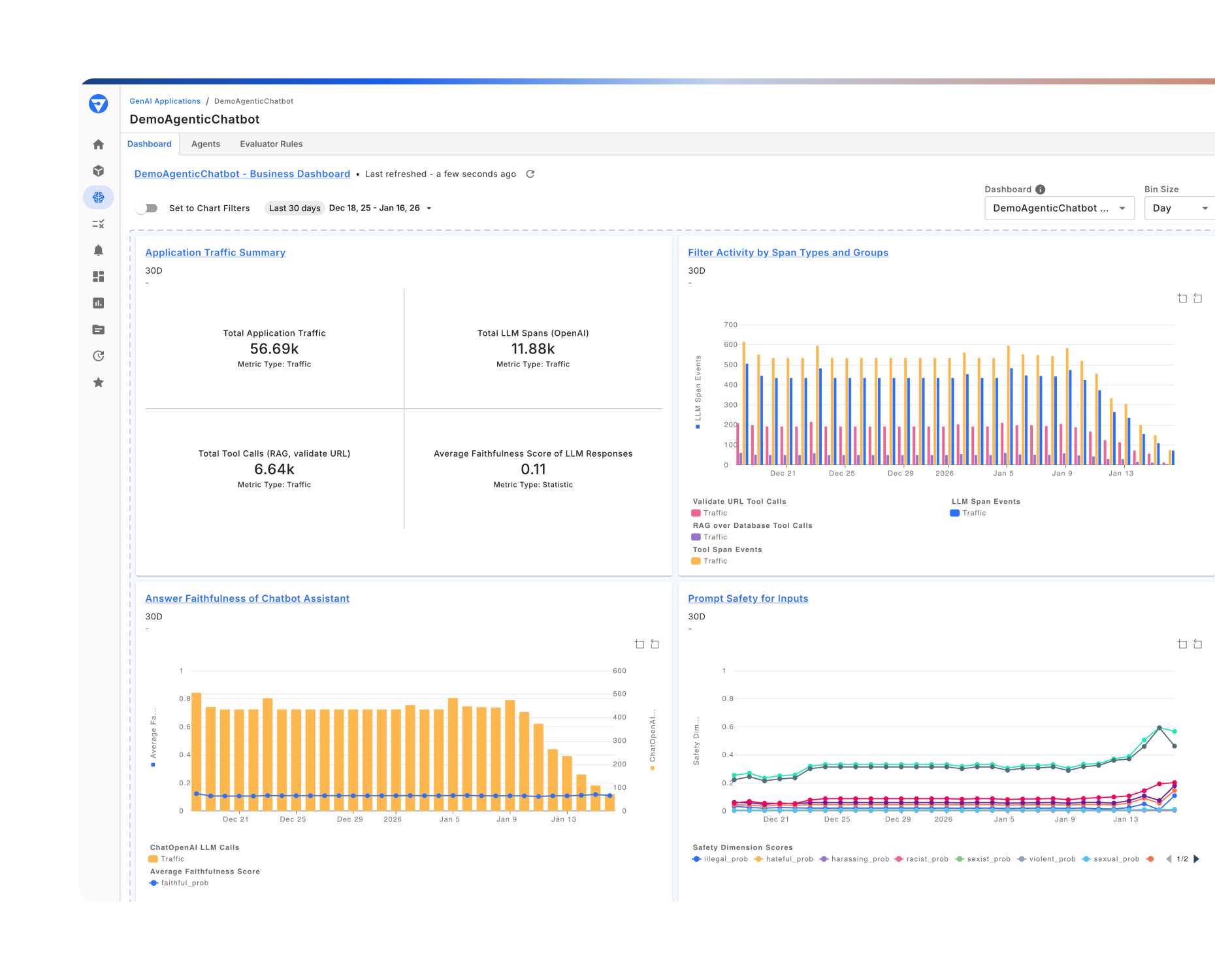

The Fiddler AI Observability and Security platform provides centralized governance and oversight for agents and predictive models across the enterprise. It records every agent behavior, action, model decision, and performance metric, generating the audit evidence and comprehensive audit trails that regulatory reviews require.

AI Transparency, Audit Trails, and Documentation

Generate comprehensive audit trails that maintain complete accountability across every decision, action, evaluation, and policy outcome.

- Provide audit evidence aligned with enterprise governance and regulatory review requirements (GDPR, HIPAA, NAIC, SR 11-7)

- Maintain comprehensive documentation that enhances accountability and transparency across all AI deployments

- Support compliance with deep diagnostics that reveal root causes of agent failures and model degradation
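To make the idea of an audit trail concrete, here is a minimal sketch of an append-only event log in Python. It is an illustration only, not Fiddler's API or storage format: the function names (`append_event`, `verify`), the record fields, and the hash-chaining design are all assumptions chosen for the example. Each record stores the hash of the previous one, so a later edit to any record can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder hash for the first record (illustrative choice)


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_event(trail: list, kind: str, actor: str, detail: dict) -> dict:
    """Append one decision, action, evaluation, or policy outcome to the trail."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "kind": kind,      # hypothetical values: "decision", "action", "evaluation"
        "actor": actor,    # hypothetical agent or model identifier
        "detail": detail,  # must be JSON-serializable
        "prev_hash": trail[-1]["hash"] if trail else GENESIS,
    }
    record = {**body, "hash": _digest(body)}
    trail.append(record)
    return record


def verify(trail: list) -> bool:
    """Return True if no record has been altered, removed, or reordered."""
    prev = GENESIS
    for record in trail:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev or record["hash"] != _digest(body):
            return False
        prev = record["hash"]
    return True
```

A reviewer could run `verify(trail)` before accepting the log as evidence. A production system would also need durable storage and access controls, which this sketch leaves out.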

Learn how Integral Ad Science scales transparent and compliant AI products using Fiddler.

AI Risk Identification and Mitigation

Proactively assess and mitigate risks to prevent negative impacts on end-users and the enterprise.

- Build a robust model risk management (MRM) framework with greater model transparency to support periodic reviews, including those conducted under the Federal Reserve and OCC's SR 11-7 guidance

- Detect and mitigate risks across agents and predictive models, including toxic outputs, PII/PHI leakage, drift, bias, privacy breaches, and unfair outcomes

- Enforce policies, enterprise rules, and approval workflows with guardrails, and use real-time alerts to trigger immediate action when issues are identified
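As one illustration of drift detection paired with an alert, the sketch below computes the Population Stability Index (PSI) between a baseline sample and a production sample of a single numeric feature. This is a generic technique, not a description of how Fiddler computes drift. The function names, the 10-bin default, and the 0.2 alert threshold (a common rule of thumb) are assumptions for the example.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], production: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    b, p = fractions(baseline), fractions(production)
    return sum((pi - bi) * math.log(pi / bi) for bi, pi in zip(b, p))


def drift_alert(score: float, threshold: float = 0.2) -> bool:
    """Return True when the PSI score exceeds the (assumed) alert threshold."""
    return score > threshold
```

For example, `drift_alert(psi(training_values, last_week_values))` could gate a notification or a review workflow, where `training_values` and `last_week_values` are hypothetical lists of feature values.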

Learn how to create custom reports for MRM and compliance reviews in Fiddler.

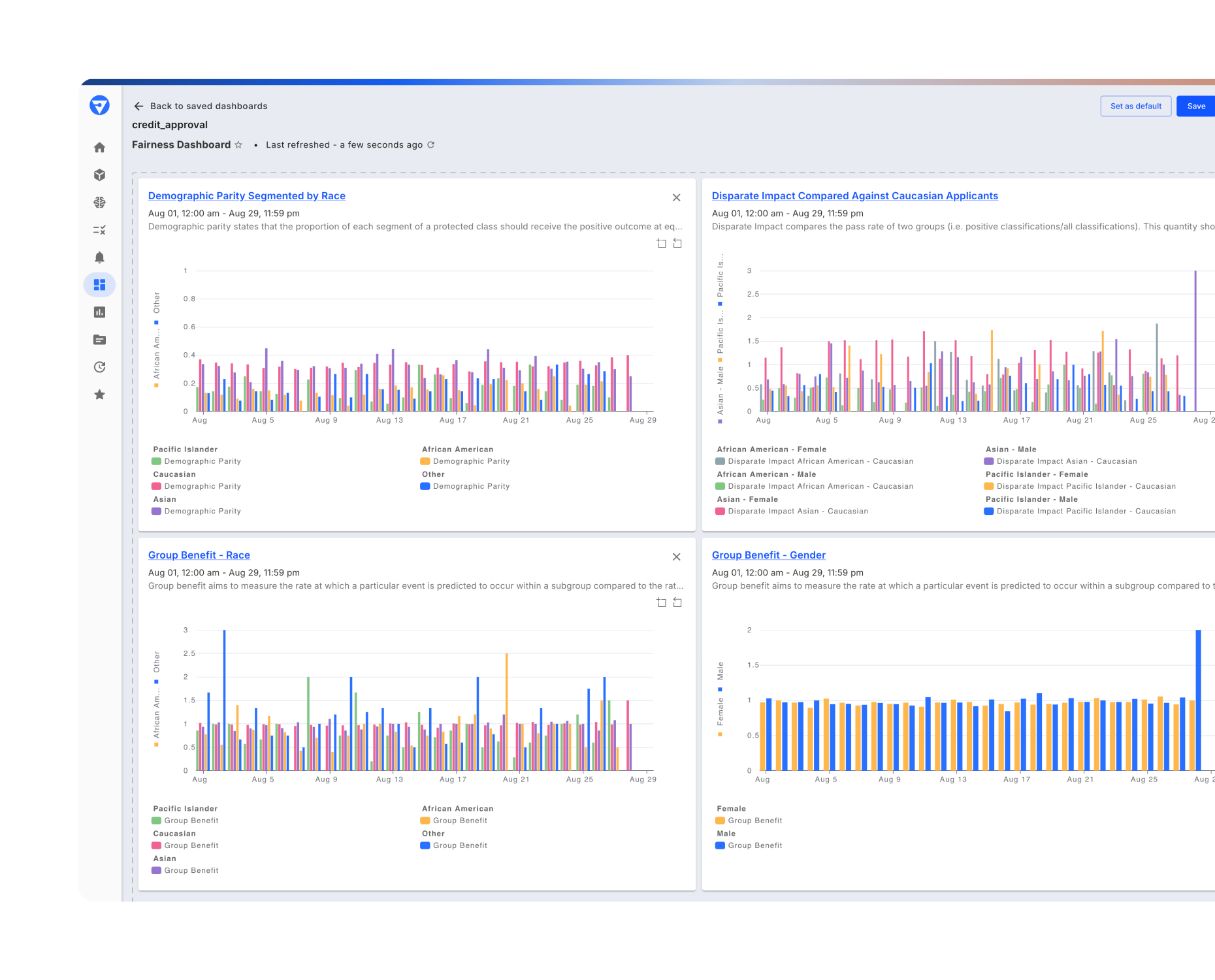

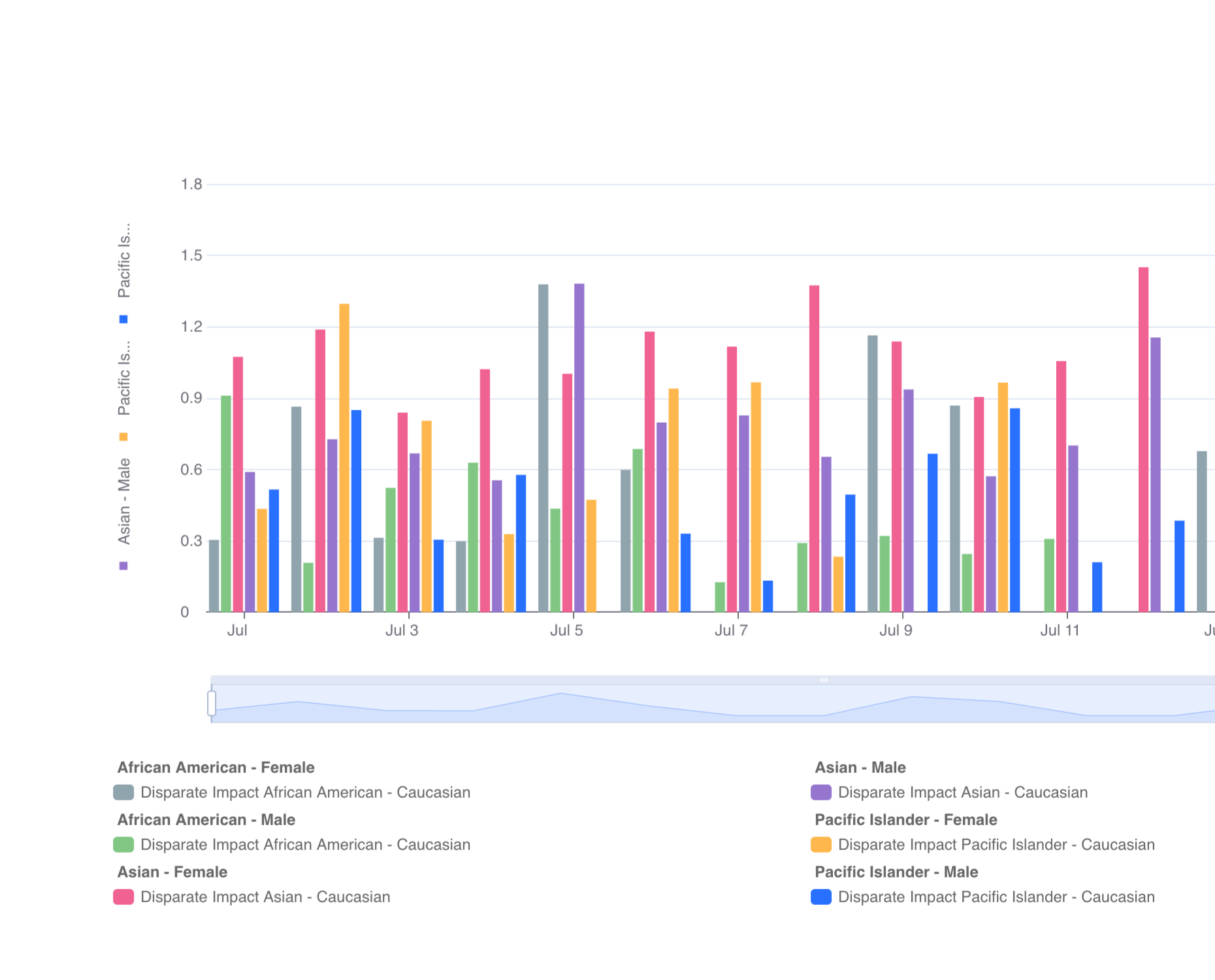

Ethical and Responsible AI Practices

Enforce ethical AI practices that deliver transparent, trustworthy, and equitable outcomes.

- Implement human-in-the-loop approvals for sensitive or high-risk decisions

- Prevent bias and unfair outcomes across protected attributes to minimize compliance challenges, legal risks, and reputational damage

- Intervene, pause, reroute, or escalate actions when agent behavior or model predictions deviate from ethical standards
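The practices above can be sketched as a simple fairness check feeding a routing decision. The example below is a hypothetical illustration, not Fiddler's implementation: it computes the disparate impact ratio (lowest group selection rate divided by the highest) and then decides whether to proceed, escalate to a human, or pause. The function names, the 0.8 cutoff (borrowed from the four-fifths rule of thumb), and the 0.9 confidence cutoff are assumptions.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(groups: Sequence[Hashable], outcomes: Sequence[int]) -> Dict[Hashable, float]:
    """Fraction of positive outcomes (1) per protected-attribute group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(groups: Sequence[Hashable], outcomes: Sequence[int]) -> float:
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    rates = selection_rates(groups, outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def route(ratio: float, confidence: float,
          min_ratio: float = 0.8, min_confidence: float = 0.9) -> str:
    """Decide what to do with a pending action or prediction."""
    if ratio < min_ratio:
        return "pause"              # fairness check failed: stop automated decisions
    if confidence < min_confidence:
        return "escalate_to_human"  # low-confidence decision: require human approval
    return "proceed"
```

In this sketch the fairness check takes priority over the confidence check, so a disparity pauses automated decisions even when individual predictions are confident. Other orderings are possible depending on policy.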

Explore how Fiddler tracks fairness and bias in agents and predictive applications.