Fiddler AI Observability for Insurance

Unlock AI Value, Control AI Risk, Maintain NAIC Compliance

Mitigate Financial and Brand Risk

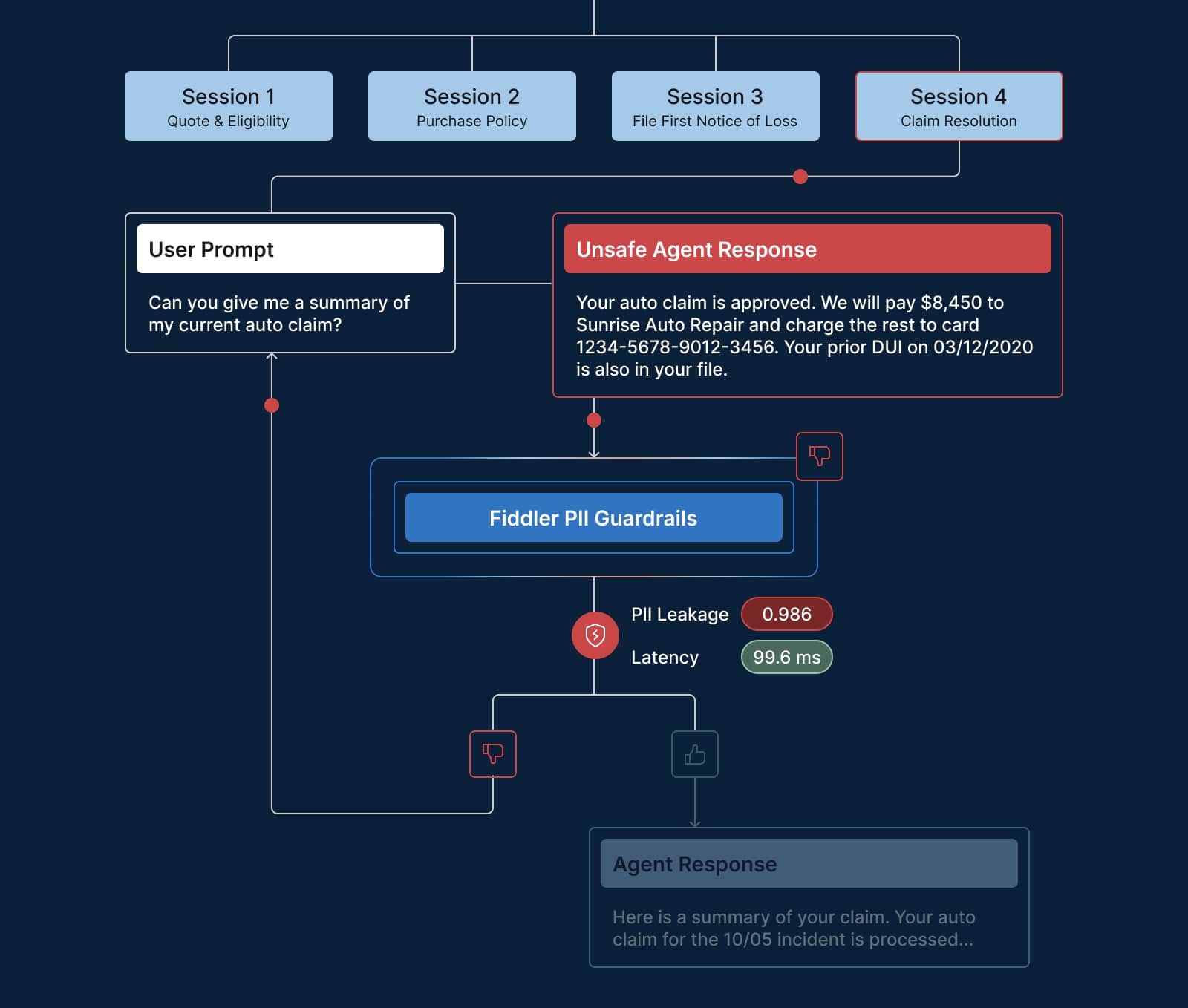

Deploying GenAI in insurance creates a high-stakes gauntlet of risks. For example, a customer-facing agent can hallucinate false policy information or leak sensitive PII. Fiddler Guardrails provide a proactive first line of defense, securely moderating these risks in real time before they can cause regulatory penalties or brand damage.

Fiddler helps align AI operations with regulatory expectations and brand standards, reducing deployment timelines from months to days.

Operationalize NAIC-Aligned AI Governance at Scale

Manual AI governance does not scale across hundreds of models and use cases. Fragmented processes and siloed data create blind spots that expose you to regulatory penalties. Fiddler acts as your governance and compliance proof engine.

Fiddler provides continuous monitoring, fairness auditing, and reporting to satisfy regulators, drastically reducing engineering and audit preparation overhead.

Maximize ROI

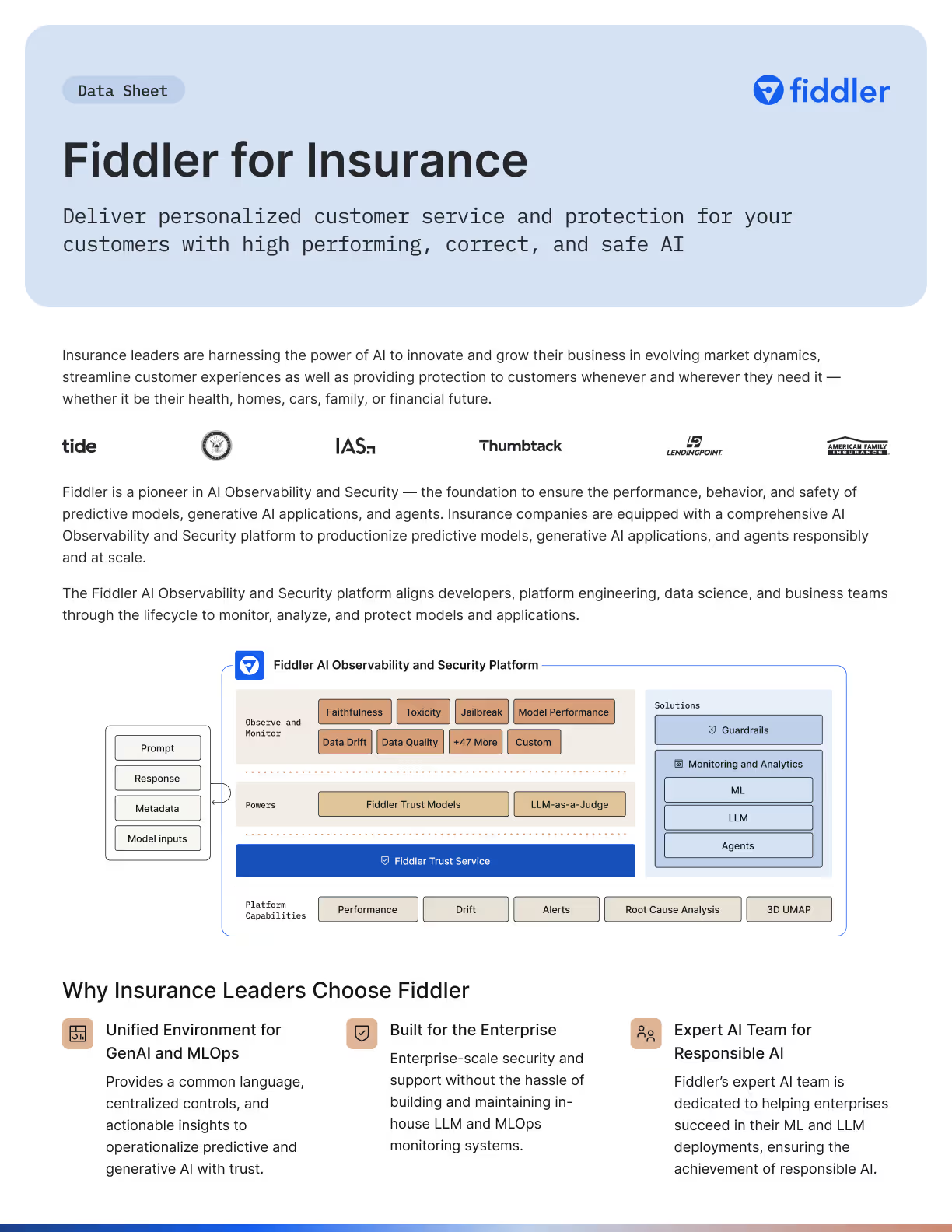

Other platforms rely on external API calls to third-party providers for scoring. That introduces significant, unpredictable API and token costs, along with the security risk of sending proprietary data outside your environment. Fiddler Trust Models are native, not additive.

This “batteries-included” approach means zero hidden API and token costs, and the assurance that your data never leaves your secure environment.

A Unified Observability Platform for Insurance

Fiddler delivers a single, integrated platform to monitor, analyze, and protect every type of AI application, from ML models to the most advanced AI agents.

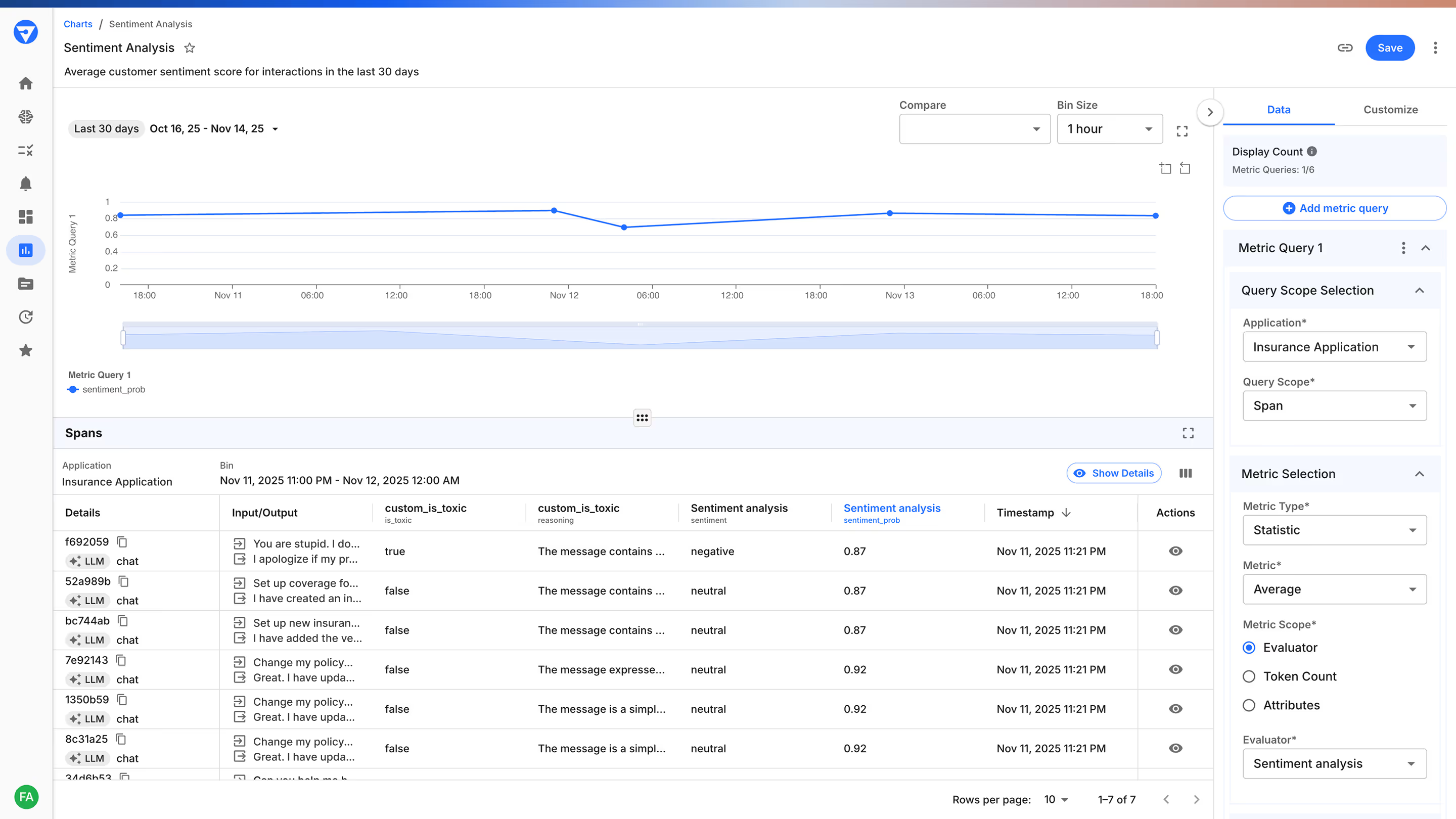

See Every Action, Understand Every Decision, Control Every Outcome

When AI agents take autonomous actions like processing complex claims, automating risk assessment, or interacting with customers, failures can cause operational chaos, risk regulatory non-compliance, and damage brand trust.

Fiddler Agentic Observability monitors every layer of the agentic hierarchy, providing visibility at each level: session → AI agent → trace → span. This is essential for monitoring, governing, and understanding the emergent behavior of these complex autonomous systems.
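To make the hierarchy above concrete, here is a minimal sketch of how session, agent, trace, and span records might nest. The class names, fields, and the `failed_spans` helper are hypothetical illustrations, not Fiddler's data model or API.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


# Hypothetical record types for illustration only; not Fiddler's schema.
@dataclass
class Span:
    name: str
    duration_ms: float
    error: bool = False


@dataclass
class Trace:
    trace_id: str
    spans: List[Span] = field(default_factory=list)


@dataclass
class Agent:
    name: str
    traces: List[Trace] = field(default_factory=list)


@dataclass
class Session:
    session_id: str
    agents: List[Agent] = field(default_factory=list)

    def failed_spans(self) -> Iterator[Tuple[str, str, str, str]]:
        """Walk session -> agent -> trace -> span and yield the path to each failed span."""
        for agent in self.agents:
            for trace in agent.traces:
                for span in trace.spans:
                    if span.error:
                        yield (self.session_id, agent.name, trace.trace_id, span.name)


# One claims-intake session with a single failing step.
session = Session(
    "s-1",
    [
        Agent(
            "claims_intake",
            [Trace("t-1", [Span("parse_fnol", 12.0), Span("lookup_policy", 40.5, error=True)])],
        )
    ],
)
failures = list(session.failed_spans())
```

Keeping the full path (session, agent, trace, span) with each failure is what lets a reviewer move from a customer-level incident down to the individual step that caused it.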

Safeguard LLMs from Hallucinations and PII Leaks

LLMs introduce risks such as hallucinations, PII/PHI leaks, jailbreak attempts, and toxic responses.

Fiddler real-time guardrails intercept and block harmful inputs and outputs before they can impact policyholders or damage brand reputation.
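As a rough illustration of the intercept-and-block pattern, the sketch below checks a model response for PII before it is returned. It uses simple regular expressions as a stand-in technique; it is not how Fiddler Guardrails or the Fiddler Trust Models score content, and `check_output`, `GuardrailResult`, and both patterns are hypothetical.

```python
import re
from typing import NamedTuple, Tuple

# Illustrative patterns only; real PII detection needs far broader coverage.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


class GuardrailResult(NamedTuple):
    allowed: bool               # False when any PII pattern matched
    text: str                   # response with matches redacted
    findings: Tuple[str, ...]   # labels of the patterns that matched


def check_output(text: str) -> GuardrailResult:
    """Scan a response for PII-like strings and redact them before delivery."""
    findings = []
    redacted = text
    for label, pattern in (("ssn", SSN_PATTERN), ("email", EMAIL_PATTERN)):
        if pattern.search(redacted):
            findings.append(label)
            redacted = pattern.sub(f"[REDACTED {label.upper()}]", redacted)
    return GuardrailResult(allowed=not findings, text=redacted, findings=tuple(findings))


leaky = check_output("Your SSN 123-45-6789 is on file.")
clean = check_output("Your claim has been received.")
```

A caller would typically return `text` only when `allowed` is true, and otherwise log the findings and fall back to a safe response.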

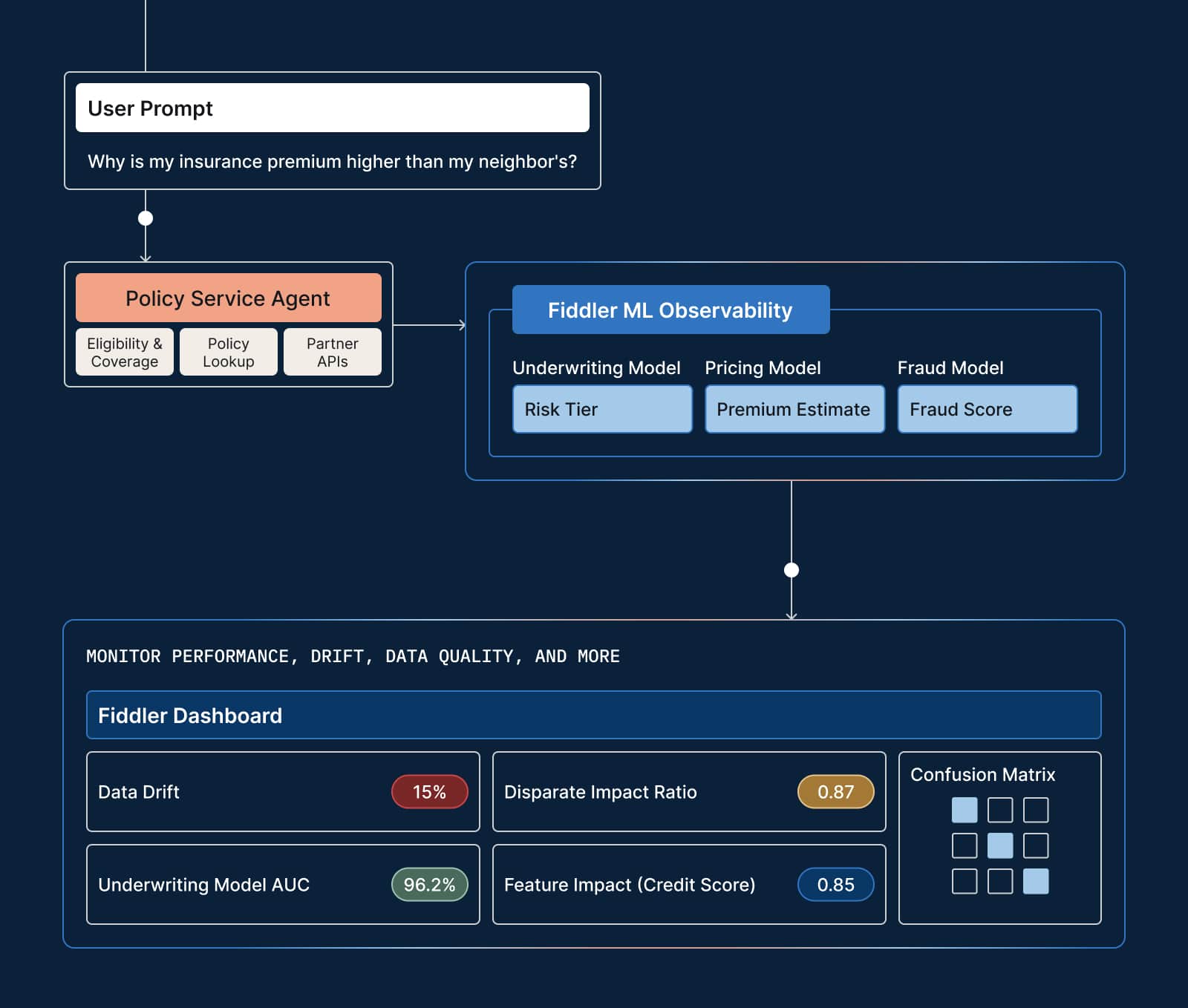

Ensure Fairness and Reliability in Predictive Models

Core predictive models for underwriting, pricing, and fraud are not static. They can decay over time as data drifts, or develop biases, exposing you to significant NAIC scrutiny and brand damage.
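One common way to quantify the data drift described above is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is a generic illustration under that assumption; the source does not say which drift metrics Fiddler uses, and the function name and example bins are hypothetical.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as proportions that each sum to 1."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the logarithm stays defined.
        e = max(e, eps)
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical two-bin feature: baseline split 50/50, production split 70/30.
baseline = [0.5, 0.5]
production = [0.7, 0.3]
psi_value = population_stability_index(baseline, production)
```

Values near zero indicate a stable distribution. Thresholds of roughly 0.1 and 0.25 are often cited as rules of thumb for moderate and significant shift, but the right cut-off depends on the model and feature.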

Fiddler's ML Observability provides deep root cause analysis and helps you demonstrate fairness to auditors through key regulatory metrics such as Disparate Impact and Adverse Impact Ratio, among others.
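For readers unfamiliar with the metric, Disparate Impact (also called the Adverse Impact Ratio) is conventionally the favorable-outcome rate of a protected group divided by that of a reference group. The sketch below computes it from raw decisions. The function names and sample data are hypothetical, and this is the generic textbook definition rather than Fiddler's implementation.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(approved: Sequence[bool], groups: Sequence[str]) -> Dict[str, float]:
    """Share of favorable outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for ok, group in zip(approved, groups):
        totals[group] += 1
        favorable[group] += int(ok)
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(
    approved: Sequence[bool], groups: Sequence[str], protected: str, reference: str
) -> float:
    """Ratio of the protected group's approval rate to the reference group's."""
    rates = selection_rates(approved, groups)
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical underwriting decisions: group A approved 4 of 5, group B approved 2 of 5.
decisions = [True, True, True, True, False, True, True, False, False, False]
membership = ["A"] * 5 + ["B"] * 5
ratio = disparate_impact(decisions, membership, protected="B", reference="A")
```

A ratio of 1.0 means both groups receive favorable outcomes at the same rate. A value below 0.8 is often flagged under the four-fifths rule of thumb, which originates in US employment guidance; whether that threshold is appropriate for a given insurance use case is a question for your compliance team.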

Frequently Asked Questions

Can Fiddler live in my secure environment?

Yes. Fiddler can be deployed in any secure cloud environment (VPC) or in an air-gapped environment for maximum security. We are SOC 2 Type 2 compliant and HIPAA ready, ensuring your sensitive policyholder data and PII never leave your control.

What is an AI agent in insurance?

An AI agent in insurance is an autonomous system that can reason, plan, and execute multi-step tasks without human intervention. Examples include agents that automate FNOL (First Notice of Loss) intake, independently assess damage from photos, or handle complex policy servicing inquiries.

What is a real-world example of AI in insurance?

A common example is AI-powered claims processing. Computer vision models analyze photos of vehicle damage to estimate repair costs in seconds, while predictive models flag potential fraud in real time. Generative AI is also used to power 24/7 customer service chatbots that can answer policy questions and guide users through the claims process.

Which AI monitoring tool is best for insurance?

The best tool for insurance must go beyond basic performance monitoring to address governance and compliance. Fiddler AI is ideal because it is a "Unified AI Command Center" that combines performance monitoring, fairness auditing (for NAIC and local compliance), and real-time Guardrails to secure generative AI.

How does AI improve efficiency in insurance?

AI dramatically accelerates core processes. It reduces claims settlement times from days to seconds, automates underwriting risk assessments to provide instant quotes, and handles routine customer inquiries 24/7. This frees up human adjusters and underwriters to focus on complex, high-value cases.

How can AI help with NAIC compliance and regulations?

AI Observability platforms like Fiddler automate much of the compliance process. They continuously monitor models for algorithmic bias (e.g., Disparate Impact), generate auditable local explanations for every decision to support transparency, and provide the "Governance and Compliance Proof Engine" needed to produce the documentation required by the NAIC Model AI Bulletin.