Today, we’re pleased to announce our $30M Series C, led by RPS Ventures, bringing our total funding to $100M. The significance of this milestone lies in what it enables: the continued development of a new category at the foundation of the AI stack. We’re fortunate to be partnered with long-term investors, including Insight Partners, Lightspeed Ventures, Lux Capital, Dallas Venture Capital, ISAI Cap Ventures, Dentsu Ventures, Mozilla Ventures, BGV, LDV Partners, E12 Ventures, and LG Ventures, who share our belief that enduring infrastructure is built patiently, over years, not quarters.

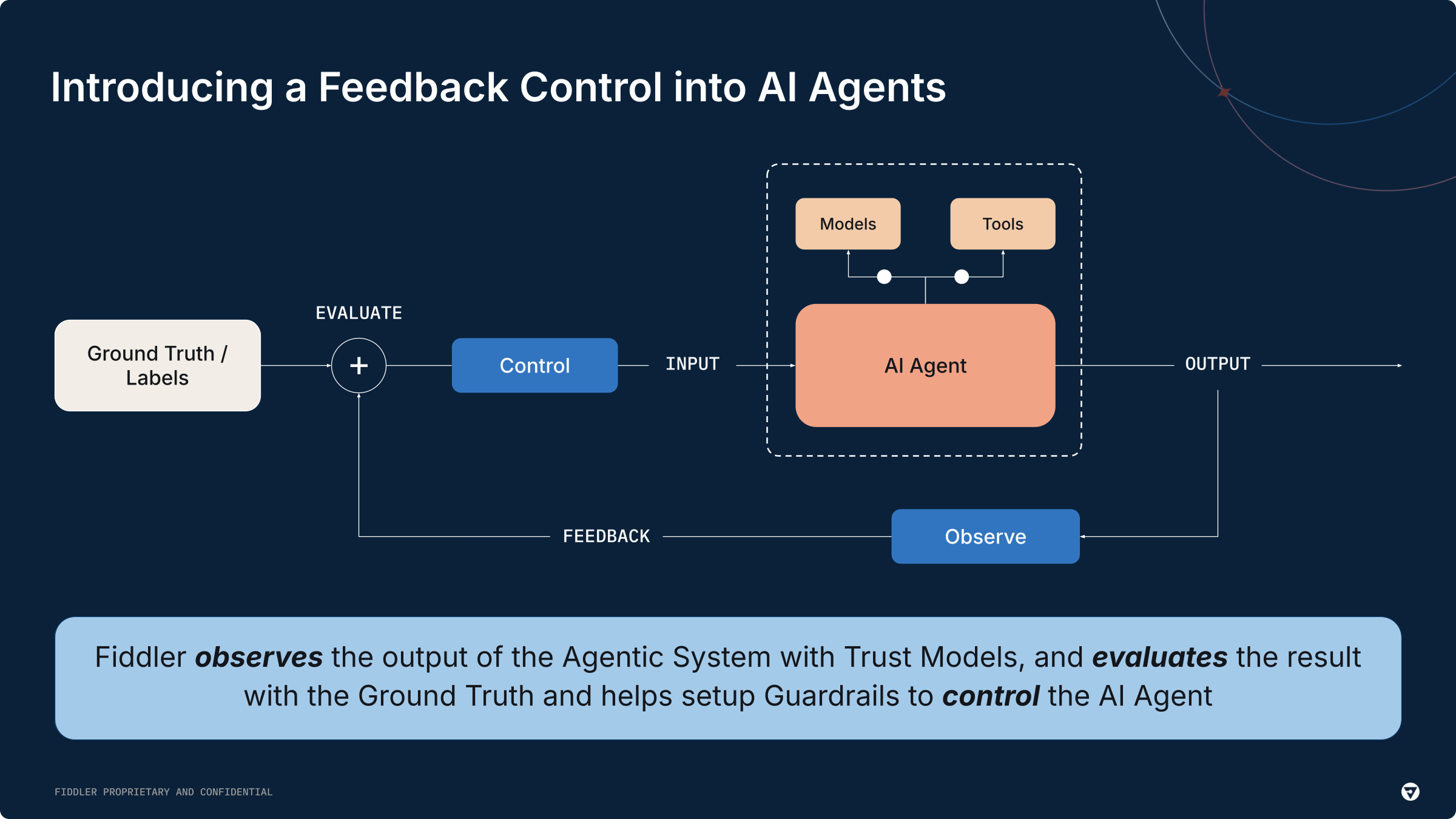

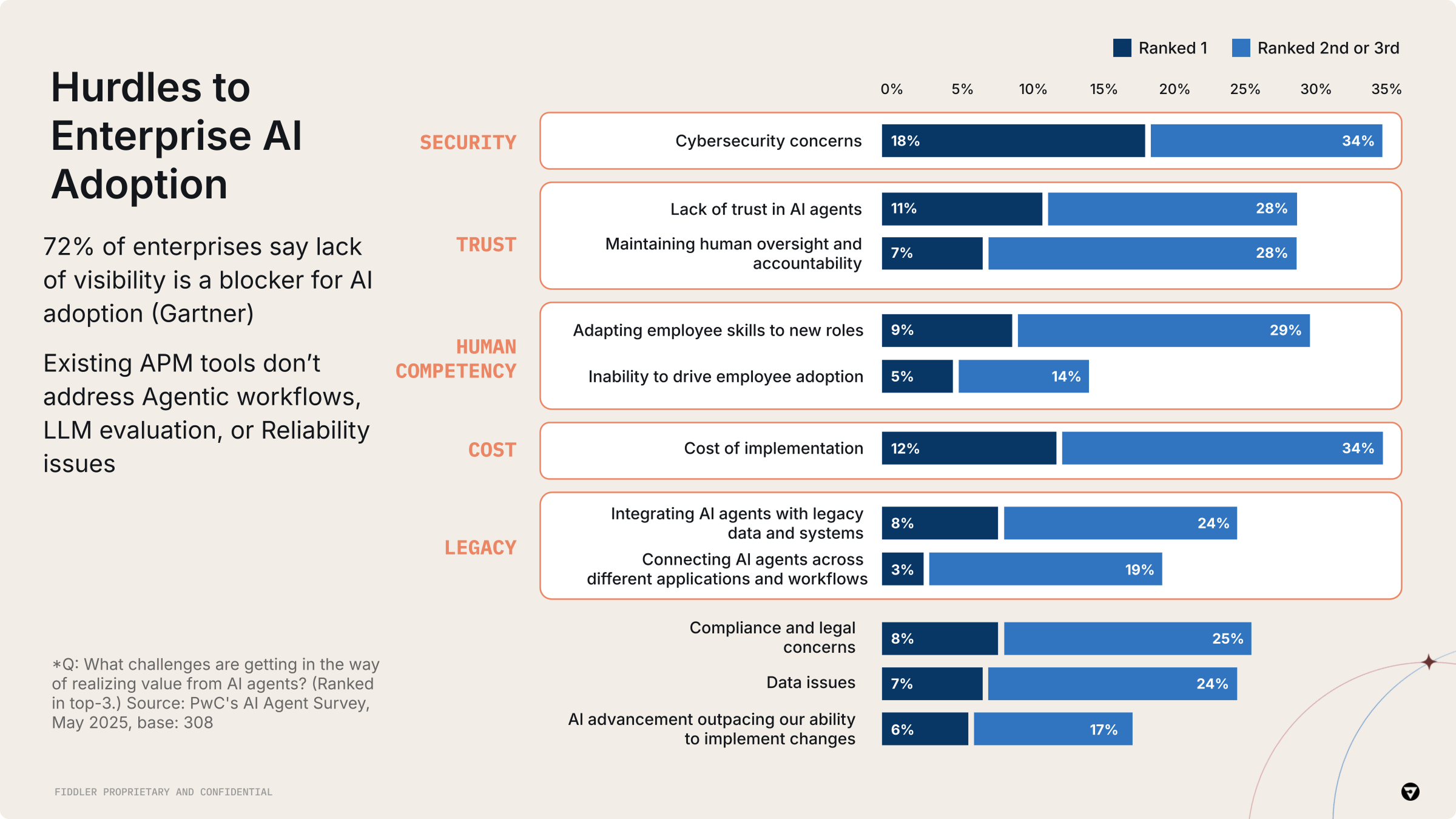

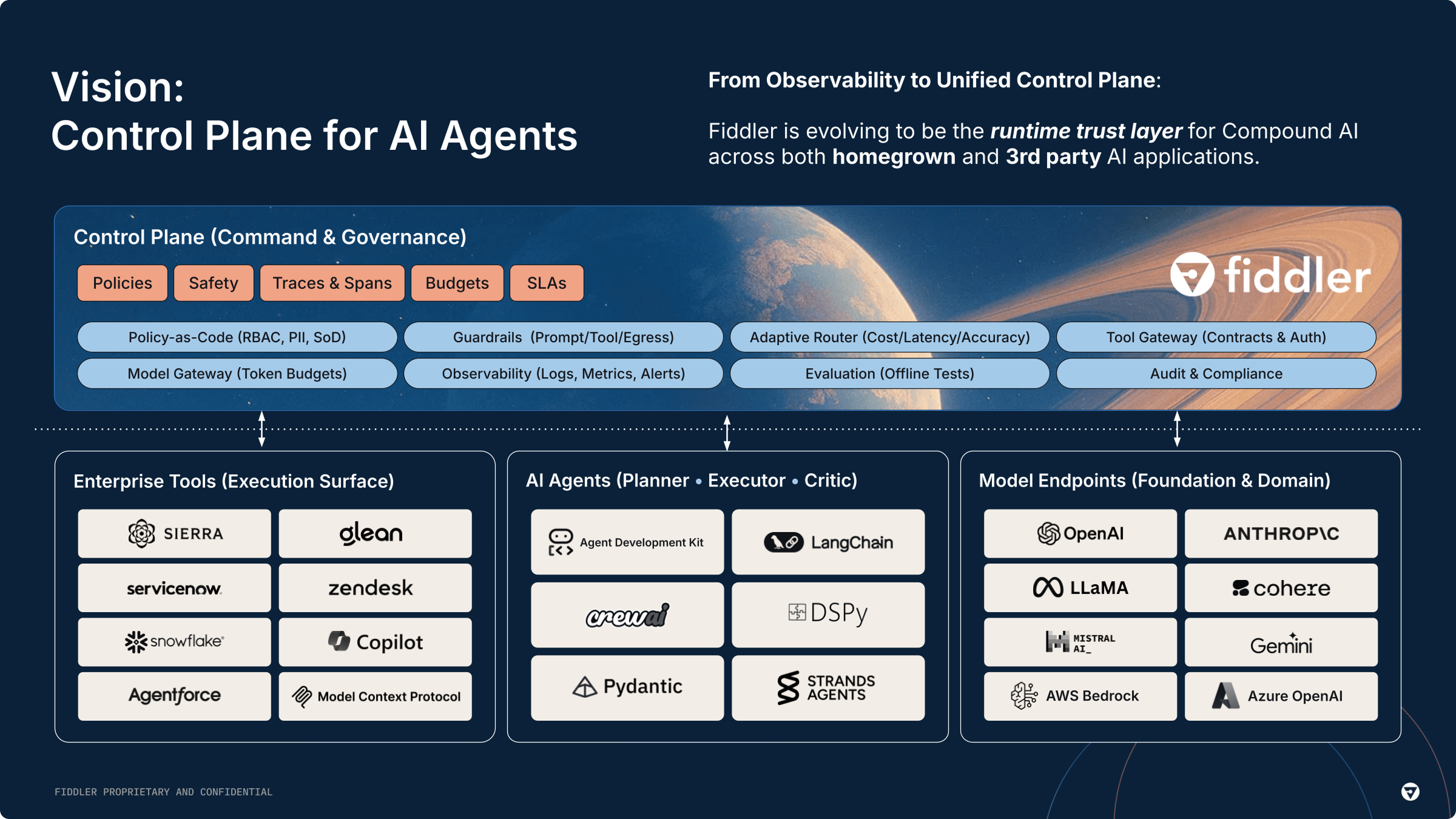

For years, we’ve been working toward a singular thesis: as AI systems move from experimentation to production and from copilots to autonomous agents, trust becomes the bottleneck. Making AI systems operable, governable, and trustworthy at scale is no longer optional; it is the prerequisite for adoption. This journey has led us to an inevitable conclusion: The Control Plane for AI.

Our Journey

Fiddler started with a very specific pain that both Amit and I experienced firsthand: machine learning models were making decisions that mattered, and teams could not explain those decisions. So we built the industry's first enterprise-grade AI explainability solution for understanding model behavior. Today it is deployed at scale at some of the largest institutions on the planet, including the US Navy.

Explainability helped teams understand individual model decisions. But in production, model behavior changes over time — inputs drift, data pipelines evolve, models get retrained. A system that was explainable on Monday can behave differently by Friday, often without a clear reason.

That led us to build the industry's first data-drift observability solution, tracking ML systems [1] the way engineers track services (monitoring health, change, and anomalies over time) and, in doing so, defining a new category: AI Observability.

As Fortune 500 companies began deploying AI agents, observation stopped being enough. These systems adapt and learn through feedback and reflection. Customers needed confidence not just in what happened, but in what would not happen again.

That’s when it became clear: visibility is not the same thing as control.

For a long time, “productionizing AI” followed a familiar ritual. Pick a dataset. Train a model. Run offline evaluation. Deploy behind an endpoint. Monitor latency and drift. Retrain. Repeat. Many companies extracted real value this way, largely because the architecture was still simple enough to understand with a handful of metrics and a good on-call rotation.

The new generation of AI systems does not fit that mental model.

We have quietly crossed a line from a model to a system. And systems don’t become reliable because they are clever. They become reliable because they are governed. When governance becomes necessary, a Control Plane always emerges.

Agents are Distributed Systems Wearing a Prompt

The first hard truth is that agents are distributed systems wearing a prompt. There’s a tendency to describe agentic AI as “LLMs, but doing multiple steps.” That’s a comforting simplification, because it suggests that all we need is better prompting and a few more evaluations. But if you look at how agents actually work, the architecture is more complex than that.

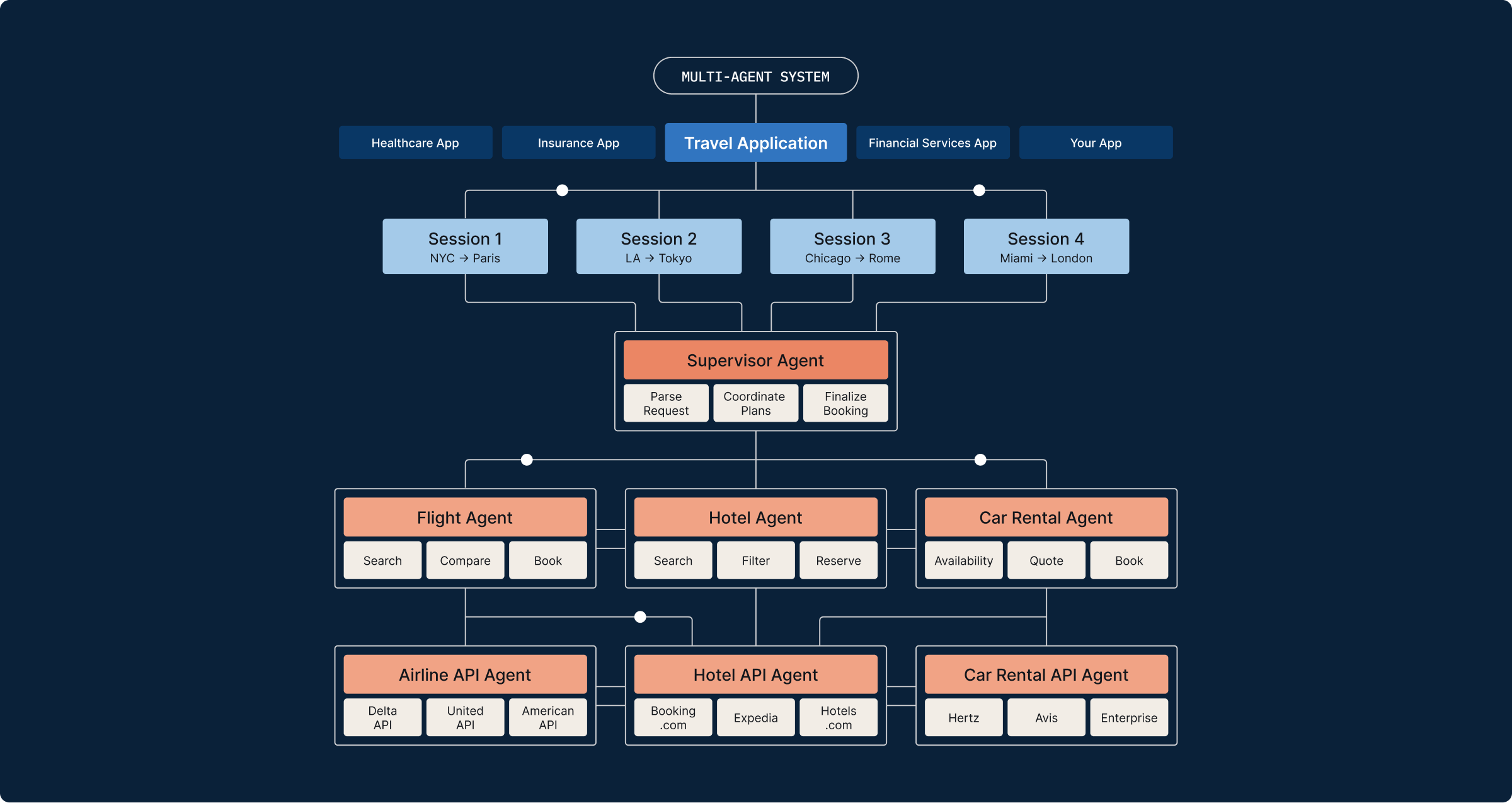

Below is an example of a Travel Application with a complex Agent Hierarchy.

An agent is not a single inference; it’s a loop. A policy-driven loop that decides what to do next based on partial observations, internal state, and tool outputs. Tool use, retrieval, planning, retries, routing, reflection, and multi-agent collaboration are all now default building blocks of this agent loop.
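To make “a loop, not a single inference” concrete, here is a minimal Python sketch. It is illustrative only. `choose_action` is a hypothetical stand-in for the LLM policy; a real agent would prompt a model with the goal and the observations so far. The two tools are toy lambdas loosely based on the travel example. What matters is the shape: decide, act, observe, update state, and repeat until the policy says it is done or a step limit is reached.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: str
    observations: list[str] = field(default_factory=list)  # tool outputs seen so far


# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": lambda q: f"3 flights found for {q}",
    "book_flight": lambda q: f"booked {q}",
}


def choose_action(state: AgentState) -> tuple[str, str]:
    """Stand-in for the LLM policy: picks the next (action, argument) from partial observations."""
    if not state.observations:
        return "search_flights", state.goal
    if len(state.observations) == 1:
        return "book_flight", state.goal
    return "finish", state.observations[-1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    state = AgentState(goal)
    for _ in range(max_steps):  # bounded loop: each iteration is one decision
        action, arg = choose_action(state)
        if action == "finish":
            return arg
        output = TOOLS[action](arg)  # act
        state.observations.append(output)  # observe and update state
    return "stopped: step limit reached"


print(run_agent("SFO->JFK"))  # booked SFO->JFK
```

The `max_steps` bound is a deliberate design choice. Without it, a policy that never emits `finish` would loop forever.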

What we call an “AI application” is now a workflow engine whose decisions are partially stochastic and partially policy-constrained.

If this sounds like a distributed system, it’s because it is. A single user request can fan out into retrieval calls, tool calls, model calls across providers, and state updates that persist across sessions. Every one of those steps can fail, time out, return stale data, or return a subtly wrong answer that still looks plausible.

And in agentic systems, plausibility is not safety. Plausibility is a failure mode.

The moment you introduce tool use and memory, you inherit the classic distributed systems problems: partial failure, retries, consistency, time, and state. The only difference is that the control flow is now a probabilistic policy expressed in natural language.
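Partial failure is one of those inherited problems. In agents, as in conventional services, it is usually handled with bounded retries and backoff. The sketch below assumes a hypothetical `ToolError` exception for transient tool failures. The names and delays are illustrative and are not taken from any particular agent framework.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ToolError(Exception):
    """Hypothetical error raised when a tool call fails transiently (timeout, 5xx, etc.)."""


def call_with_retries(fn: Callable[[], T], max_attempts: int = 3, base_delay: float = 0.1) -> T:
    """Retry a flaky tool call with exponential backoff; re-raise after max_attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except ToolError:
            if attempt == max_attempts:
                raise  # surface the failure instead of retrying forever
            time.sleep(base_delay * 2 ** (attempt - 1))  # waits base_delay, 2x, 4x, ...
    raise AssertionError("unreachable")  # the loop always returns or raises


# Usage: a fare lookup that times out twice, then succeeds.
attempts = {"n": 0}


def flaky_lookup() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ToolError("timeout")
    return "fare: $320"


print(call_with_retries(flaky_lookup, base_delay=0.0))  # fare: $320
```

Retries are also where the distributed-systems problems compound. Retrying a read such as a fare lookup is harmless. Retrying a non-idempotent action, such as a hypothetical `book_flight` call, can double-book unless the tool deduplicates requests (for example, with an idempotency key).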

This is no longer “just a model”; it is an end-to-end system.

Observability Gives You Eyes, Not Authority

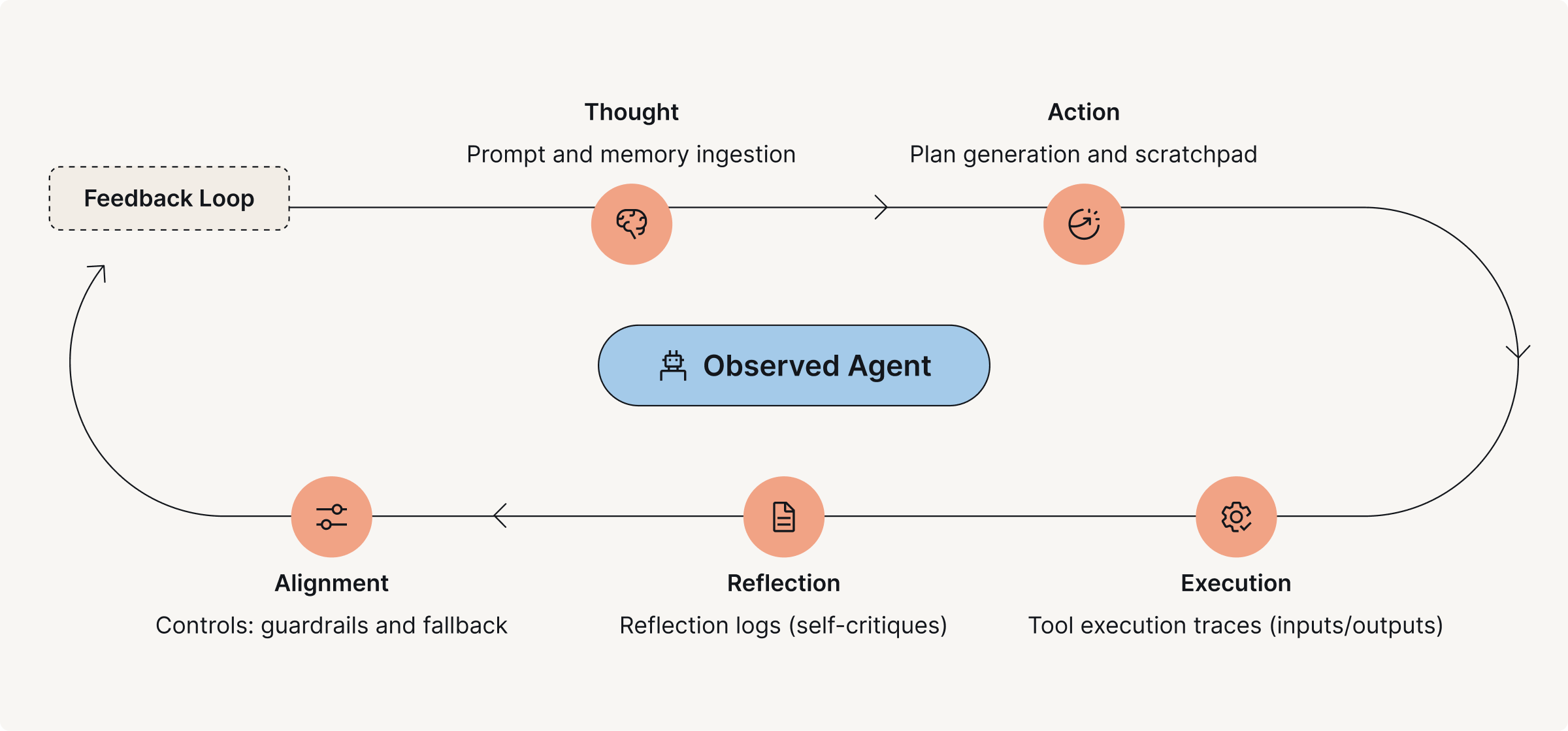

The second hard truth is that observability is necessary, but it is not control. When systems get complex, the first instinct is always instrumentation. Distributed tracing became foundational because it gives you a causal graph, a way to understand how an outcome came to be.

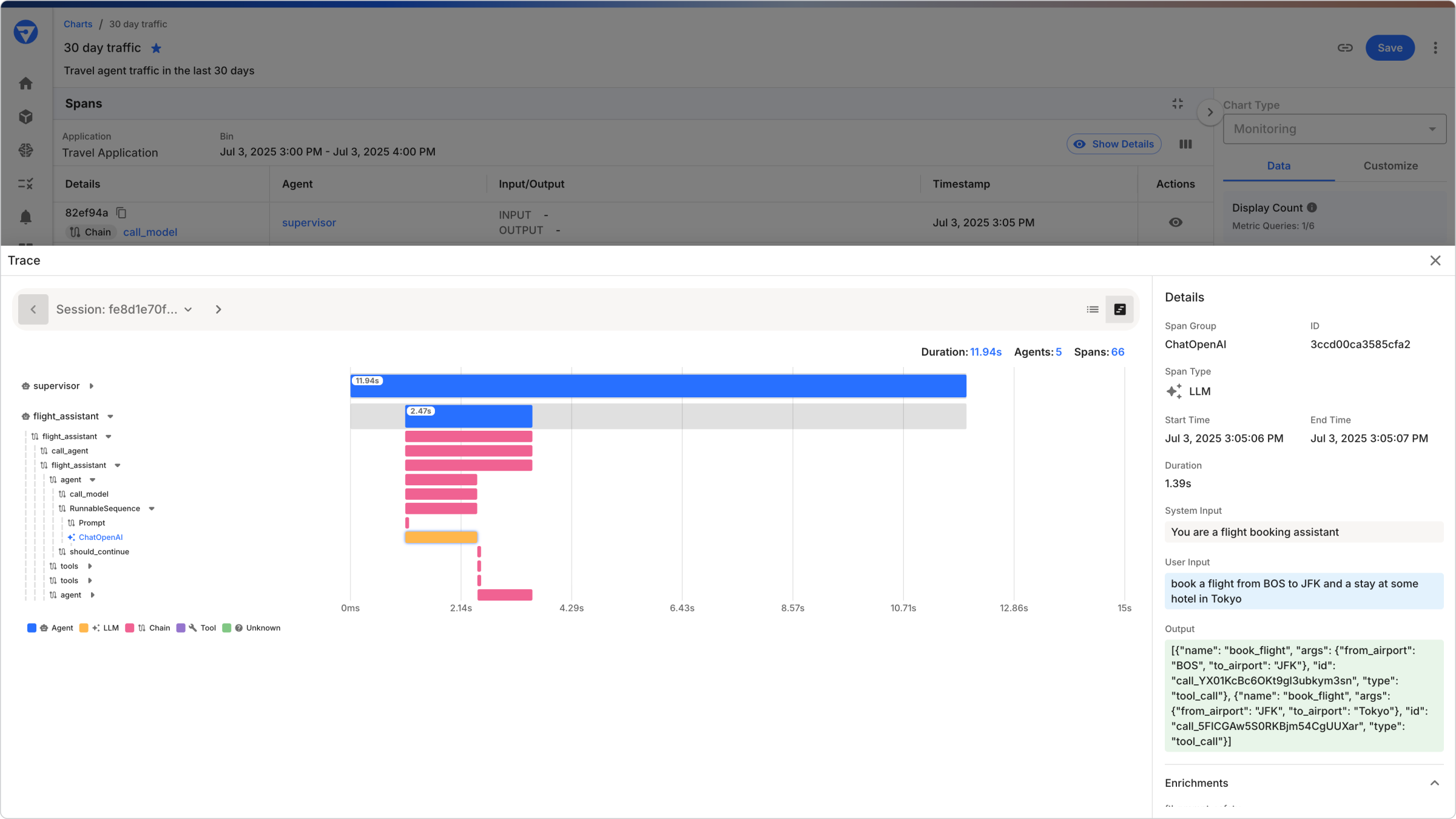

As agentic workflows emerged, it became obvious that tool calls and model calls naturally form trees of spans. The industry is converging on standardized telemetry for prompts, responses, tool usage, model identity, and cost for exactly this reason.
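A trace of an agent run forms exactly such a tree. The agent invocation is the root span, and each model or tool call is a child span carrying attributes. The toy structure below is not the OpenTelemetry SDK; it only illustrates the shape. The attribute keys (`gen_ai.operation.name`, `gen_ai.request.model`, `gen_ai.tool.name`, `gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`) are modeled on the OpenTelemetry GenAI semantic conventions [3]. Those conventions are still evolving, so check the current specification before relying on exact names. The model name is a placeholder.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    attributes: dict[str, object] = field(default_factory=dict)
    children: list[Span] = field(default_factory=list)

    def child(self, name: str, attributes: dict[str, object] | None = None) -> Span:
        """Create a child span (e.g. a model or tool call) under this span."""
        span = Span(name, dict(attributes or {}))
        self.children.append(span)
        return span


def total_tokens(span: Span) -> int:
    """Sum input + output token usage over the whole span tree."""
    own = int(span.attributes.get("gen_ai.usage.input_tokens", 0)) + int(
        span.attributes.get("gen_ai.usage.output_tokens", 0)
    )
    return own + sum(total_tokens(c) for c in span.children)


root = Span("invoke_agent travel_planner", {"gen_ai.operation.name": "invoke_agent"})
root.child("chat example-model", {
    "gen_ai.operation.name": "chat",
    "gen_ai.request.model": "example-model",
    "gen_ai.usage.input_tokens": 1200,
    "gen_ai.usage.output_tokens": 150,
})
root.child("execute_tool search_flights", {
    "gen_ai.operation.name": "execute_tool",
    "gen_ai.tool.name": "search_flights",
})
root.child("chat example-model", {
    "gen_ai.operation.name": "chat",
    "gen_ai.request.model": "example-model",
    "gen_ai.usage.input_tokens": 800,
    "gen_ai.usage.output_tokens": 90,
})
print(total_tokens(root))  # 2240
```

Because the structure is a tree rather than a flat log, a cost spike or a bad answer can be attributed to the specific step that produced it.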

But tracing only tells you what happened. It does not tell you what should be allowed to happen.

You can have pristine traces and still ship a system that violates policy, leaks sensitive data, produces decisions no one can justify, or burns GPU budget without bounds.

In mature platforms, we never confuse observability with control.

Observability is your senses. Control is your nervous system.

Without Control, You Incur The Trust Tax

Organizations start building AI, and once demos reach production, it takes only one incident (an overconfident response, a hallucinated rationale, a misfired tool call, an unintended data exposure, or a prompt-injection attack) for them to lose reputational, regulatory, or customer trust.

This leads to the Trust Tax: teams scramble to add manual reviews, human-in-the-loop approvals, and ad hoc evaluations before every release. Risk controls appear piecemeal across logging pipelines, policy checks, incident trackers, offline eval jobs, and ad hoc escalation paths. From here, the outcome is predictable. Organizations start questioning the ROI of AI and struggle to cross the chasm described in the MIT report, which found that 95% of GenAI pilots fail [2].

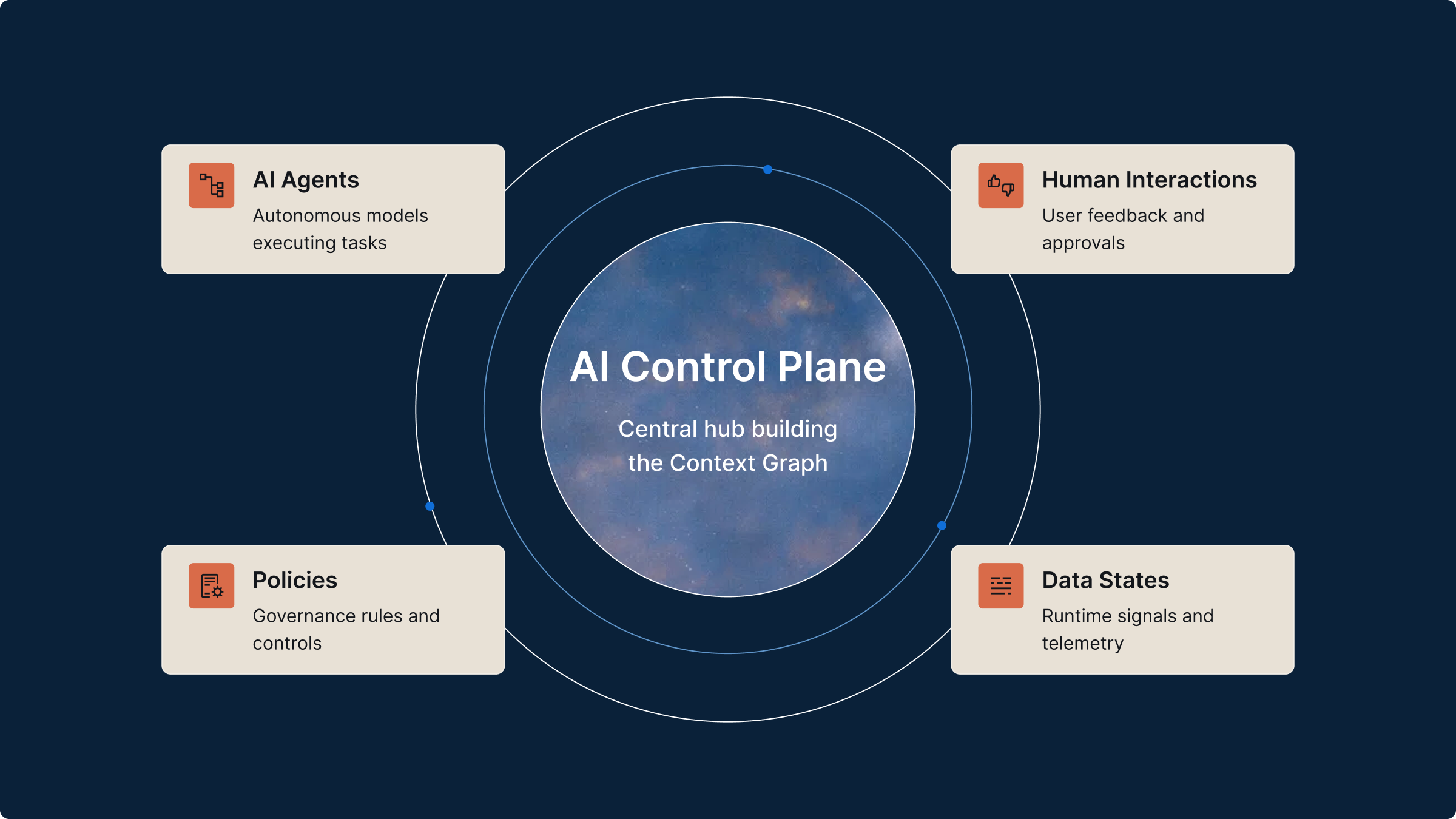

What an AI Control Plane Actually Controls

At scale, agentic systems require four forms of control, each addressing a distinct failure mode.

- Causal Control: You need to reconstruct what happened as a chain of decisions and calls, not a blob of text. This is why the industry is converging on traces and shared semantic conventions for GenAI: a common vocabulary [3] for describing agent behavior across frameworks and vendors.

- Policy Control: Control must execute in the loop, not after the fact. Post-hoc policies produce reports; runtime policies shape behavior, blocking unsafe tool calls, redacting sensitive outputs, enforcing verification, or routing high-risk actions through additional checks.

- Measurement Control: Evaluation can’t collapse into vibes. While LLM-as-a-judge scales, its limits are well documented [4]: bias, inconsistency, and prompt sensitivity. A true control plane treats evaluation as a system that is calibrated, monitored for drift, and grounded in feedback loops, not a single “does this look good?” call.

- Economic Control: Agents are financially unbounded by default. Unlike a single model call, an agent can trigger cascading retries, tool calls, and calls to secondary models. If cost isn’t governed, it will govern you.
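Policy Control and Economic Control both come down to a check that runs inside the loop, before each action. Below is a minimal sketch of such a runtime guard. The tool lists, the dollar budget, and the email-only redaction rule are hypothetical placeholders. A real deployment would use proper PII detection and load its policies from configuration rather than hard-coded sets.

```python
import re
from dataclasses import dataclass

# Hypothetical policy: tools that may run freely, tools that need approval, and a PII pattern.
ALLOWED_TOOLS = {"search_flights", "get_weather"}
HIGH_RISK_TOOLS = {"book_flight", "issue_refund"}
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Decision:
    allowed: bool
    reason: str


class Guard:
    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.spent_usd = 0.0

    def check_tool_call(self, tool: str, est_cost_usd: float, approved: bool = False) -> Decision:
        if tool not in ALLOWED_TOOLS | HIGH_RISK_TOOLS:
            return Decision(False, f"tool {tool!r} not permitted")  # block unknown tools outright
        if tool in HIGH_RISK_TOOLS and not approved:
            return Decision(False, "needs human approval")  # route high-risk actions to a check
        if self.spent_usd + est_cost_usd > self.budget_usd:
            return Decision(False, "budget exceeded")  # economic control: hard spend ceiling
        self.spent_usd += est_cost_usd  # only allowed calls are charged to the budget
        return Decision(True, "ok")

    @staticmethod
    def redact(text: str) -> str:
        """Mask email addresses before output leaves the system (simplistic PII stand-in)."""
        return EMAIL.sub("[REDACTED]", text)


guard = Guard(budget_usd=1.00)
print(guard.check_tool_call("search_flights", 0.40).reason)  # ok
print(guard.check_tool_call("book_flight", 0.10).reason)  # needs human approval
print(guard.check_tool_call("search_flights", 0.70).reason)  # budget exceeded
print(Guard.redact("Receipt sent to jane.doe@example.com"))  # Receipt sent to [REDACTED]
```

The guard returns a decision rather than raising an exception. That way the agent loop, or a human reviewer, can act on the reason (ask for approval, stop, or re-plan) instead of simply crashing.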

In practice, a control plane means standardized telemetry, reliable evaluation, continuous monitoring, enforceable policy, and auditable governance, not as separate tools, but as a single, coherent layer.
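Measurement Control asks whether an LLM judge is calibrated. One common way to check is to score the judge against a human-labeled sample using a chance-corrected statistic such as Cohen's kappa. This metric is our illustrative choice, not one the post prescribes, and the labels below are made up for the example.

```python
from collections import Counter


def cohens_kappa(judge: list, human: list) -> float:
    """Chance-corrected agreement between an LLM judge and human labels on the same items."""
    if len(judge) != len(human) or not judge:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(judge)
    observed = sum(j == h for j, h in zip(judge, human)) / n
    jc, hc = Counter(judge), Counter(human)
    # Agreement expected if both raters labeled at random with their own label frequencies.
    expected = sum(jc[label] * hc[label] for label in jc.keys() | hc.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label everywhere; kappa is undefined, treat as perfect
    return (observed - expected) / (1 - expected)


human = ["pass", "pass", "fail", "fail", "pass", "fail", "pass", "pass"]
judge = ["pass", "pass", "pass", "fail", "pass", "fail", "pass", "fail"]
agreement = sum(j == h for j, h in zip(judge, human)) / len(human)
print(f"agreement={agreement:.2f} kappa={cohens_kappa(judge, human):.2f}")  # agreement=0.75 kappa=0.47
```

Here the judge matches the human labels 75% of the time, yet kappa is only about 0.47. Much of the raw agreement is expected by chance, because both raters label most items "pass". Tracking this number on fresh human samples over time is one way to notice a judge drifting.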

AI Control Plane: The Regulation Gold Standard

There’s a reason why Control Planes resonate in regulated industries. Regulators need your AI system to be accountable, not your model to be brilliant. Compliance frameworks emphasize this consistently:

- NIST’s AI Risk Management Framework [5] is explicitly lifecycle-oriented, organized around four core functions: GOVERN, MAP, MEASURE, MANAGE. In plain terms, this is control-plane thinking: define policy, map systems and risks, measure continuously, and manage mitigations over time.

- ISO/IEC 42001 [6] follows the same direction, treating AI governance as an ongoing management system rather than a one-time audit.

- The EU AI Act [7], particularly for high-risk systems, reinforces this posture through requirements for record-keeping, logging, traceability, and post-market monitoring. Regardless of evolving timelines, the direction of compliance pressure is clear: continuous oversight, not point-in-time documentation.

This is precisely what a Control Plane provides: a single source of truth for behavior, policy, and evidence.

The Shift that Makes it Inevitable

Once you treat an agent as a distributed system, a Control Plane becomes an operational requirement.

Teams can delay this with point solutions: prompt libraries, eval harnesses, tool gateways, security filters, dashboards, and incident trackers. Each addresses a symptom, but none provides system-level control.

At scale, every mature platform converges on the same needs:

- A single system of record

- A shared vocabulary for behavior

- Enforceable policy at runtime

- And verifiable evidence of what occurred
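In software, “verifiable evidence” has a concrete meaning: a record that reveals whether it has been altered. One common technique is a hash chain, in which each entry stores the hash of the previous entry. The sketch below uses only the Python standard library, and the event fields are hypothetical.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True)  # canonical serialization of the event
    return hashlib.sha256((prev_hash + body).encode()).hexdigest()


def append_record(log: list[dict], event: dict) -> dict:
    """Append an event, chaining it to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"seq": len(log), "event": event, "prev_hash": prev_hash,
              "hash": _digest(prev_hash, event)}
    log.append(record)
    return record


def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing or reordering any past record breaks the chain."""
    prev_hash = GENESIS
    for i, record in enumerate(log):
        if (record["seq"] != i or record["prev_hash"] != prev_hash
                or record["hash"] != _digest(prev_hash, record["event"])):
            return False
        prev_hash = record["hash"]
    return True


audit: list[dict] = []
append_record(audit, {"type": "tool_call", "tool": "search_flights"})
append_record(audit, {"type": "policy", "rule": "refund_limit", "allowed": False})
print(verify(audit))  # True
audit[0]["event"]["tool"] = "issue_refund"  # tamper with history
print(verify(audit))  # False
```

A hash chain is tamper-evident, not tamper-proof. Anyone able to rewrite the whole log can recompute every hash, so one common mitigation is to store or publish the latest hash somewhere outside the writer's control.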

The mistake is assuming control comes after scale. In practice, organizations either build it intentionally or absorb the Trust Tax (fragmented tooling, manual processes, escalating operational risk), and in the worst case they fail to deliver AI products at all.

This pattern is well established in traditional deterministic software. Logs [8] became universal once software systems needed an ordered, durable record of state changes. Kubernetes [9] and observability control planes [10] emerged once environments became too complex to manage manually. AI will follow the same path. Enterprises are deploying fleets of probabilistic systems that act autonomously. Scaling them safely requires continuous governance, with policy, behavior, and evidence managed through a control plane.

Over time, an AI Control Plane does more than govern behavior; it creates a system of record. By sitting in the execution path, it captures the decision traces of AI systems: what context was gathered, which policies applied, where exceptions were granted, and why a particular action was allowed. Persisted over time, these traces naturally form a Context Graph [11]: a living, queryable record of how decisions were made across systems and workflows. This is what many are now pointing to as AI’s next trillion-dollar opportunity.
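Here is a minimal sketch of what “decision traces forming a graph” could look like. Each trace records the context gathered, the policies evaluated, any exception granted, and the rationale. An index then links each of those nodes back to the decisions that touched it, so a question like “which refunds relied on a manager override?” becomes a query. `DecisionTrace`, `ContextGraph`, and the `kind:id` node naming are our own hypothetical illustration; neither this post nor [11] defines such an API.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class DecisionTrace:
    decision_id: str
    action: str
    context: list[str]  # what context was gathered (document/record IDs)
    policies: list[str]  # which policies were evaluated
    exception: str | None = None  # exception granted, if any
    rationale: str = ""  # why the action was allowed


class ContextGraph:
    """Toy in-memory graph linking decisions to the context, policies, and exceptions they touched."""

    def __init__(self) -> None:
        self.traces: dict[str, DecisionTrace] = {}
        self.edges: dict[str, set[str]] = defaultdict(set)  # node -> decision IDs

    def add(self, t: DecisionTrace) -> None:
        self.traces[t.decision_id] = t
        for node in [*t.context, *t.policies] + ([t.exception] if t.exception else []):
            self.edges[node].add(t.decision_id)

    def decisions_touching(self, node: str) -> list[DecisionTrace]:
        return [self.traces[d] for d in sorted(self.edges.get(node, set()))]


graph = ContextGraph()
graph.add(DecisionTrace(decision_id="d1", action="issue_refund",
                        context=["ticket:4812", "crm:cust-77"], policies=["policy:refund_limit"],
                        exception="exception:manager-override", rationale="above limit, manager approved"))
graph.add(DecisionTrace(decision_id="d2", action="issue_refund",
                        context=["ticket:4813"], policies=["policy:refund_limit"], rationale="within limit"))
print([t.decision_id for t in graph.decisions_touching("exception:manager-override")])  # ['d1']
```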

To conclude, AI isn’t replacing software. It’s introducing a new kind of software that changes at runtime. And that new agentic software requires control by design.

If your AI systems are NOT moving beyond demos, we can unblock them, help you ship them, and make them governable in production. Request a demo.

And if you’re excited by the challenge of building foundational AI infrastructure, we’re hiring!

References

[1] Stop flying blind and monitor your AI: https://www.fiddler.ai/blog/explainable-monitoring-stop-flying-blind-and-monitor-your-ai

[2] MIT Finds 95% Of GenAI Pilots Fail Because Companies Avoid Friction: https://www.forbes.com/sites/jasonsnyder/2025/08/26/mit-finds-95-of-genai-pilots-fail-because-companies-avoid-friction/

[3] OpenTelemetry for Generative AI: https://opentelemetry.io/blog/2024/otel-generative-ai/

[4] A Survey on LLM-as-a-Judge: https://arxiv.org/abs/2411.15594

[5] Artificial Intelligence Risk Management Framework: https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

[6] ISO/IEC 42001: https://www.iso.org/standard/42001

[7] EU AI Act, Article 12 (Record-keeping): https://ai-act-service-desk.ec.europa.eu/en/ai-act/article-12

[8] The Log: What every software engineer should know about real-time data's unifying abstraction: https://engineering.linkedin.com/distributed-systems/log-what-every-software-engineer-should-know-about-real-time-datas-unifying

[9] Kubernetes Cluster Architecture: https://kubernetes.io/docs/concepts/architecture/

[10] Observability Control Plane: https://chronosphere.io/platform/control-plane/

[11] Context Graph: https://foundationcapital.com/context-graphs-ais-trillion-dollar-opportunity/