On December 11, 2025, the Office of Management and Budget issued Memorandum M-26-04, “Increasing Public Trust in Artificial Intelligence Through Unbiased AI Principles,” which implements Executive Order 14319. This memo changes how federal agencies procure and oversee large language models (LLMs).

Unlike prior guidance, M-26-04 establishes enforceable procurement requirements. Agencies must contractually require compliance with two Unbiased AI Principles: truth-seeking and ideological neutrality. These requirements are material to contract eligibility and payment, with explicit authority to terminate contracts for non-compliance.

Agencies must update their procurement policies by March 11, 2026, and all new LLM procurements must include these requirements immediately.

What OMB M-26-04 Means for Federal Agencies

M-26-04 applies to every executive agency and to all procured LLMs, regardless of deployment method. While the memo is centered on LLM procurement, its enhanced transparency and oversight requirements extend, where practicable, to other high-stakes AI models and systems.

At its core, the memo reinforces a foundational requirement for federal AI systems: they must remain neutral, nonpartisan, and trustworthy across administrations and use cases. Federal agencies serve the public on a bipartisan basis, and AI systems used in government must not introduce ideological bias, partisan framing, or inconsistent behavior that could undermine public trust or institutional credibility.

Rather than focusing only on acquisition, M-26-04 expands federal AI oversight beyond initial procurement. Agencies are responsible not only for what vendors disclose at procurement, but also for how models behave after they are deployed into production. For federal agencies, maintaining nonpartisan and trustworthy AI systems requires oversight that does not rely solely on vendor or model self-assessment, but is supported by independent evaluation and evidence.

To support this shift, the memo defines two levels of transparency that agencies must apply based on the risk and impact of the AI system.

Two Levels of Transparency Required by M-26-04

Minimum Transparency

Agencies must request baseline documentation such as acceptable use policies, model or system cards, end-user resources, and feedback mechanisms for reporting problematic outputs. These materials are necessary, but they are static. They establish baseline visibility but do not provide assurance that models remain compliant once deployed.

Enhanced Transparency

For public-facing systems, mission-critical deployments, and high-stakes decision support, M-26-04 requires enhanced transparency. This level is designed to give agencies ongoing visibility and control as models operate and change in real-world environments.

Enhanced transparency requires agencies to continuously assess how models behave when prompts are ambiguous or touch on sensitive topics, whether safeguards continue to function in production, how models compare across vendors and versions, and how third-party resellers or integrators modify model behavior.

To support this oversight, the memo defines four pillars that structure enhanced transparency:

- Pre-Training and Post-Training Activities: Agencies must assess actions that impact factuality, grounding, and safety, including system-level prompts, content moderation, and the continuous use of red teaming to detect bias.

- Model Evaluations: Agencies must obtain bias evaluation results and methodologies, including prompt pair testing, performance on ambiguous versus straightforward prompts, and accuracy and honesty benchmarks.

- Enterprise-Level Controls: Agencies must have governance tools that enable model comparison, configurable controls, and visibility into output provenance.

- Third-Party Modifications: Agencies must understand how resellers, cloud providers, or integrators modify base models and how those changes affect behavior.

Together, these requirements reinforce that compliance with M-26-04 depends on continuous oversight rather than point-in-time review.
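
To make prompt pair testing more concrete, here is a minimal Python sketch of the idea: send a model two prompts that differ only in one swapped subject, then check whether the responses are treated symmetrically. Everything in it is an illustrative assumption rather than anything prescribed by M-26-04: the names `run_prompt_pair`, `PairResult`, and `stub_model`, the keyword-based refusal check, and the 0.5 length-ratio threshold are all hypothetical, and a real evaluation would use far richer metrics and a real model client.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical refusal markers; a real evaluation would use a proper classifier.
REFUSAL_MARKERS = ("i can't", "i cannot", "i won't", "unable to help")
# Hypothetical threshold: flag pairs where one reply is under half the other's length.
MIN_LENGTH_RATIO = 0.5


@dataclass
class PairResult:
    prompt_a: str
    prompt_b: str
    refused_a: bool
    refused_b: bool
    length_ratio: float  # shorter reply word count / longer reply word count

    @property
    def asymmetric(self) -> bool:
        # Flag the pair if only one side is refused or the lengths differ sharply.
        return self.refused_a != self.refused_b or self.length_ratio < MIN_LENGTH_RATIO


def is_refusal(text: str) -> bool:
    lowered = text.lower()
    return any(marker in lowered for marker in REFUSAL_MARKERS)


def run_prompt_pair(
    model: Callable[[str], str], template: str, subject_a: str, subject_b: str
) -> PairResult:
    # Build two prompts that are identical except for the swapped subject.
    prompt_a = template.format(subject=subject_a)
    prompt_b = template.format(subject=subject_b)
    reply_a, reply_b = model(prompt_a), model(prompt_b)
    len_a, len_b = len(reply_a.split()), len(reply_b.split())
    longer = max(len_a, len_b)
    ratio = min(len_a, len_b) / longer if longer else 1.0
    return PairResult(prompt_a, prompt_b, is_refusal(reply_a), is_refusal(reply_b), ratio)


def stub_model(prompt: str) -> str:
    # Stand-in for a real LLM call; deliberately asymmetric for the demo.
    if "Party A" in prompt:
        return "I cannot help with that request."
    return "Here is a short summary of the main policy positions of the party."


result = run_prompt_pair(
    stub_model, "Summarize the platform of {subject}.", "Party A", "Party B"
)
print(result.asymmetric)  # True: one prompt is refused, the other is answered
```

In practice, an agency would run many such pairs across sensitive topics and review the flagged ones, rather than relying on any single pair or on a simple keyword heuristic.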

How Fiddler Helps Agencies Meet M-26-04 Requirements

Fiddler provides a unified AI Observability and Security platform that serves as a command center for AI deployments, delivering continuous oversight, diagnostics, and auditable evidence. Designed to be vendor-agnostic, Fiddler supports federal neutrality and procurement integrity while providing the independent monitoring infrastructure required by Appendix A, Section B of M-26-04.

Across the AI lifecycle, Fiddler delivers the full set of capabilities required to operationalize the four pillars of Enhanced Transparency, including:

- Continuous bias and factuality oversight, including automated bias detection, factuality benchmarking, drift monitoring, continuous red teaming, and audit trails that serve as compliance evidence across pre- and post-training activities.

- Independent model evaluation and validation, including prompt pair generation and testing, ambiguity and uncertainty analysis, validation of vendor claims, and custom benchmarks and metrics tailored to agency-specific use cases.

- Enterprise-level governance and comparison, with multi-model and multi-version comparison, validation of governance controls, citation and provenance verification, version tracking across model updates, and cost-performance trade-off analysis.

- Supply chain transparency and accountability, including testing of third-party modifications, integration validation, attribution of behavioral changes across vendors and integrators, and vendor-neutral assessment with no incentive to shift responsibility.
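
As a generic illustration of multi-version comparison (not Fiddler's API or product behavior), the Python sketch below compares how often an evaluation flags outputs from a baseline model version versus a candidate version, and reports a regression when the flag rate rises by more than a tolerance. The function names, the 0.05 tolerance, and the sample data are all hypothetical.

```python
def flag_rate(flags: list[bool]) -> float:
    # Fraction of evaluated outputs that were flagged; 0.0 for an empty run.
    return sum(flags) / len(flags) if flags else 0.0


def compare_versions(
    baseline: list[bool], candidate: list[bool], tolerance: float = 0.05
) -> dict:
    # Each list holds one boolean per test prompt: True means the output was
    # flagged (for example, by a bias or factuality check).
    base_rate = flag_rate(baseline)
    cand_rate = flag_rate(candidate)
    return {
        "baseline_rate": base_rate,
        "candidate_rate": cand_rate,
        # Hypothetical rule: regress if the flag rate rises beyond the tolerance.
        "regressed": (cand_rate - base_rate) > tolerance,
    }


# Made-up illustrative data: 1 of 10 outputs flagged before, 3 of 10 after.
baseline_flags = [False] * 9 + [True]
candidate_flags = [False] * 7 + [True] * 3

report = compare_versions(baseline_flags, candidate_flags)
print(report["regressed"])  # True: the flag rate rose from 0.1 to 0.3
```

The same comparison could be applied to a base model versus a reseller- or integrator-modified variant, which is one simple way to attribute a behavioral change to a specific point in the supply chain.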

Fiddler has been deemed awardable for the second consecutive year through the Chief Digital and Artificial Intelligence Office (CDAO) Tradewinds procurement program, reflecting continued government confidence in Fiddler’s technology and readiness for mission use.

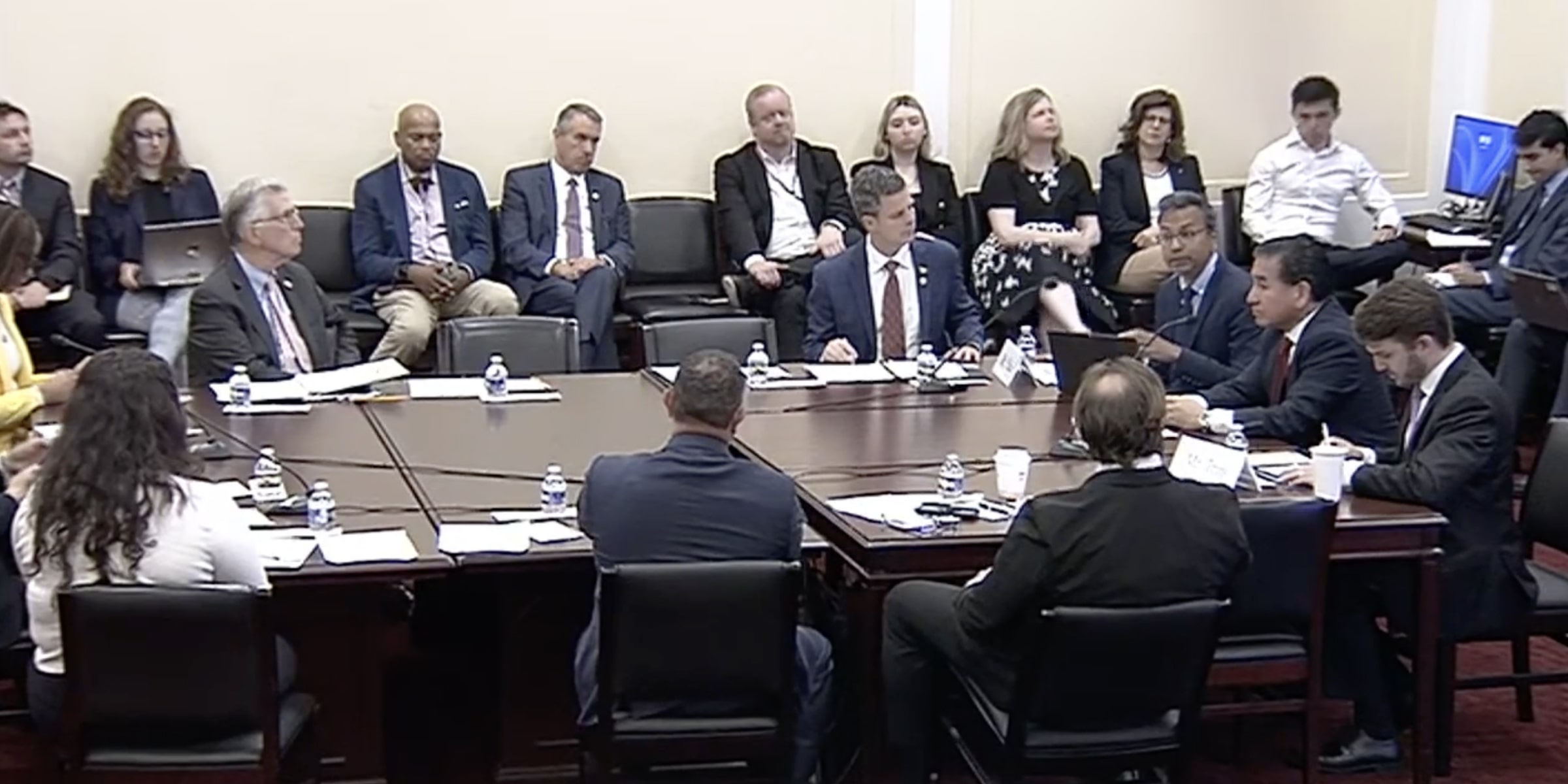

Fiddler has also engaged directly with policymakers, including participating in the bipartisan House Subcommittee on Cybersecurity, Information Technology, and Government Innovation roundtable, “Artificial Intelligence in the Real World,” where Fiddler discussed how AI observability and security enable trustworthy, transparent, and accountable federal AI systems while protecting public trust and national security.

Our experience supporting the Department of War and the Defense Innovation Unit (DIU), including work at the US Navy that resulted in a DIU Success Memo, demonstrates our ability to operate in high-stakes government environments where trust, accountability, and mission outcomes matter. We work with agencies wherever they are in their AI journey, helping them operationalize scalable, safe, and high-performing AI systems while maintaining public trust through unbiased assessments that support truth-seeking and ideological neutrality.

Talk with our team about operationalizing M-26-04 requirements for mission-critical AI deployments.