Maintaining high-performing ML models is increasingly important as more are built into high-stakes applications. However, models can decay in silence and stop working as intended when they ingest production data that is different from the data they were trained on.

A recent study by Harvard, MIT, The University of Monterrey and Cambridge states that 91% of ML models degrade over time. The authors of the study conclude that temporal model degradation or AI aging remains a challenge for organizations using ML models to advance real-life applications. This challenge stems from the fact that models are trained to meet a specific quality level before they can be deployed, but that model quality or performance isn’t maintained with further updating or retraining once they are in production.

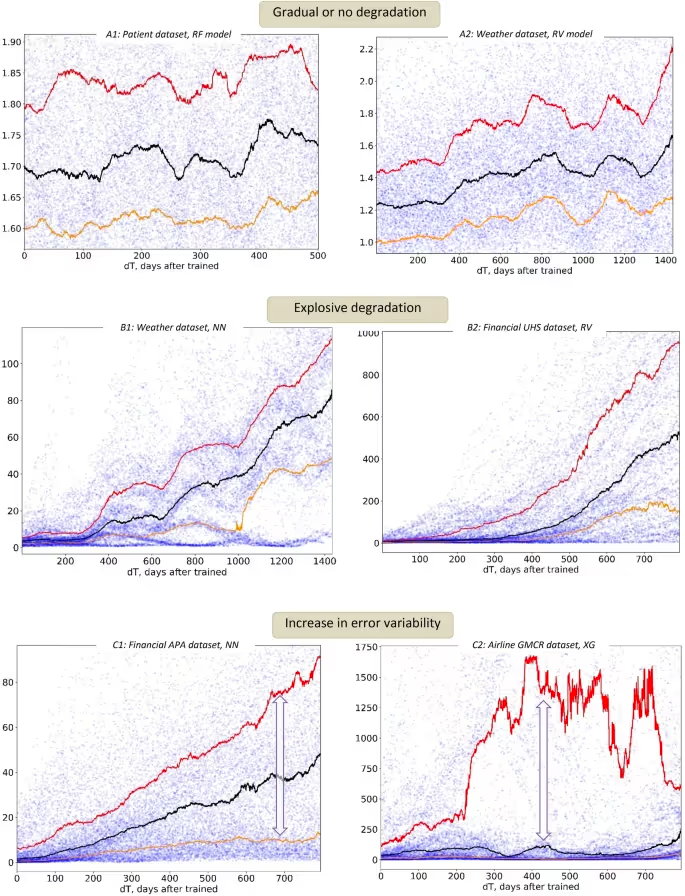

The authors of the study conducted a series of experiments using 32 datasets from 4 industries on 4 standard models (linear, ensembles, boosted, and neural networks), and observed temporal model degradation in 91% of cases. The observations from these experiments are:

- AI aging in models changes relative to the time of their training

- Temporal degradation can be driven by many factors like model hyperparameter choices and the size of the training sets

- Different models with the same dataset can demonstrate different degradation patterns (as shown below)

- Models do not remain static even when they initially achieve high-accuracy

It’s alarming that 91% of models degrade over time, especially when people rely on models to make critical decisions from medical diagnosis/treatment to financial loans. So how can ML teams identify, resolve, and prevent model degradation early?

AI Observability Delivers High-Performing ML with a Continuous Feedback Loop

The majority of the ML work is done in pre-production, but post-production is just as critical. MLOps teams spend most of their time exploring data, identifying key features, and building and training models to address business problems. It is very rare for models to get revisited after they are launched into the wild. And once business teams say that something is wrong with the models' predictions, it’s too late to analyze what went wrong or why models degraded.

It’s critical for teams to design their MLOps lifecycle to create a culture that forces data scientists and ML practitioners to close the gap between pre-production and post-production phases by obtaining production insights for model retraining. Since models are not preserved in their trained state due to data changes in a production environment, ML teams must routinely pay close attention to how production models perform to identify and resolve issues quickly, like AI model degradation, that impact model behaviors.What’s more, ML teams have pivoted from long-cycle model development to agile model development by continuously monitoring model performance so they can update models to evolve with the changing data.

Incorporating an AI Observability platform that spans from pre-production to production helps create a continuous MLOps feedback loop. To build out a robust MLOps framework, ML teams need an AI Observability platform that has:

Pre-production: Addressing AI Aging and Model Degradation Early:

- Model Monitoring:

Identify and define the model metrics that tie into business KPIs. Then monitor training models as if they were already in production by feeding training models 30% of the dataset first and then feeding the remaining 70% of the data over a period of time. By doing so, ML teams can see how the model would perform in a production environment while measuring the defined model metrics.

- Model Validation:

Models need to be validated for bias and transparency to ensure they meet business objectives before they go into production. Teams can validate models by ensuring they meet requirements for performance, model bias, and other metrics defined by the business. Having a 360° view of models, using explainable AI and fairness, before they are deployed is an important step, especially for teams with compliance and model risk requirements.

In production: Detecting ML Degradation through Continuous Monitoring:

- Model Monitoring:

Models need to be continuously monitored to see how they perform compared to training pre-production. Monitoring goes beyond simply measuring metrics; ML teams need to also monitor for data drift, data integrity, and traffic. Data drift provides insights on how production data is shifting compared to training data, which can alter model behavior.

Sometimes poor model performance has nothing to do with data or model quality, and won’t require changes in hyperparameters or even model retraining. Monitoring for data integrity issues is commonly overlooked when ML teams troubleshoot for model issues. When data pipelines are not regularly maintained, broken data pipelines, missing data, or data violations can impact model performance.

- Flexible Alerts:

Model monitoring can be done the right way by implementing flexible and customizable alerts that give ML teams early warnings on high-priority model issues without causing alert fatigue. Get notified as soon as performance spikes or dips on business-critical issues by setting model monitoring alerts on specific metrics for data drift, performance, data integrity and traffic, and their specified thresholds. Stay ahead of model issues before the business gets impacted.

- Rich Model Diagnostics with Root Cause Analysis:

Understanding model behavior goes beyond simply measuring metrics. Whenever metrics dip, ML teams need to find the culprit that caused the model to underperform. It is critical for ML teams to have flexible means to diagnose the root cause of an issue. This means enabling data scientists and ML practitioners to surgically slice and dice the problem area and overlay them with out-of-the-box charts to gain insights on how to resolve the issue, whether it be simply adjusting the model's hyperparameters or retraining the model to evolve with the shifting production environment caused by changes in seasonality, user behavior, or market dynamics.

Incorporating rich model diagnostics with root cause analysis and explainable AI into the MLOps framework creates a continuous feedback loop, and establishes a culture for ML teams to have routine checks for model degradation or abnormal changes in model behavior.

- Custom Dashboards and Reports:

Visualizing the health of models for business applications in a unified, comprehensive dashboard creates a single-source of truth and aligns teams across the organization. Together, teams can monitor and analyze models in real-time to understand how model performance and behavior impact business KPIs, and make better, impactful business decisions that may require models to be updated or retrained to meet business needs.

Prevent Model Degradation with Fiddler

Fiddler is the foundation of robust MLOps, streamlining ML workflows with a continuous feedback loop for better model outcomes. Catch the 91% of models at risk of model degradation before they become a problem. Request a demo today.