With AI adoption surging, forward-thinking enterprises are deploying an increasing number of AI-powered business applications every day. As these algorithms become increasingly intricate, the need for comprehensive model monitoring platforms has become clear. Enterprises have recognized this need, which helps AI Observability vendors further advance best practices to monitor these business critical systems.

As the dust settles, it is clear that two paradigms for AI Observability have emerged — one that focuses on publishing metrics for observation and one that focuses on publishing inferences for observation.

In this blog, we will explore the advantages and disadvantages inherent to these two approaches, and analyze their trade-offs.

Differences between Metrics and Inferences in Machine Learning Monitoring

Monitoring key model metrics over time is a cornerstone of any proper AI Observability platform. Metrics around data quality, data drift, and model performance are essential to measure on an ongoing basis to ensure high performing models. Any AI Observability platform worth its salt will have the “out-of-the-box” ability to surface these basic metrics and alert on them when performance degrades.

The distinction between the two emerging paradigms in the AI Observability space lies not in the metrics organizations need to track, but in how these metrics are calculated.

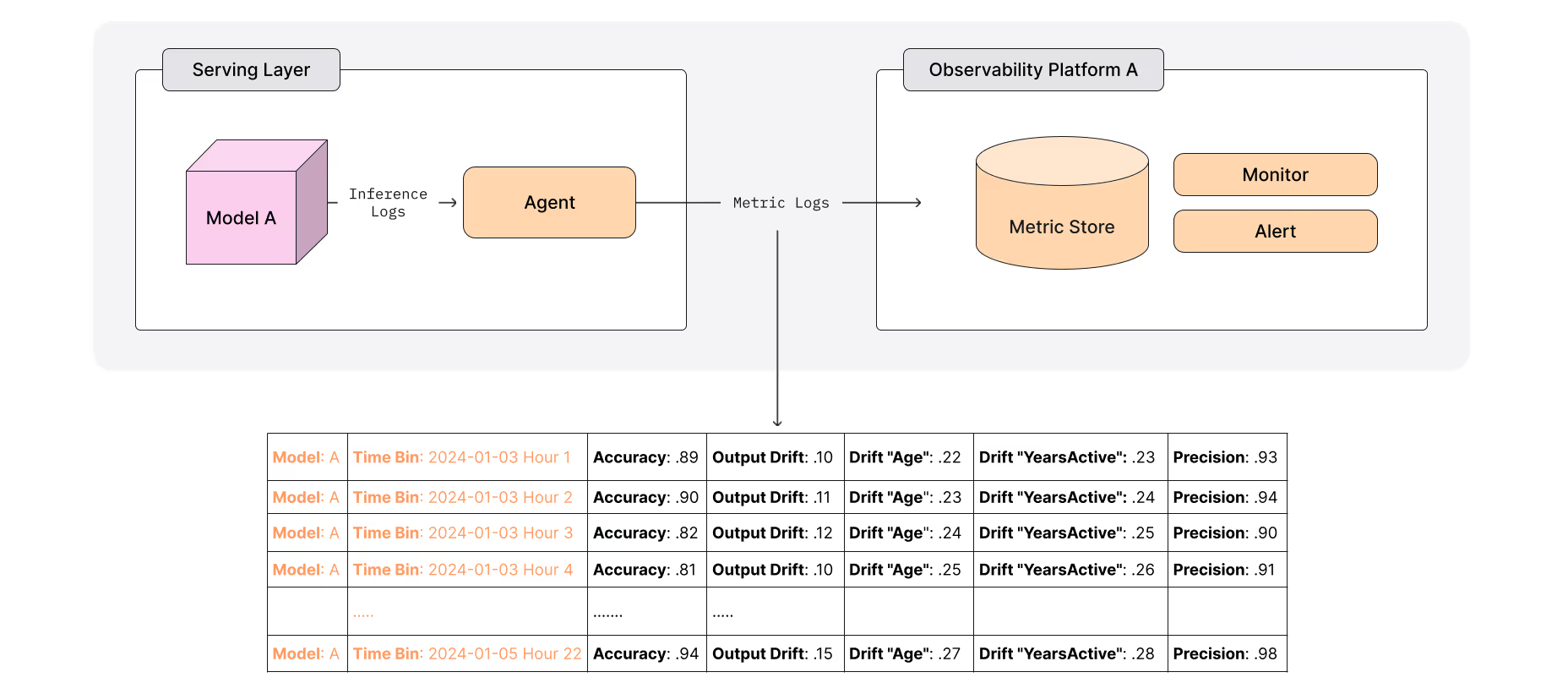

- Observing Metrics: Some vendors opt to compute these model metrics in close proximity to the model itself, subsequently publishing only the aggregated metrics to their observability platform (Figure A)

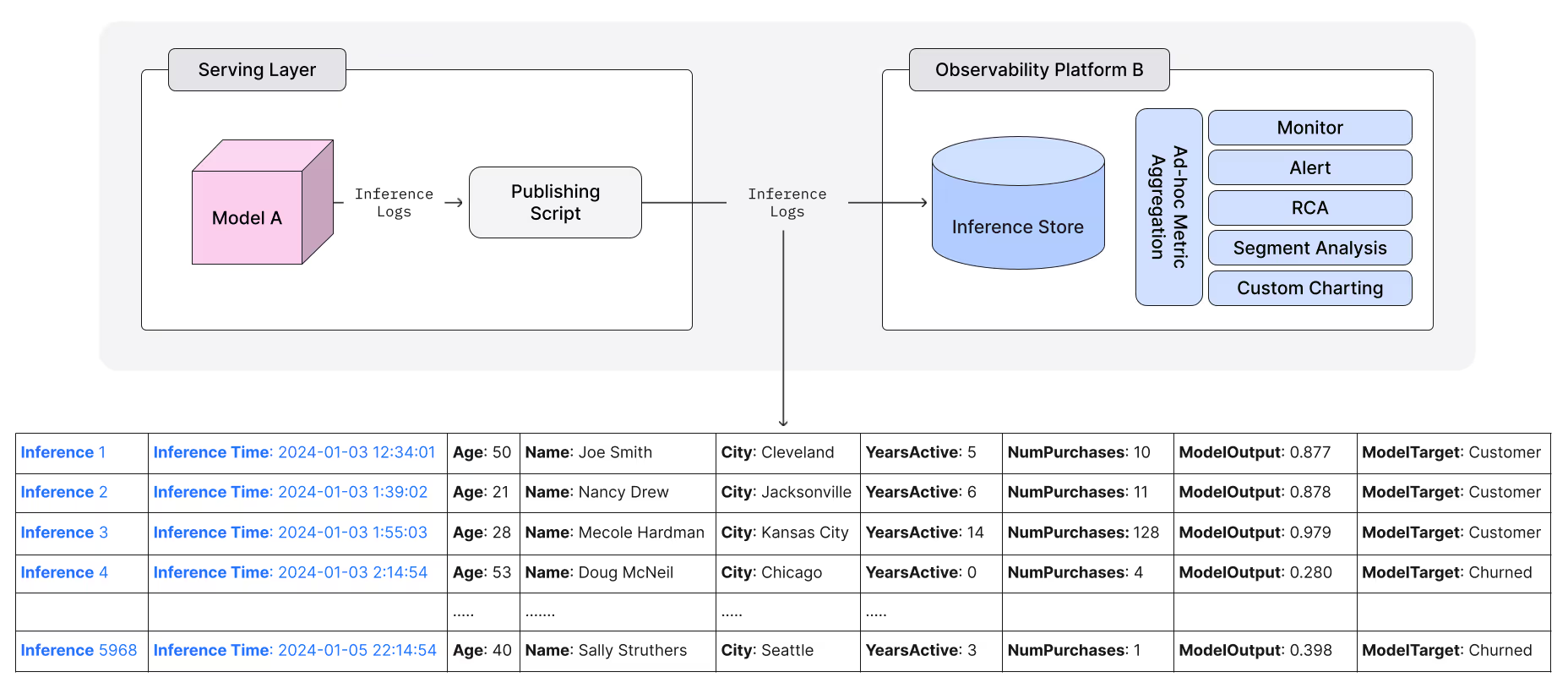

- Observing Inferences: Other vendors prefer to publish both the inputs and outputs recorded in the raw inference logs directly to their observability platform (Figure B)

Let’s look at the two monitoring approaches, and break out their differences using the diagrams below.

Observing Metrics

Figure A demonstrates the process of how AI Observability vendors publish the aggregated metrics to their observability platform. Using either agents that run against the model’s inference logs or using SQL that runs against the model’s inference history table, aggregated metrics are calculated every hour (or some other configurable time bin), and then shipped over to the observability platform to be stored in a table that persists these model metrics over time. This figure depicts not only the data movement but also shows a few sample records of what this pre-aggregated metric data looks like. Note that each row is simply an hourly capture of a model’s performance metrics, like drift and accuracy.

Once the metrics are stored, they can be monitored over time and alerts can be sent whenever key metrics are degrading.

Observing Inferences

By contrast, Figure B demonstrates how other AI Observability vendors have chosen to publish the raw model inference data to their observability platform. Scripts or connectors can be used to publish the raw inputs and outputs of the model inferences either in a batch or streaming fashion. These raw inferences are then persisted with a timestamp in an “inference” table. This figure depicts not only the data movement but also shows a few sample records of what this raw inference data looks like. Note that each row is simply the inputs and outputs of each model inference made.

With the raw inferences now stored, these platforms are now tasked with aggregating the raw inference data to produce the key metrics needed to track model performance through model monitoring tools.

Now that we've detailed the mechanics of both approaches, let’s explore the trade-offs that enterprises need to evaluate to determine which method aligns best with their observability needs.

Benefits of Metrics-Based AI Observability

Abstracting Private Data for Compliance and Security

The first key advantage of observing models via metrics is that PII (personally identifiable information) gets “abstracted away” during the aggregation step. This approach can remove security concerns knowing that PII, or other sensitive data, will not be shipped onto the vendor’s observability platform. With this reduced security concern, AI-forward companies embracing these platforms are more comfortable moving the data onto these platforms without rigorous security audits to be completed. Companies adopting this approach are often more comfortable using the vendor's AI observability platform as a SaaS solution.

Reducing Software and Infrastructure Costs

A second noteworthy advantage stems from the reduction in data footprint within the vendor's observability platform. Pre-aggregating metrics needs less storage space compared to retaining raw inference data. Consequently, this translates to less storage and computational requirements, thereby potentially lowering infrastructure costs. These cost efficiencies are frequently reflected in reduced software costs for customers, further enhancing the attractiveness of adopting such platforms.

Benefits of Inference-Based Model Monitoring

Root Cause Analysis to Fix Model Issues

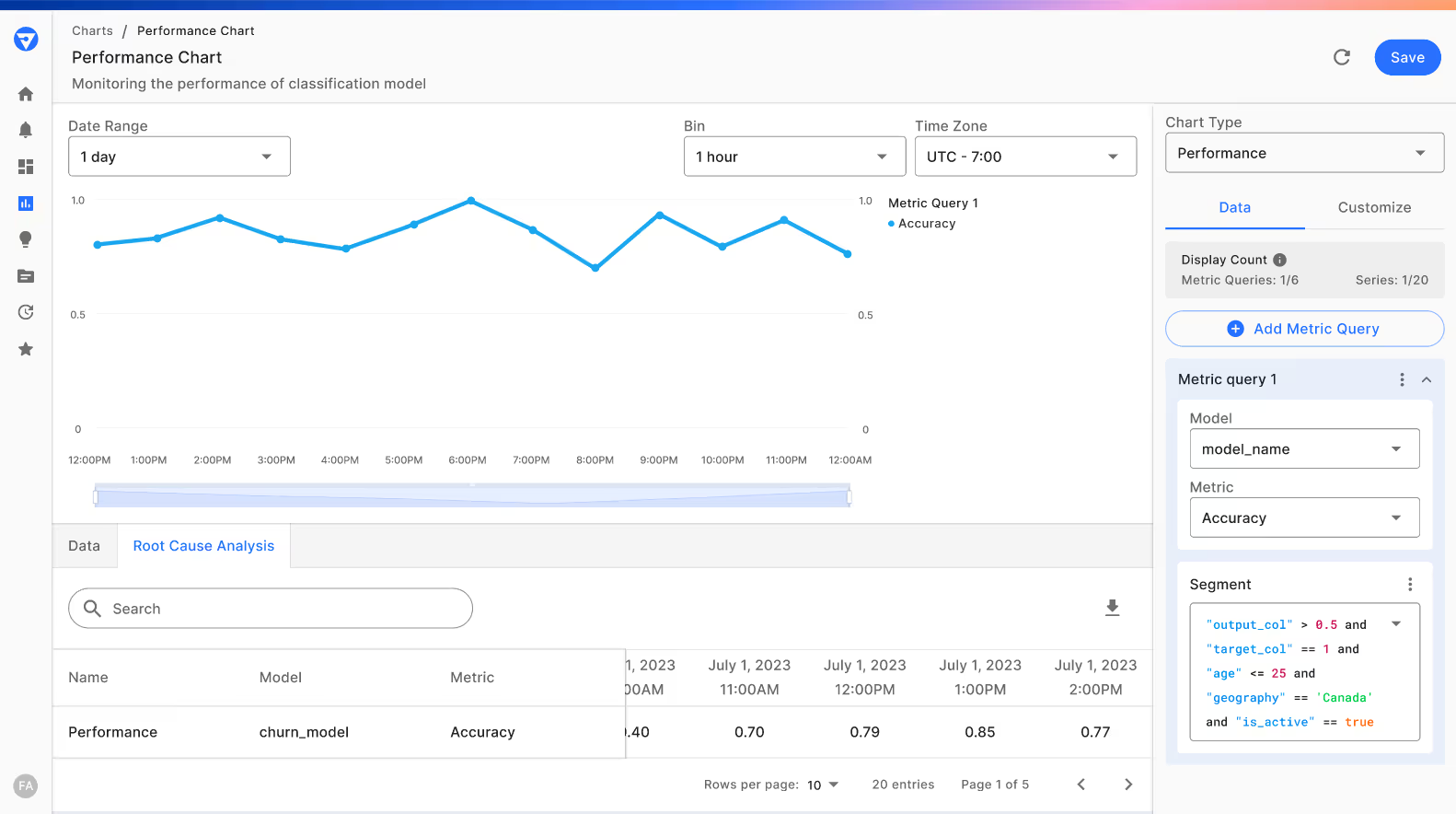

AI Observability platforms, designed for high-fidelity monitoring, use individual references and require metric calculations for effective model behavior monitoring. The key advantage of this approach lies beyond the simple alerts that are triggered after these metrics are calculated. The advantage of this approach truly comes to life when the users want to understand the “why” behind the alert. For example, when a model’s output drifts, leading to reduced accuracy in the model’s outcomes, ML teams must start a root cause analysis to address the issue that triggered the alert.

A series of follow up questions will almost certainly need to be answered to identify the true cause of the model’s degradation:

- Which input is drifting that is causing the output to drift?

- Which cohort is most responsible for the input drift?

- Can I pinpoint the segment that is responsible for bringing the accuracy of the model down?

- Can I run local explanations on inferences in this cohort that show why the model is confused?

With a properly built AI Observability platform, ML teams can analyze the inferences to identify the most problematic cohorts/segments and identify the true root cause of model performance issues.

Time Savings Through Faster Problem Resolution

Stemming from the ability to do powerful root cause analysis directly in the platform, vendors who have built their AI Observability platforms against the raw inferences unlock a tremendous amount of time savings for customers. With all of the model inferences and powerful model analytics tooling in hand, data scientists and other ML practitioners can immediately find the source of the model performance degradation without leaving the platform. Gone are the days of dumping large amounts of data into local notebook environments to do manual investigation — a process that can take days each time a new issue needs to be unearthed.

Monitoring Segmentation and Subpopulations

Inference-based observability also allows model stakeholders to analyze the performance across different monitoring segments. This approach allows for ad-hoc analysis of different model cohorts, enabling stakeholders to plot various cohorts on the same time series charts for comparative performance analysis over time.

Model developers, who often have insights into which cohorts are most challenging for their models, can significantly benefit from this. For example, in a model predicting loan repayment likelihood, applicants on the decision boundary — such as those with low FICO scores but substantial bank balances — may present ambiguous signals. With inference-based observability, these segments can be surfaced on a whim for comparison to other segments. These segments can also be saved for continuous monitoring, and alerts can be defined to trigger when specific metrics of these segments reach problematic levels.

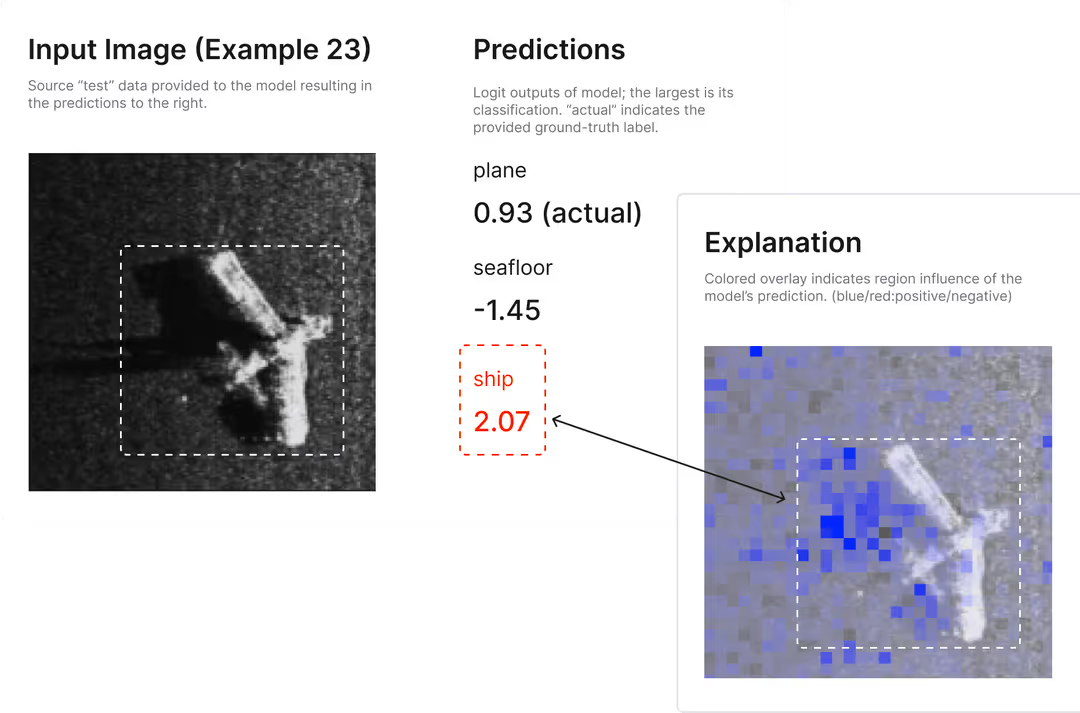

Using Inferences for Local Model Explanations

Using model agnostic explanation techniques like Shapley, LIME and Integrated Gradients can demystify the decision making of these opaque boxes, and help establish human-in-the-loop processes. Model stakeholders can clearly identify the inputs that most significantly influence the predicted output. Additionally, counterfactual or “what if” analysis can be done to see how the model behavior changes with small adjustments to the inputs.

Enhanced Security with Inference-Level Monitoring

Platforms of this mold, which store raw model inference inputs and outputs, can be responsible for storing sensitive information. For this reason, they have to adhere to the strictest security standards in order to have viable SaaS offerings. SOC 2 Type 2 and HIPAA certifications are necessary to ensure customer data is always safe within the AI Observability platform. Most vendors will also support private cloud or air-gapped deployments which alleviate all of these security concerns.

Balancing Metrics and Inferences: Choosing the Right Monitoring Strategy

AI-forward companies must evaluate the comprehensive benefits and costs of these two approaches, beyond just software expenses. Relying solely on aggregated metric observability does not help with root cause analysis which, if conducted manually, becomes a time-intensive process for any organization. Such manual investigations also entail an opportunity cost, diverting teams and resources from other higher-value tasks.

Moreover, leveraging observability through inference, as previously discussed, activates a litany of features that optimize model performance for each segment and across all segments. This pin-pointed optimization not only ensures peak performance but also leads to lower regulatory risk and potentially significant revenue increases for your organization. Ultimately, companies must select a monitoring approach that aligns with their AI strategy, fostering innovation, revenue growth, and the development of a responsible AI-driven business.

Request a demo to learn more about our inference-based model monitoring approach.