What is a model monitoring tool?

As technology advances, the use of artificial intelligence (AI) and machine learning (ML) has increased. You can find them in self-driving cars, chatbots on company websites, and even the recommended advertisements that appear on the side of the screen after you shop online. Over time, though, these models can experience a host of issues, such as data drift or model bias. These issues may stem from any number of factors, including changes in your data lineage, products or policies, customer behavior, or the industry or marketplace itself. Model monitoring helps ML teams stay on top of model performance and catch production issues before they become bigger problems.

Model monitoring is part of machine learning operations (MLOps). What is MLOps? It is a set of best practices meant to guide data science and ML teams in creating, training, and deploying ML models. These approaches increase collaboration between departments and allow for effective model governance.

In this blog, we will focus on the monitoring part of MLOps. Specifically, we will discuss what model monitoring is and which model monitoring tools are available for you to use.

What is monitoring in MLOps?

Model monitoring in MLOps is a critical piece of the lifecycle, where ML teams can observe performance to ensure models act as intended. This can involve validating model performance through a variety of metrics, including:

- Classification metrics

- Regression metrics

- Statistical metrics

- Model drift metrics

- Natural language processing metrics

- Deep learning metrics

Model monitoring also entails finding out when and why an issue occurred, should one arise. Ultimately, this helps ensure model fairness and is essential for model risk management. With ML monitoring tools, MLOps teams can deploy higher-performing models faster, identify issues before they become problems, and receive alerts when retraining is necessary to improve predictions.
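To make two of the metric families above concrete, here is a minimal sketch in plain Python. It computes accuracy (a classification metric) and the population stability index, or PSI (one common drift metric), for a categorical feature. The function names and the `eps` smoothing value are assumptions made for illustration and are not taken from any particular monitoring tool.

```python
import math
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels (a classification metric)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def population_stability_index(baseline, production, eps=1e-6):
    """PSI between two samples of a categorical feature (a drift metric).

    PSI = sum over categories of (p_prod - p_base) * ln(p_prod / p_base).
    eps (an illustrative choice) avoids log(0) and division by zero for
    categories that are missing from one of the samples.
    """
    base_counts, prod_counts = Counter(baseline), Counter(production)
    psi = 0.0
    for category in set(base_counts) | set(prod_counts):
        p_base = max(base_counts[category] / len(baseline), eps)
        p_prod = max(prod_counts[category] / len(production), eps)
        psi += (p_prod - p_base) * math.log(p_prod / p_base)
    return psi


# Example: the category mix shifts from 50/50 at training time to 90/10 in production.
training_sample = ["a"] * 50 + ["b"] * 50
production_sample = ["a"] * 90 + ["b"] * 10
drift_score = population_stability_index(training_sample, production_sample)
```

A PSI of zero means the two distributions match, and larger values mean more drift. The cutoff at which a team decides to retrain is a judgment call that depends on the model and the feature.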

What is an MLOps tool related to monitoring?

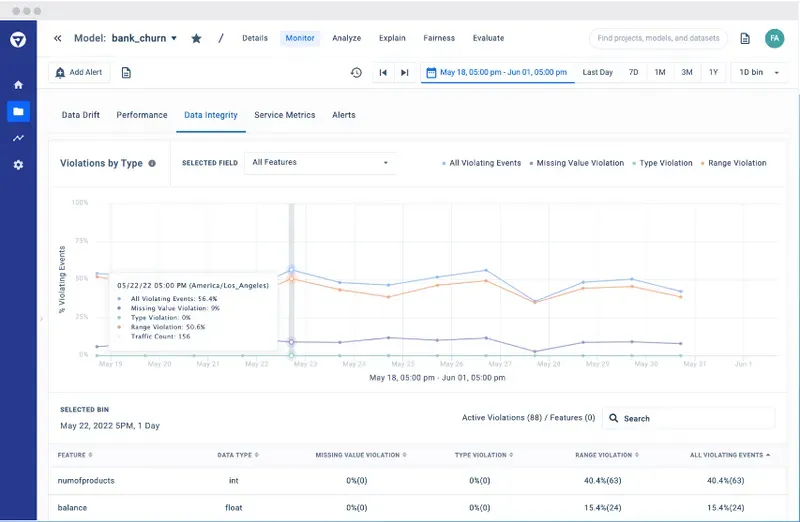

MLOps tools related to monitoring (also called model monitoring tools) make every part of the monitoring process in MLOps easier. Once ML teams deploy their models, model monitoring tools track changes in model performance to detect drift, analyze model behavior to detect bias, and send alerts when models need retraining. The image above shows an example of a model monitoring tool. In this case, it is tracking the percentage of data integrity violations over time to make sure data integrity is upheld.

If the production data contains outliers, ML monitoring tools can help trace them back to the specific model features responsible. Even when no issues are found, these tools continue to analyze the data that your model ingests and outputs to check that it keeps performing as well as possible.
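The data integrity tracking described in this section can be sketched in a few lines of Python. This is only an illustration of the idea, not how any specific tool implements it. The `SCHEMA` dictionary, its field names and ranges, and both function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical schema: expected type and allowed range for each feature.
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, 1e7),
}


def is_violation(record):
    """True if any schema field is missing, has the wrong type, or is out of range."""
    for field, (expected_type, low, high) in SCHEMA.items():
        value = record.get(field)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, expected_type) or isinstance(value, bool):
            return True
        if not low <= value <= high:
            return True
    return False


def violation_percentage_by_day(events):
    """Map each day to the percentage of its records that violate the schema.

    events: iterable of (day, record) pairs.
    """
    totals, violations = defaultdict(int), defaultdict(int)
    for day, record in events:
        totals[day] += 1
        violations[day] += is_violation(record)
    return {day: 100.0 * violations[day] / totals[day] for day in totals}


events = [
    ("2024-01-01", {"age": 30, "income": 50000.0}),
    ("2024-01-01", {"age": -5, "income": 50000.0}),   # age out of range
    ("2024-01-01", {"age": 41, "income": 72000.0}),
    ("2024-01-01", {"age": 27, "income": 38000.0}),
    ("2024-01-02", {"age": 52, "income": 91000.0}),
]
daily_violations = violation_percentage_by_day(events)
```

Plotting `daily_violations` over time gives the kind of chart shown in the image: a rising percentage suggests that something upstream of the model has changed.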

How do I choose MLOps tools?

If you search for a list of MLOps tools online, you are bound to find several to choose from. When deciding among them, look for the following features to get the most out of your ML models:

- A dashboard that provides deep insights into model behavior and issues.

- A platform that operates at an enterprise level for faster time-to-market. An enterprise-level platform will also save you time and money compared with managing open-source model monitoring tools built in-house.

- Real-time alerts that notify you when issues may arise, as well as alerts regarding traffic, errors, and latency.

- The ability to monitor models that work on unstructured data, such as natural language processing (NLP) and computer vision (CV) models.
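As a rough illustration of the alerting feature in the list above, the sketch below checks one window of serving statistics against thresholds for traffic, errors, and latency. The `WindowStats` class, the `check_alerts` function, and all threshold values are hypothetical. Real values depend on the service being monitored.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Aggregated serving statistics for one monitoring window."""
    requests: int
    errors: int
    p95_latency_ms: float


# Illustrative thresholds only; tune them for the service being monitored.
MIN_REQUESTS = 100
MAX_ERROR_RATE = 0.02
MAX_P95_LATENCY_MS = 500.0


def check_alerts(stats):
    """Return a list of human-readable alert messages for one window."""
    alerts = []
    if stats.requests < MIN_REQUESTS:
        alerts.append(f"traffic: only {stats.requests} requests")
    error_rate = stats.errors / stats.requests if stats.requests else 0.0
    if error_rate > MAX_ERROR_RATE:
        alerts.append(f"errors: error rate {error_rate:.1%}")
    if stats.p95_latency_ms > MAX_P95_LATENCY_MS:
        alerts.append(f"latency: p95 {stats.p95_latency_ms:.0f} ms")
    return alerts


healthy = check_alerts(WindowStats(requests=1000, errors=5, p95_latency_ms=120.0))
degraded = check_alerts(WindowStats(requests=50, errors=5, p95_latency_ms=800.0))
```

In a real deployment, the returned messages would be routed to a paging or chat system rather than collected in a list.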

Having features like those listed above will boost team collaboration, lower costs associated with finding and resolving issues, and decrease the number of errors your model experiences overall. If you want to learn more about ML model monitoring, try Fiddler today.