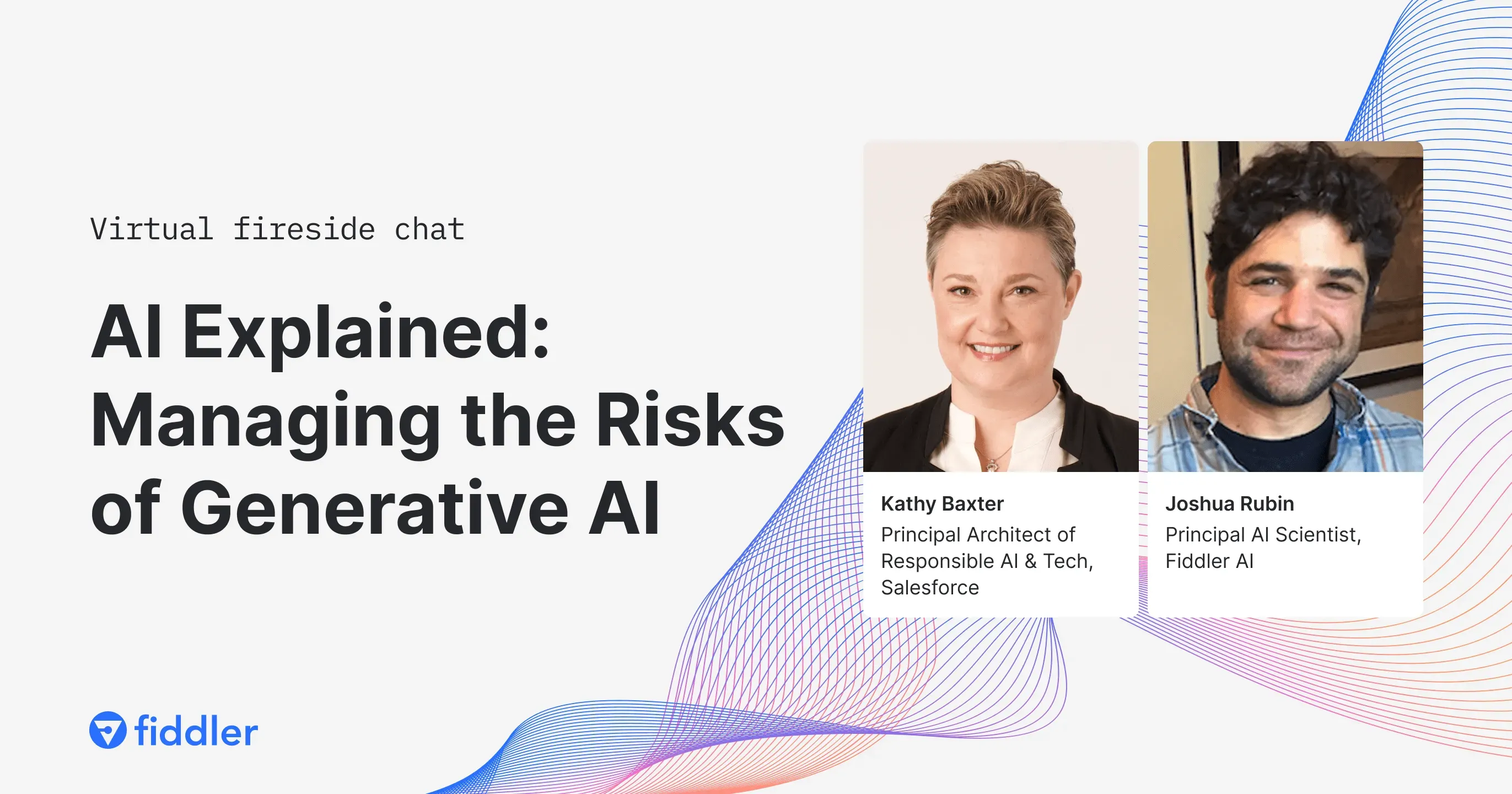

Generative AI is increasingly recognized by organizations as a powerful tool for driving innovation and business growth. However, its wider adoption is often hindered by concerns over ethical implications and unintended consequences that can harm both the organization and its consumers. We had the pleasure of having Kathy Baxter, Principal Architect of Responsible AI & Tech at Salesforce, join us for AI Explained: Managing the Risks of Generative AI. She shared valuable insights on ethical AI practices that organizations can adopt not only to minimize potential harms but also to expand the societal benefits of AI.

The Importance of Ethical and Responsible AI Practices

Ethical AI is a cornerstone of modern AI development and deployment. As AI systems become more complex and influential, the ethical stakes surrounding them grow as well. The key lies in ensuring that these systems are accurate, fair, and secure, while minimizing bias and toxicity.

- Accuracy and Reliability: The accuracy of an AI system determines its reliability. In critical sectors like healthcare, finance, and law, where AI decisions can have profound impacts, the margin for error is minimal. Ensuring accuracy means not only improving the technology but also understanding and mitigating the risks of false or misleading outcomes.

- Combating Bias and Toxicity: Bias in AI can reflect the biases inherent in society. It’s crucial to recognize and rectify these biases in AI systems to avoid perpetuating societal inequities. Similarly, guarding against toxicity in AI-generated content is vital to maintaining the integrity and trustworthiness of AI systems.

- Security Measures: With the increasing use of AI in sensitive areas, security cannot be an afterthought. Robust security measures are essential to protect against data breaches and misuse of AI systems. This includes ensuring the AI system’s resilience to attacks and its ability to maintain confidentiality and data integrity.

Enterprises can use model cards as a transparency tool in AI, designed to provide detailed information about AI models, including training data, performance, known biases, and intended uses. These model cards help AI teams make informed decisions when procuring AI technology and promote ethical and responsible AI practices by enhancing understanding and accountability of AI systems.
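To make the model card idea more concrete, here is a minimal sketch in Python of how a team might record one as structured data. The `ModelCard` class, its field names, and the `missing_sections` helper are hypothetical and chosen only to mirror the elements listed above (training data, performance, known biases, intended uses); they do not follow any particular vendor's or standard's schema, and the values are placeholders rather than real results.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative only."""

    model_name: str
    intended_uses: List[str]
    training_data: str
    performance: Dict[str, float]
    known_biases: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Return the names of sections left empty, so reviewers can spot gaps."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the card so it can be shared alongside the model."""
        return json.dumps(asdict(self), indent=2)


# Placeholder values only; a real card would carry the team's own findings.
card = ModelCard(
    model_name="example-support-assistant",
    intended_uses=["Drafting replies for human support agents to review"],
    training_data="Placeholder description of the data sources",
    performance={"placeholder_metric": 0.0},
    known_biases=[],  # left empty on purpose to show the gap check
)

print(card.missing_sections())
print(card.to_json())
```

A check like `missing_sections` is one simple way a procurement or review team could flag cards that omit a section, such as known biases, before a model is approved for use.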

Collaborative Governance and Regulation

Staying ahead of advances in AI, especially generative AI, requires enterprises to adopt an AI safety culture: a comprehensive approach to AI development and deployment that emphasizes leadership, accountability, transparency, and continuous learning so that AI systems are safe, reliable, and trustworthy. By adopting an AI safety culture, teams across the enterprise can collaboratively build an AI governance framework that everyone involved can stand behind.

- Public-Private Partnerships: A symbiotic relationship between the private sector and government agencies is crucial for effective AI governance. These partnerships can lead to the development of comprehensive policies and best practices that are informed by the expertise of both industry leaders and regulatory bodies. This collaboration ensures that regulations keep pace with technological advancements while safeguarding public interest.

- Global Standards and Policies: AI crosses geographical boundaries. Hence, there's a pressing need for global standards and policies that address the universal challenges posed by AI. International collaboration can aid in establishing a set of shared values and principles that guide AI development worldwide.

Enterprises can follow the AI Risk Management Framework (AI RMF) developed by the National Institute of Standards and Technology (NIST). The AI RMF guides enterprises to adopt a well-considered and transparent process that not only improves their own AI practices but also contributes to the overall trustworthiness and reliability of AI technologies in the industry.

- Sector-Specific Regulations: Different industries face unique challenges when integrating AI, so sector-specific regulations are necessary to address them. Such tailored regulations ensure that AI is used responsibly and ethically in specific contexts, whether in healthcare, banking, or transportation.

- Adapting to Rapid Technological Changes: The pace at which AI is evolving requires governance frameworks that are adaptable and responsive. Continuous dialogue between technologists, policymakers, and other stakeholders is essential to update and refine AI regulations in line with emerging trends and discoveries.

The Road to AI Literacy and Transparency

As AI increasingly integrates into daily life, it's essential for consumers to not only understand and trust AI interactions but also grasp the underlying principles and practices. This knowledge empowers consumers to make informed decisions and use AI responsibly.

- Understanding AI Technologies: A fundamental challenge in consumer AI literacy is the complexity of the technology. Simplifying and demystifying AI for the general public is essential. This includes educating consumers about how AI systems work, the data they use, and the potential biases they might carry. When consumers have a basic understanding of these concepts, they can interact with AI more effectively and critically.

- Transparency and Trust: Transparency in AI operations builds trust. Consumers should have access to information about how AI systems make decisions, especially when these decisions impact their lives directly. Clear communication about the capabilities and limitations of AI helps set realistic expectations and reduces the potential for misuse or misunderstanding of the technology.

- Empowering Consumers: Empowerment goes beyond understanding; it's about giving consumers control. This can be achieved through features that allow users to customize AI interactions according to their preferences and needs. For instance, options to opt out of certain data collection or to see why an AI system made a specific recommendation can significantly enhance user agency.

- Ethical Considerations: Consumers should also be aware of the ethical considerations of AI. This includes understanding the societal impact of AI, such as job displacement concerns, privacy issues, and the potential for AI to reinforce societal biases. An informed public can advocate for ethical AI development and hold companies accountable.

Watch the full AI Explained: Managing the Risks of Generative AI on demand.