We’re excited to announce the Fiddler and Domino partnership! Together, we’re helping companies accelerate the production of AI solutions and streamline their end-to-end MLOps and LLMOps observability.

Complete observability for your Domino models with Fiddler

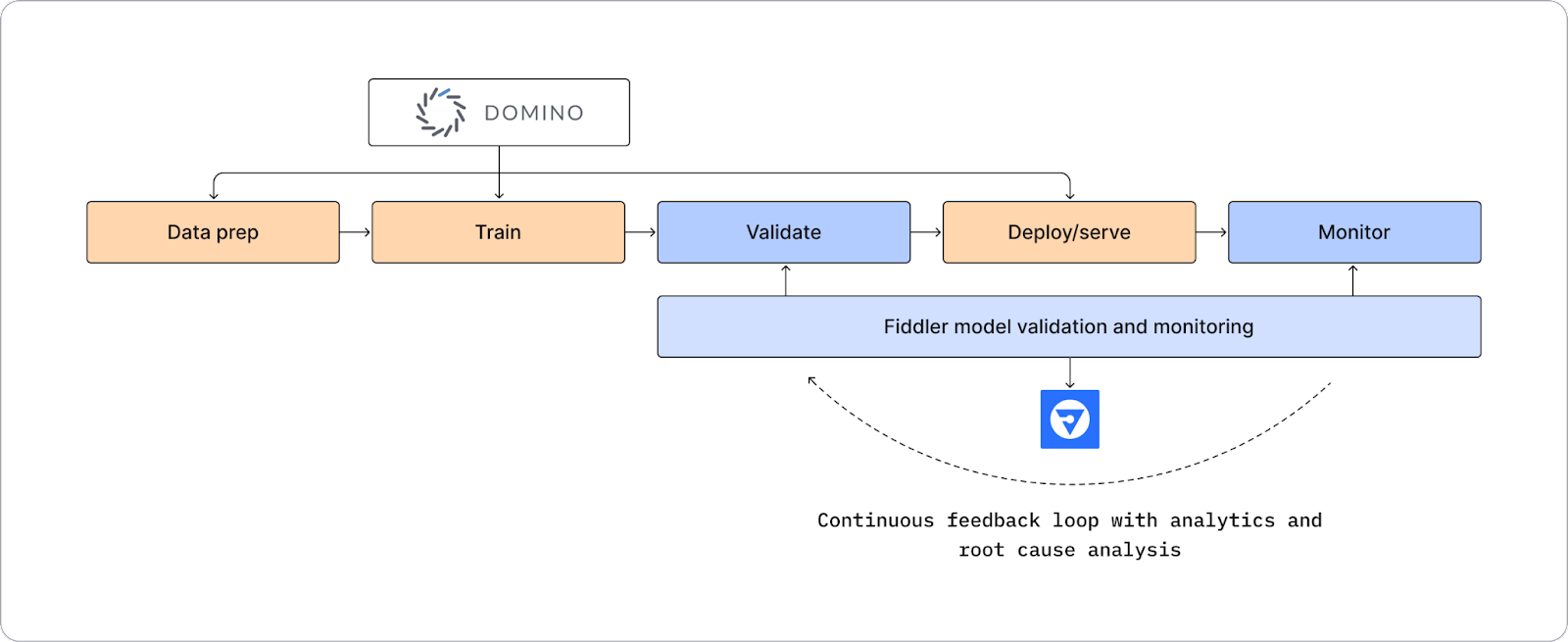

The Domino Platform and the Fiddler AI Observability Platform allow your team to streamline MLOps and LLMOps workflows. Fiddler creates a continuous feedback loop from pre-production validation to post-production monitoring to ensure your ML models and large language model (LLM) applications are optimized, high-performing, and safe.

MLOps Observability

Data scientists and AI practitioners in MLOps can explore data and train models in Domino’s Platform. Through Fiddler’s integration with Domino, they can use the Fiddler AI Observability Platform to validate models before launching them into production. Behind the scenes, Fiddler monitors production models for model drift, data drift, performance, data integrity, and traffic, and alerts ML teams as soon as the performance of a high-priority model dips.
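To make “data drift” concrete: one common way to quantify it is to bin a feature’s baseline and production values into the same histogram and compare the two distributions with Jensen-Shannon divergence. The sketch below is a standalone illustration that uses only the Python standard library. The function names, the bin count, the sample data, and the 0.1 alert threshold are assumptions for the example, not Fiddler’s implementation or API.

```python
import math
from collections import Counter


def histogram(values, lo, hi, bins=10):
    """Return bin proportions for values over the fixed range [lo, hi]."""
    width = (hi - lo) / bins
    counts = Counter()
    for v in values:
        # Clamp the index so that v == hi falls into the last bin.
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    total = len(values)
    return [counts[i] / total for i in range(bins)]


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two discrete distributions."""
    def kl(a, b):
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def drift_score(baseline, production, bins=10):
    """Bin both samples on a shared range and return their JS divergence."""
    lo = min(min(baseline), min(production))
    hi = max(max(baseline), max(production))
    if hi == lo:
        return 0.0  # every value is identical, so there is nothing to compare
    return js_divergence(histogram(baseline, lo, hi, bins),
                         histogram(production, lo, hi, bins))


# Hypothetical feature values: the production sample has shifted upward.
baseline = [20 + (i % 30) for i in range(300)]
shifted = [35 + (i % 30) for i in range(300)]

DRIFT_THRESHOLD = 0.1  # assumed alerting threshold for this example
score = drift_score(baseline, shifted)
print(f"drift score: {score:.3f}, alert: {score > DRIFT_THRESHOLD}")
```

With base-2 logarithms the score stays between 0 (identical distributions) and 1 (no overlap), which makes a single threshold easy to reason about across features.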

Fiddler goes beyond measuring model metrics. It arms ML teams with a 360° view of their models using rich diagnostics and explainable AI. Contextual model insights connect model performance metrics to model issues and anomalies, creating a feedback loop in the MLOps workflow between production and pre-production. Fiddler helps ML teams pinpoint areas of model improvement, so they can go back to earlier stages of the MLOps workflow in Domino to explore and gather new data for model retraining.

LLMOps Observability

Data engineers, software engineers, and AI practitioners in LLMOps can evaluate the robustness, safety, and correctness of LLM applications in pre-production using Fiddler Auditor, the open-source LLM robustness library. Fiddler Auditor is available on GitHub and on Domino AI Hub, so Domino users can red-team and monitor LLMs without leaving their environment.
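The idea behind a robustness evaluation can be sketched without any LLM dependency: send variations of the same prompt to the model and check that the answers stay similar. The example below is a hypothetical illustration, not the Fiddler Auditor API. `toy_model`, `perturb`, and the 0.8 similarity threshold are stand-ins, and a real evaluation would call an actual LLM and use a semantic similarity measure rather than `difflib`.

```python
from difflib import SequenceMatcher


def perturb(prompt):
    """Produce simple surface-level variations of a prompt (illustrative only)."""
    return [
        prompt.lower(),
        prompt.upper(),
        "Please answer: " + prompt,
        prompt.rstrip("?") + " ?",
    ]


def toy_model(prompt):
    """Stand-in for an LLM call; brittle on purpose so the check finds something."""
    if prompt.isupper():
        return "I cannot help with that."
    return "The capital of France is Paris."


def robustness_report(model, prompt, threshold=0.8):
    """Compare the response to each perturbed prompt with the reference response."""
    reference = model(prompt)
    report = []
    for variant in perturb(prompt):
        response = model(variant)
        similarity = SequenceMatcher(None, reference, response).ratio()
        report.append({
            "prompt": variant,
            "similarity": similarity,
            "passed": similarity >= threshold,
        })
    return report


report = robustness_report(toy_model, "What is the capital of France?")
for row in report:
    status = "PASS" if row["passed"] else "FAIL"
    print(f"{status}  {row['similarity']:.2f}  {row['prompt']}")
```

Each failing row points at a specific prompt variation that changed the model’s behavior, which is the kind of finding a red-teaming pass is meant to surface before release.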

Once LLM applications are in production, users can monitor them for correctness, safety, and privacy metrics. LLMOps teams can also perform root cause analysis with a 3D UMAP visualization to pinpoint problematic prompts and responses and understand how to improve their applications.
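As a concrete illustration of a privacy metric, the sketch below scans LLM responses for patterns that look like email addresses or phone numbers and reports the share of responses that contain one. It is a simplified, hypothetical example: the regular expressions, the `find_pii` and `pii_rate` helpers, and the sample responses are assumptions, and production-grade PII detection needs far broader coverage than two patterns.

```python
import re

# Deliberately simple patterns; real PII detection covers many more cases.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text):
    """Return the names of the PII patterns that match the text."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]


def pii_rate(responses):
    """Fraction of responses that contain at least one PII match."""
    if not responses:
        return 0.0
    flagged = sum(1 for text in responses if find_pii(text))
    return flagged / len(responses)


# Hypothetical production responses.
responses = [
    "Your order has shipped and should arrive on Friday.",
    "You can reach the account owner at jane.doe@example.com.",
    "Call support at 555-123-4567 for help.",
    "Our return policy lasts 30 days.",
]

for text in responses:
    print(find_pii(text) or "clean", "|", text)
print(f"PII rate: {pii_rate(responses):.0%}")
```

Tracking a rate like this over time turns individual leaks into a metric that can be charted and alerted on.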

Get started on MLOps with Fiddler in three steps

Let’s walk through how you can start monitoring Domino ML models in Fiddler. Install and initialize the Fiddler client to validate and monitor ML models built on Domino’s Platform in minutes, either by following the steps below or as described in our documentation:

1. Upload a baseline dataset

Retrieve your pre-processed training data from Domino’s TrainingSets. Then load it into a dataframe and pass it to Fiddler:

    from domino.training_sets import TrainingSetClient
    import fiddler as fdl

    # Get your training data from Domino
    TRAINING_SET = "Your Training Dataset"
    training_set_by_num = TrainingSetClient.get_training_set_version(
        training_set_name=TRAINING_SET,
        number=2,
    )
    baseline_dataset = training_set_by_num.load_raw_pandas()

    # Initialize the Fiddler client
    fiddler_client = fdl.FiddlerApi(
        url="Your Fiddler URL",
        org_id="Your Fiddler Org ID",
        auth_token="Your Fiddler Auth Token",
    )

    # Infer the dataset schema from the dataframe
    dataset_info = fdl.DatasetInfo.from_dataframe(
        baseline_dataset,
        max_inferred_cardinality=100,
    )

    # Upload the baseline dataset to Fiddler
    fiddler_client.upload_dataset(
        project_id='Your Project Name',
        dataset_id=TRAINING_SET,
        dataset={'baseline': baseline_dataset},
        info=dataset_info,
    )

2. Add metadata about the model

To share model metadata, use Domino Data Lab’s MLflow implementation to query the model registry and get the model signature, which describes the inputs and outputs as a dictionary:

    import mlflow
    from mlflow.tracking import MlflowClient

    # Placeholders for the registered model you want to monitor
    model_name = "Your Registered Model Name"
    model_version = "Your Model Version"

    # Initialize the MLflow client
    client = MlflowClient()
    model_version_info = client.get_model_version(model_name, model_version)

    # Get the model URI (the second argument is the version, not the version object)
    model_uri = client.get_model_version_download_uri(model_name, model_version_info.version)

    # Get the model signature and extract the input column names
    mlflow_model_info = mlflow.models.get_model_info(model_uri)
    model_inputs_schema = mlflow_model_info.signature.inputs.to_dict()
    model_inputs = [sub['name'] for sub in model_inputs_schema]

Now you can share the model signature with Fiddler as part of the Fiddler ModelInfo object:

    # Placeholders for the Fiddler project and the dataset uploaded in step 1
    PROJECT_ID = 'Your Project Name'
    DATASET_ID = TRAINING_SET

    features = model_inputs
    model_task = fdl.ModelTask.BINARY_CLASSIFICATION

    # Use the Fiddler client from step 1 here, not the MLflow client
    model_info = fdl.ModelInfo.from_dataset_info(
        dataset_info=fiddler_client.get_dataset_info(PROJECT_ID, DATASET_ID),
        target='TARGET COLUMN',
        dataset_id=DATASET_ID,
        model_task=model_task,
        features=features,
        outputs=['output_column'],
    )

    # Upload model info to Fiddler
    fiddler_client.add_model(
        project_id=PROJECT_ID,
        dataset_id=DATASET_ID,
        model_id='Your Model Name',
        model_info=model_info,
    )

3. Publish events

You can query the data sources in your Domino environment to pull the model inferences, load the new inferences into a dataframe, and publish them to Fiddler:

    from domino.data_sources import DataSourceClient

    # Instantiate a client and fetch the data source instance
    redshift = DataSourceClient().get_datasource("YOUR-DATA-SOURCE")

    query = """
        SELECT
            *
        FROM
            INFERENCE_TABLE
    """

    # res is a simple wrapper of the query result
    res = redshift.query(query)

    # to_pandas() loads the result into a pandas dataframe
    df = res.to_pandas()

    # Publish the inferences to Fiddler
    fiddler_client.publish_events_batch(
        project_id='Your Project Name',
        model_id='Your Model Name',
        batch_source=df,
    )

That’s it! Now you can jump into your Fiddler environment to start observing the model data we just published. Fiddler can alert you whenever there are issues with your model.
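One optional safeguard for the publish step: before sending a batch, confirm that every inference record contains each feature named in the model signature and that none of those values is null. The sketch below is a hypothetical helper, not part of the Fiddler or Domino clients. It works on plain dictionaries instead of a dataframe to stay dependency-free, and the `check_events` name, feature names, and sample records are assumptions.

```python
def check_events(rows, expected_columns):
    """Return a list of human-readable problems found in the event rows."""
    problems = []
    for i, row in enumerate(rows):
        missing = [c for c in expected_columns if c not in row]
        empty = [c for c in expected_columns if c in row and row[c] is None]
        if missing:
            problems.append(f"row {i}: missing columns {missing}")
        if empty:
            problems.append(f"row {i}: null values in {empty}")
    return problems


# Hypothetical feature names and inference records.
model_inputs = ["age", "income", "tenure_months"]
events = [
    {"age": 42, "income": 85000, "tenure_months": 18, "output_column": 0.12},
    {"age": 35, "income": None, "tenure_months": 7, "output_column": 0.64},
    {"age": 51, "tenure_months": 30, "output_column": 0.33},
]

problems = check_events(events, model_inputs)
for problem in problems:
    print(problem)
if not problems:
    print("all events look publishable")
```

With a pandas dataframe, the same check could be expressed with column set differences and `isna()`, but the logic is the same: catch schema gaps locally instead of discovering them later as data integrity issues.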

We’re here to help. Contact our AI experts to learn how enterprises are accelerating AI solutions with streamlined end-to-end MLOps and LLMOps using Domino and Fiddler together.