How do you train and test a model in machine learning and create responsible AI?

4

Min Read

How is testing done in machine learning? The answer is more complicated than you might realize. Yes, the mechanics behind training and testing a model are complex in themselves, but creating responsible models in machine learning (ML) comes with a whole other set of unique challenges.

Fundamentally, AI has the potential to provide innovative and productive solutions to modern-day problems. However, there are significant risks. To illustrate, bias is one of the most pervasive and critical issues in AI. From AIs showing bias against women to algorithms favoring white patients over black patients in US hospitals, there are many instances where biased AI has run rampant and caused turmoil.

Based on these two examples alone, it is abundantly clear that biased AI has dangerous consequences. Therefore, it is paramount to ensure that models adhere to ethical standards while they are in operation.

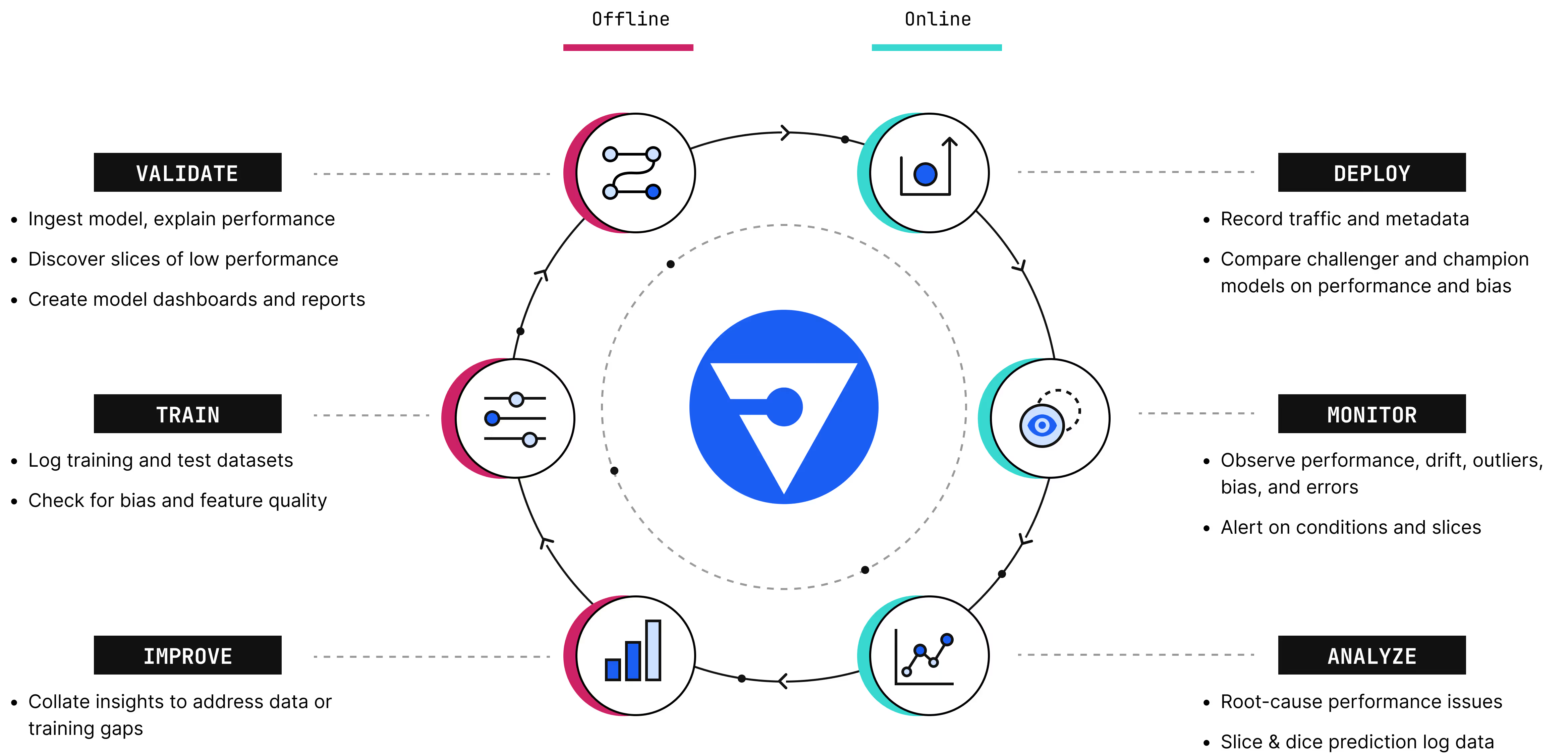

Bias and other issues can be avoided in machine learning models by offering humans greater visibility into every stage of the system life cycle. With improved visibility into model performance online and offline, unethical or error-ridden behaviors can be detected and managed early on.

In this blog, we won’t get too in the weeds describing the technicalities of how to train a machine learning model— taking a deep dive into the processes of machine learning regression testing and supervised machine learning would turn this blog post into a novel.

Instead, we are going to explore these processes on a fundamental level, and explain how model monitoring and machine learning operations technology (MLOps) both improve the training and testing process to create truly trustworthy AI.

Reviewing the basics: how is a machine learning model trained and tested?

As a key component of the MLOps life cycle, ML training and testing has one goal: assess the accuracy of your model.

There are three stages involved in this process:

- Training: The training portion of this process involves creating the model itself, and primarily focuses on formulating the model’s decision making capabilities.

- Validation: The model validation stage allows teams to unearth instances of unwanted model behavior, while still operating in a controlled environment.

- Testing: The testing stage finally determines if your model is performing correctly in real-world settings.

Additionally, each stage of this process involves using specific datasets:

Training data

Training data in machine learning is what an ML algorithm is built on. Essentially, this is the model's DNA. Throughout the training process, the model is designed to continually evaluate the data, make adjustments to function, and complete its objectives.

Validation data

Validation data is also used in the training process. This data incorporates new, unevaluated information into the model. This is where teams test how their model behaves and makes predictions based on new information. In short, validation data helps influence the model’s adaptability.

Test data

Lastly, we must consider how test data is used in machine learning. After the training phase comes to an end, and the model is fully operational, testing begins. Testing data is used to determine how a model interacts with unseen, real-world datasets. This allows teams to determine if the ML algorithm has been effectively trained.

As models are built, trained, and evaluated, data scientists will choose the approach with the best performance. However, because of low visibility, weak performance monitoring, or the opaque nature of models themselves, model bias and other issues will creep in if we aren’t careful.

Creating reliable AI through model monitoring and MLOps technology

With AI increasingly driving critical functions, it is essential to create responsible AI. By closely monitoring how the model is performing, issues like model bias and data drift, can be detected and eradicated before any adverse effects are experienced. Accurate model monitoring tools ensure models are continuously improving and will provide the best results possible.

At Fiddler, our mission is to help you build trust into your AI. Our AI observability platform enables you to observe and explain the changes occurring within your models in real-time, allowing you to make adjustments before deployment.

Request a demo to learn more.