Build Responsible AI with AI Observability and Security

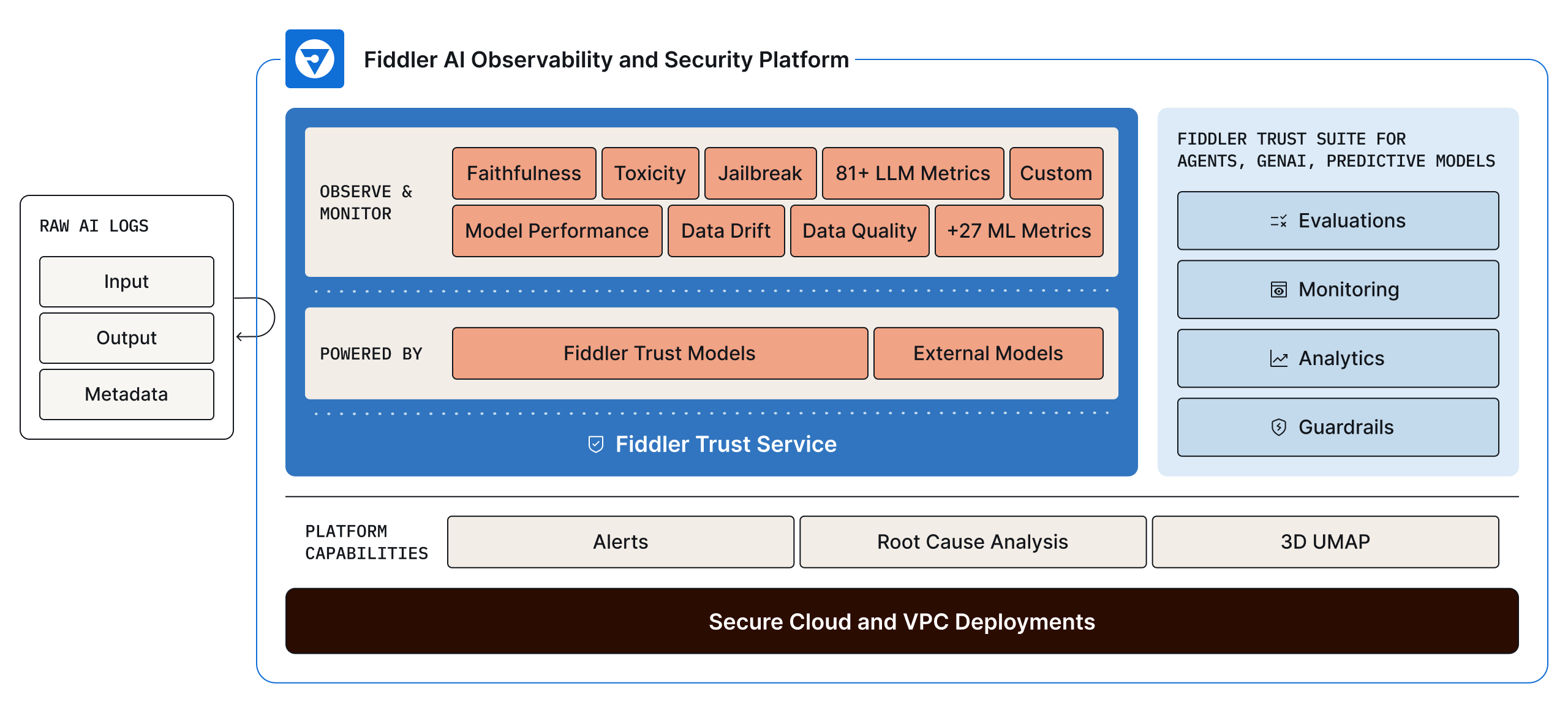

Fiddler is a pioneer in AI Observability and Security, the foundation that gives enterprises the confidence to ship more predictive models, generative models, and LLM applications into production safely and responsibly. We help instill trust and transparency in your agentic, LLM, and ML applications, factors that directly impact your return on investment (ROI). By driving AI governance, risk management, and compliance, Fiddler supports the advancement of responsible AI practices. AI Observability relies not only on metrics but also on how well issues can be explained when something goes wrong.

Fiddler empowers your Data Science, Engineering, Trust & Safety, and Security teams to monitor, analyze, and protect agentic, LLM, and ML applications.

Build Trust into AI with Fiddler

- All-In-One AI Observability and Security Platform: A unified platform that brings together agentic, LLM, and ML observability, Fiddler Trust Service, Guardrails, and contextual model analytics.

- Actionable Diagnostics: Deep root cause analysis and actionable application and model insights for quick issue resolution and model improvement.

- Built for the Enterprise and Government: Enterprise-grade scalability and stability to support secure agentic, LLM, and ML deployments in SaaS and VPC environments, including AWS GovCloud for government customers.

Key Capabilities

Monitoring

Monitor predictive models and LLMs in production and manage performance, correctness, and safety metrics at scale from a single pane of glass. From alerts to root cause analysis, pinpoint areas of application and model underperformance and minimize business impact. Quickly identify the root cause and the “why” behind each issue.

Plug Fiddler into your existing agentic, LLM, and ML tech stacks for consolidated monitoring to:

- Gain operational efficiencies through faster time-to-market at scale.

- Reduce costs by improving MTTI and MTTR for AI applications.

- Foster responsible innovation by safeguarding agentic, LLM, and ML applications and data.
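To make the MTTI and MTTR goal above concrete, here is a minimal sketch of how the two metrics could be computed from incident timestamps. It assumes MTTI means mean time to identify and MTTR means mean time to resolve, both measured from the start of the incident (the text above does not expand the acronyms, and conventions vary). The `Incident` record, its field names, and the sample timestamps are hypothetical illustrations and are not part of any Fiddler API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    # Hypothetical record; field names are assumptions, not a Fiddler schema.
    started: datetime     # when the issue began affecting the application
    identified: datetime  # when the issue was detected
    resolved: datetime    # when the fix was confirmed in production


def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Average a non-empty list of durations."""
    if not deltas:
        raise ValueError("need at least one incident")
    return sum(deltas, timedelta()) / len(deltas)


def mtti(incidents: list[Incident]) -> timedelta:
    """Mean time to identify: start of incident to detection."""
    return mean_delta([i.identified - i.started for i in incidents])


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to resolve: start of incident to resolution (assumed convention)."""
    return mean_delta([i.resolved - i.started for i in incidents])


# Made-up sample data for illustration only.
incidents = [
    Incident(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 9, 30), datetime(2024, 1, 1, 11, 0)),
    Incident(datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 10), datetime(2024, 1, 2, 15, 0)),
]

print("MTTI:", mtti(incidents))
print("MTTR:", mttr(incidents))
```

Tracking both numbers over time shows whether monitoring and alerting changes shorten detection, resolution, or both.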

Analytics

Analytics must deliver increased transparency and actionable insights that power data-driven decisions. To improve predictions, market context and business alignment must be baked into modeling so results reflect the needs and challenges of your business.

Use descriptive and prescriptive analytics from LLMs and ML models to make decisions so you can:

- Deploy higher-ROI models to increase revenue.

- Align decisions to stay in lockstep with business needs.

- Respond quickly and improve applications and models when market dynamics shift.

Security

Safeguard AI applications with enterprise-grade security and low-latency guardrails. Fiddler enables secure deployment in SaaS, VPC, and air-gapped environments, preventing data leaks, prompt injection attacks, and jailbreak attempts while upholding trust and safety.

Proactively protect against security risks:

- Prevent security threats like prompt injection and adversarial attacks.

- Reduce risk exposure with compliance-driven monitoring and alerts.

- Ensure data privacy with secure deployments in SaaS, VPC, and air-gapped environments.

Agentic and LLM Use Cases

- AI Chatbot Monitoring

- Content Summarization

- LLM Cost Management

- Internal Copilot Monitoring

- Multi-Agent Interdependency Impact

- Agentic Hierarchy Monitoring

- AI Governance

- Risk Management

- Compliance

- Security

ML Use Cases

- Anti-Money Laundering

- Fraud Detection

- Credit Scoring

- Investment Decision-Making

- Underwriting

- Churn Detection

- AI Governance

- Risk Management

- Compliance

- Security