Visibility, Context, and Control for Agentic and Predictive AI

Drive Innovation, Productivity, and Revenue

Your AI agents and predictive applications are becoming the backbone of operations, generating revenue, accelerating decisions, and solving problems humans can't tackle alone. As your AI systems gain the agency to make autonomous decisions, reason through complex problems, reflect on outcomes, and sustain memory across interactions, a critical question emerges: Can you run and control them?

Gain unified visibility, context, and control across agents and predictive applications, so you can confidently deploy AI that performs consistently and safely at enterprise scale.

The Fiddler AI Observability and Security platform empowers you to:

- Evaluate and monitor agents and predictive applications throughout their lifecycle.

- Analyze and understand agent behavior, model performance, and associated risks.

- Protect AI applications and enforce policies with guardrails that prevent costly incidents in real time.

- Gain enterprise-wide visibility into AI applications and meet compliance requirements.

One Platform for Testing and Observability, Guardrails, and Governance

Built for the most demanding enterprise environments with the scale and security you need.

Gain Complete Visibility from Development to Production

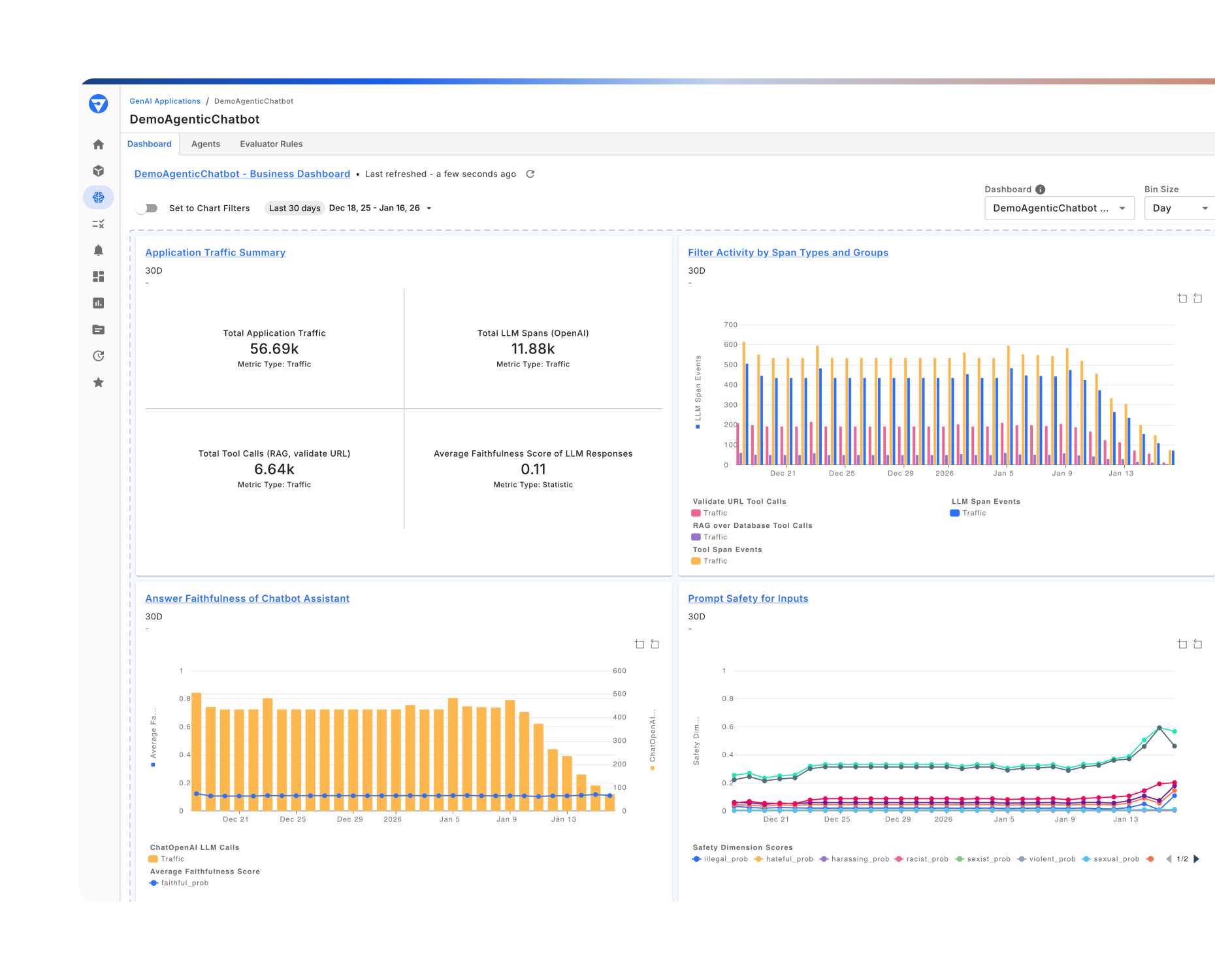

Without proper monitoring, model degradation goes undetected and agentic systems generate thousands of rows of raw event logs, forcing teams to manually parse data to find issues they can't even see. Enterprises need a unified observability solution that continuously evaluates and monitors across the entire lifecycle to ensure high performance across agents and predictive applications.

With Fiddler, you can:

- Evaluate in development and monitor in production to maintain high performance.

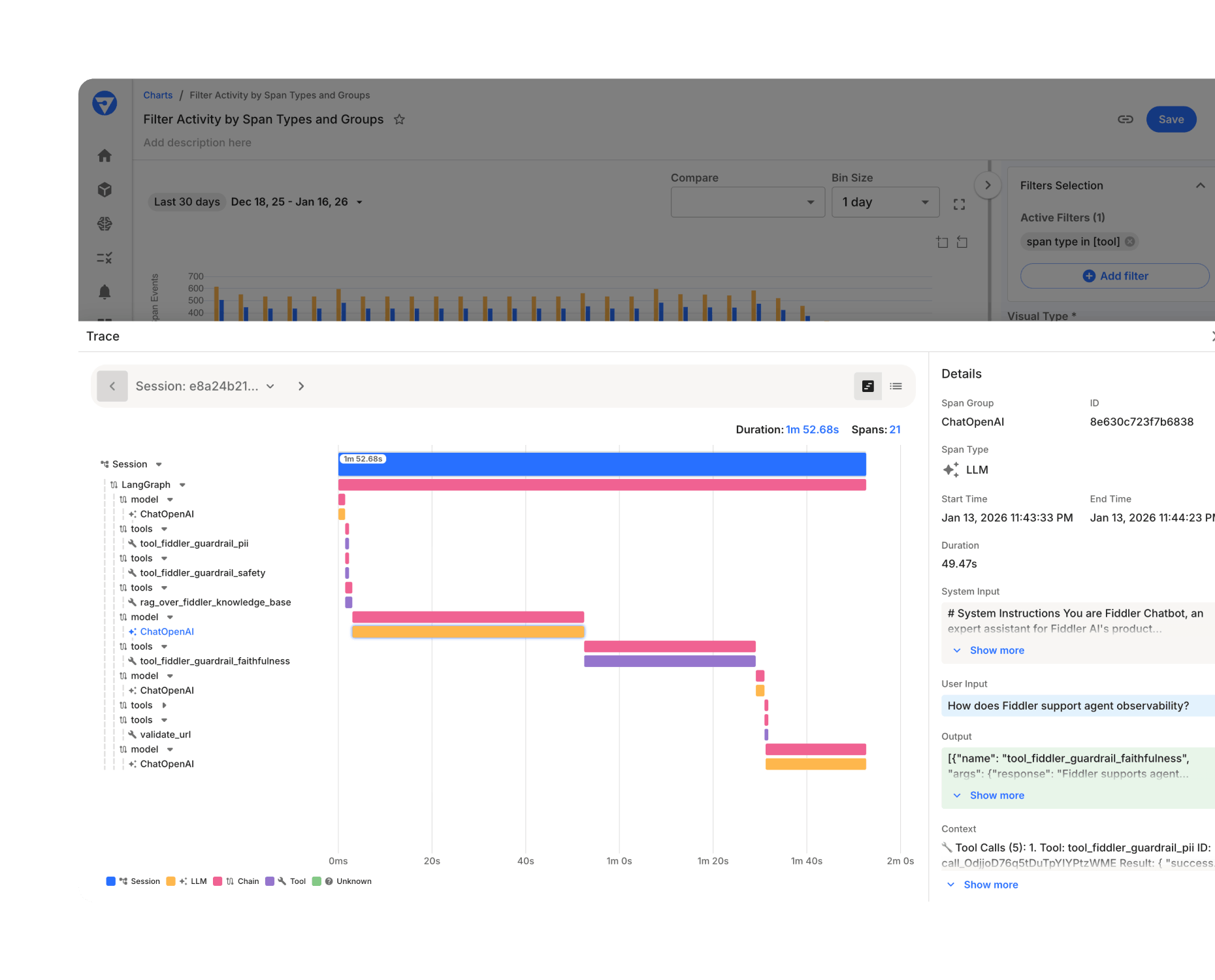

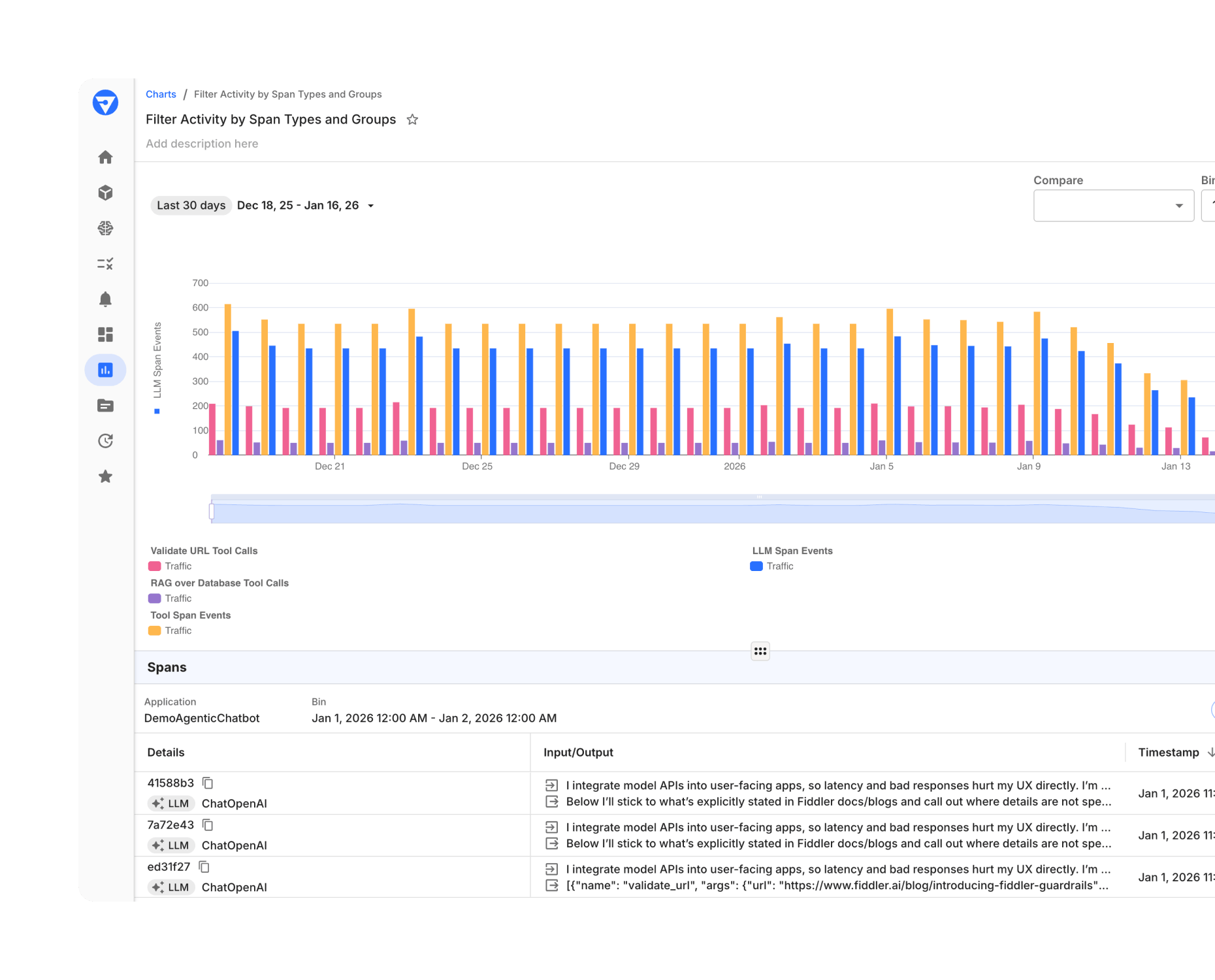

- Visualize rich insights, from aggregate roll-ups to granular details, for agents (application → session → agent → trace → span) and predictive ML (inputs, predictions, explanations).

- Understand agent behavior and model performance with deep diagnostics that reveal root causes of agent failures and model degradation.

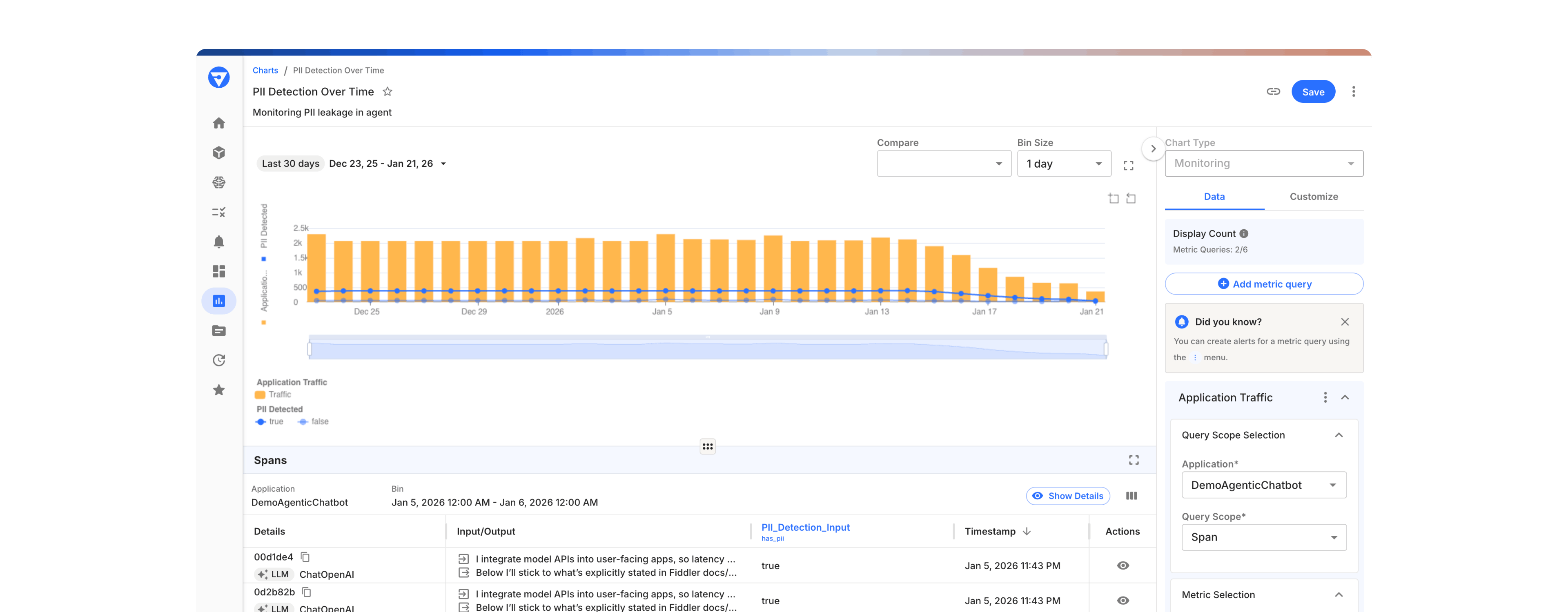

- Select from 100+ out-of-the-box and custom metrics, including hallucination, toxicity, PII/PHI, drift, performance, and industry-specific business KPIs.

- Reduce MTTI and MTTR with real-time alerts that trigger immediate action when performance drops.
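To make the application → session → agent → trace → span hierarchy concrete, here is a minimal Python sketch of rolling span-level events up to any higher level. The `Span` schema, its field names, and the `roll_up` helper are hypothetical illustrations, not Fiddler's data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    """One unit of work inside a trace (hypothetical schema, for illustration only)."""
    application: str
    session: str
    agent: str
    trace: str
    name: str
    latency_ms: float
    error: bool = False


def roll_up(spans, level):
    """Aggregate span count, error count, and total latency at the given level."""
    depth = {"application": 1, "session": 2, "agent": 3, "trace": 4}[level]
    summary = defaultdict(lambda: {"spans": 0, "errors": 0, "latency_ms": 0.0})
    for s in spans:
        # The key is the path from the application down to the requested level.
        key = (s.application, s.session, s.agent, s.trace)[:depth]
        row = summary[key]
        row["spans"] += 1
        row["errors"] += int(s.error)
        row["latency_ms"] += s.latency_ms
    return dict(summary)


# Made-up sample events for a single application.
spans = [
    Span("support-bot", "s1", "planner", "t1", "llm_call", 120.0),
    Span("support-bot", "s1", "planner", "t1", "tool_call", 80.0, error=True),
    Span("support-bot", "s1", "retriever", "t2", "vector_search", 40.0),
    Span("support-bot", "s2", "planner", "t3", "llm_call", 100.0),
]

by_app = roll_up(spans, "application")   # aggregate roll-up
by_agent = roll_up(spans, "agent")       # more granular view
```

The same events feed both views: `by_app` gives one summary row for the whole application, while `by_agent` isolates the planner in session `s1` as the source of the single error.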

Gain Context, Enforce Control Before Costly Risks Happen

Agents make autonomous decisions and predictive models drive critical business outcomes. But without deep insight into AI behavior and enforced guardrails, enterprises can't diagnose failures or prevent harmful actions. This leaves them exposed to compliance violations, reputational damage, and significant financial risk.

With Fiddler, you can:

- Gain deep insights into agent and model behavior with diagnostics that reveal root causes of failures and degradation.

- Detect hallucinations, toxicity, bias, PII/PHI leakage, jailbreaks, and policy violations in real time.

- Intervene, pause, reroute, or escalate actions when agent behavior deviates from expectations.

- Enforce policies, enterprise rules, and approval workflows across agents and tools.

Executive Oversight and Compliance for Enterprise AI

Enterprises deploy AI across multiple teams and applications with no unified view of what's running, who owns it, or how it impacts business outcomes. This lack of centralized management creates accountability gaps and fragmented audit trails, and makes it impossible for executives to connect AI performance to business KPIs or demonstrate compliance to regulators.

With Fiddler, you can:

- Manage all agents and predictive models from a unified executive dashboard that connects AI behavior and performance to business KPIs.

- Record every decision, action, evaluation, and policy outcome for complete accountability.

- Generate the evidence needed for audit trails aligned with enterprise governance and regulatory requirements (GDPR, HIPAA, NAIC, SR 11-7).

- Track ownership, responsibilities, and approvals across all AI applications and teams.

Dedicate Your Efforts to Innovation, Not In-house Systems

Still debating whether to build an internal solution or adopt a unified AI Observability and Security platform?

Building custom observability for agents and predictive applications may seem flexible initially, but maintaining in-house systems diverts engineering resources, accumulates technical debt, and struggles to keep pace with evolving AI frameworks and regulations.

With a unified, purpose-built platform, you can:

- Deploy enterprise-ready observability, guardrails, and governance in weeks instead of years.

- Avoid hidden costs of building, maintaining, and scaling custom infrastructure.

- Monitor agents and predictive applications across tabular, text, and image data without building separate systems.

- Handle growing model volumes and data throughput from gigabytes to petabytes without performance degradation.

- Leverage continuous platform updates for new frameworks and compliance standards instead of perpetual maintenance.

Frequently Asked Questions About AI Observability

What is AI observability?

AI observability is the practice of monitoring, analyzing, and troubleshooting AI models and systems in real time to ensure performance and reliability. It involves tracking data inputs, model behavior, and outputs to detect anomalies and optimize performance.

Why is AI observability important?

AI observability is crucial for detecting performance degradation, mitigating biases, and ensuring transparency. It gives businesses the confidence to deploy more AI models and applications while maintaining trust and compliance.

What are the key features to look for in an AI observability platform?

An AI observability platform should include key features such as metric tracking and visualization, data segmentation, bias detection, root cause analysis, and alerting systems. These capabilities help AI models operate as expected, maintain transparency, and align with business goals while proactively identifying risks.

How can AI observability improve model performance and reliability?

By continuously monitoring model behavior, AI observability identifies errors, biases, and drift early. This allows teams to find areas for improvement quickly and make adjustments, improving model or application accuracy and reliability.
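As one illustration of catching drift early, the sketch below computes the Population Stability Index (PSI), a widely used drift statistic, between a baseline sample and a production sample of a single numeric feature. This is a generic example, not a description of how Fiddler computes drift; the function name, bin count, smoothing constant, and sample data are assumptions chosen for illustration.

```python
import math


def psi(baseline, production, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if it is constant.
    width = (hi - lo) / bins or 1.0

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so empty bins do not cause log(0).
        return [max(c / len(values), eps) for c in counts]

    expected = fractions(baseline)
    actual = fractions(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Made-up data: production values are concentrated in the upper half of the range.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

no_drift_score = psi(baseline, baseline)
drift_score = psi(baseline, shifted)
```

A PSI of zero means the two distributions match bin for bin. A commonly cited rule of thumb treats values above roughly 0.25 as significant drift, though the right alert threshold depends on the feature and the business context.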

What are the best methods for measuring AI impact on business performance?

AI observability lets you measure AI impact by tracking key metrics such as revenue growth, cost savings, customer retention, and operational efficiency, as well as custom metrics specific to your industry or business.