Mitigating unfairness in predictive modeling: what can systems engineering teach us?

This blog series focuses on unfairness that can be obscured when data or the behavior of an AI model is examined one attribute at a time, such as race or gender alone. In Part 1 of the series, we described a real-world example of bias in AI, discussed fairness and intersectional fairness, and demonstrated both with a simple example.

In this blog, we discuss an approach for investigating the causes of unfairness in an existing ML model that has already been determined to be unfair. As a running example, based on a real-world banking use case, we continue to use the credit approval model from Part 1. This model predicts the likelihood that an applicant will default on their credit payments and decides whether to approve or reject their credit application. Thus, the model provides a binary outcome: approve or reject.

We made the following observations in Part 1:

- The model appears to be fair when we consider gender or race individually, having similar performance across all subgroups. However, when we consider gender and race in combination, the model underperforms for certain protected subgroups such as Pacific Islander men.

- It may not be possible to achieve fairness for multiple fairness metrics simultaneously. Hence, it's important to choose the fairness metric that is most appropriate for a given application setting.
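The first observation can be reproduced on a toy table. The sketch below is only an illustration: the records, the group labels `F`/`M` and `A`/`B`, and the helper `approval_rate_by` are hypothetical and are not the credit dataset from Part 1. It uses approval rate as a stand-in for whichever fairness metric is chosen.

```python
from collections import defaultdict

# Hypothetical toy records: (gender, race, approved). Not the real dataset.
records = (
    [("F", "A", 1)] * 8 + [("F", "A", 0)] * 2    # F/A: 8 of 10 approved
    + [("F", "B", 1)] * 2 + [("F", "B", 0)] * 8  # F/B: 2 of 10 approved
    + [("M", "A", 1)] * 2 + [("M", "A", 0)] * 8  # M/A: 2 of 10 approved
    + [("M", "B", 1)] * 8 + [("M", "B", 0)] * 2  # M/B: 8 of 10 approved
)

def approval_rate_by(rows, key):
    """Approval rate per group, where key(row) returns the group label."""
    totals, approved = defaultdict(int), defaultdict(int)
    for row in rows:
        group = key(row)
        totals[group] += 1
        approved[group] += row[2]
    return {group: approved[group] / totals[group] for group in totals}

by_gender = approval_rate_by(records, lambda r: r[0])       # one attribute
by_race = approval_rate_by(records, lambda r: r[1])         # one attribute
by_both = approval_rate_by(records, lambda r: (r[0], r[1])) # intersection

print(by_gender)
print(by_race)
print(by_both)
```

In this constructed table the rates are identical for each gender and for each race, yet they differ sharply across the four gender and race combinations, which is the pattern a single-attribute check would miss.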

Our key objective here is to illustrate a potential framework for systematically investigating the causes of unfairness by adopting a “systems engineering approach.” This term has several connotations, so we note for readers, especially those in the Computer Science community, that in this blog we adopt the definitions and frameworks of systems engineering given in NASA’s Systems Engineering Handbook. The handbook defines systems engineering as follows:

“At NASA, ‘systems engineering’ is defined as a methodical, multi-disciplinary approach for the design, realization, technical management, operations, and retirement of a system. A ‘system’ is the combination of elements that function together to produce the capability required to meet a need. The elements include all hardware, software, equipment, facilities, personnel, processes, and procedures needed for this purpose; that is, all things required to produce system-level results. The results include system-level qualities, properties, characteristics, functions, behavior, and performance. The value added by the system as a whole, beyond that contributed independently by the parts, is primarily created by the relationship among the parts; that is, how they are interconnected. It is a way of looking at the ‘big picture’ when making technical decisions… It is a methodology that supports the containment of the life cycle cost of a system. In other words, systems engineering is a logical way of thinking.”

A systems framework to investigate causes of unfairness

A systems framework includes the following steps:

1. Specify an objective

2. Describe the subsystems

3. Prioritize the subsystems

4. Evaluate and analyze the subsystems (our focus)

A systems perspective typically begins with explicitly specifying an objective. In the context of fairness, this would include questions such as:

- Do we want to test for unfairness in our existing model?

- Do we want to mitigate unfairness having already identified it?

- Or do we want to design a fair model from the get-go?

Critically, the objective itself influences the way we approach the problem before we even start thinking about defining fairness.

In this blog, our objective is to identify the causes of unfairness in this model as a first step toward mitigating them. Once we have a set objective, we must describe and list the subsystems influencing the outcomes of interest.

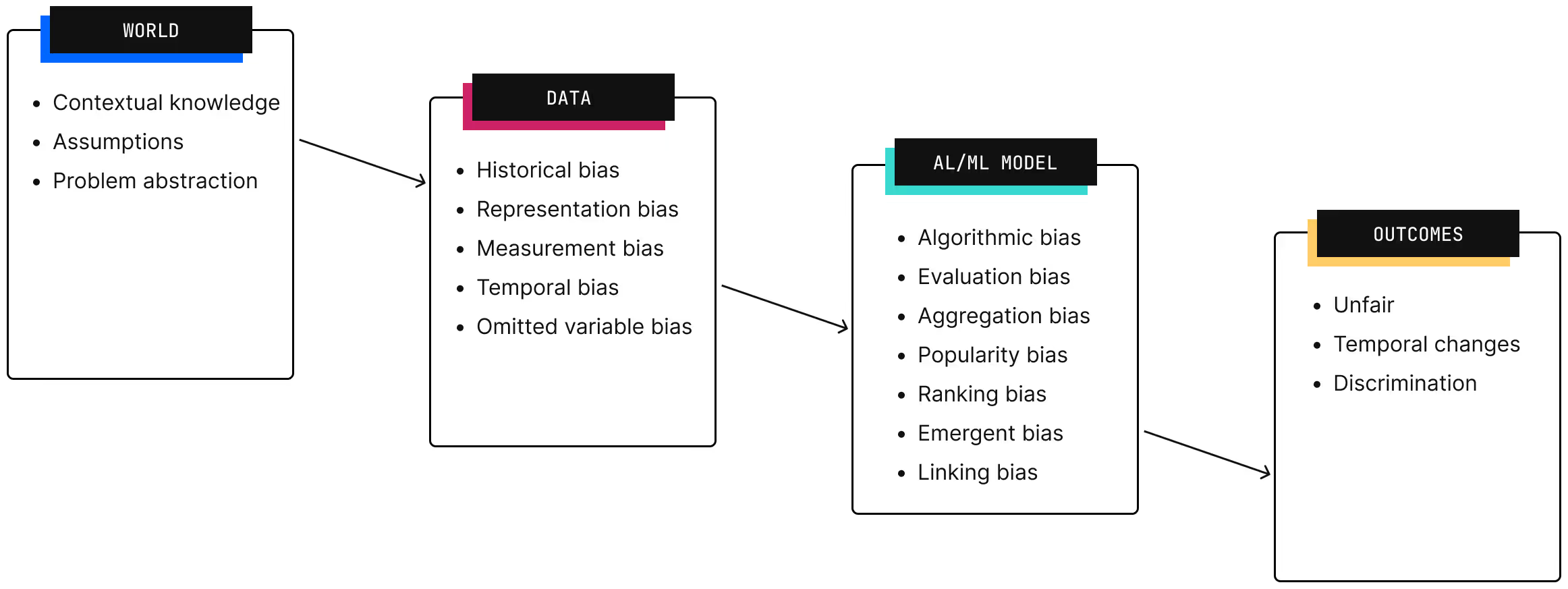

In the context of ML systems, the “parts” of the system include (1) the real-world problem and context, (2) the data, (3) the model, and (4) the outcomes. Each of these “parts” is itself a system with its own assumptions, functions, and constraints; because of this, we consider them to be the subsystems of the bigger ML system. Thus, a systems approach in an ML context means accounting for the behaviors of all the subsystems that ultimately contribute to the outcome of interest (fairness here). Investigating each subsystem can provide interpretability and explainability of the functioning or malfunctioning of a system, which, in the context of fairness, is crucial to designing ethical and responsible AI systems. We will use the credit approval model as a running example to illustrate how to describe each of these subsystems.

After describing the subsystems, it's important to know the order in which the subsystems need to be evaluated. This is crucial for understanding the potential causes that rendered our model unfair. In an ML context, the typical sequence of subsystems is: context -> environment -> data -> model -> outcome.

Lastly, and most importantly, ML and data science domain knowledge is required to pin down specific issues of unfairness and biases within each subsystem. Further, we need to consider the order in which the analysis is conducted to identify the root causes of unfairness in our modeling system.

In the following, we focus on the fourth step of the systems engineering approach, evaluating and analyzing the subsystems. This is because the first three steps, in the context of ML systems, essentially cover the problem the ML system solves, the data used, the model developed, and the model predictions, all of which are summarized in Part 1 of the series.

Evaluating and analyzing the subsystems

This step investigates why the credit model has low intersectional fairness.

Context/environment analysis

We begin by checking that the problem framing and the abstraction do not suffer from any potential biases. Since the chosen problem has two outcomes in the real world, where the credit line is either approved or denied, the framing does not require additional assumptions or abstractions. Thus, we can reasonably assume there are no assumption violations or errors in the problem framing. If, on the other hand, we were modeling market behavior, such as predicting home prices as in the case of Zillow, where the outcome is continuous and prices can swing wildly, we would need to look closely at the assumptions made to abstract the problem and handle its complexity.

Data analysis

Next, we move to the data we have in hand. We do not know exactly the environment in which the data was collected. However, the data has been verified and used by others on an open-source platform such as Kaggle. Here, we rely on the wisdom of the crowds to assume that the data collection process is not faulty. Note that this is an assumption, and it should be communicated to the team so that everyone has a shared mental model of the potential causes of concern.

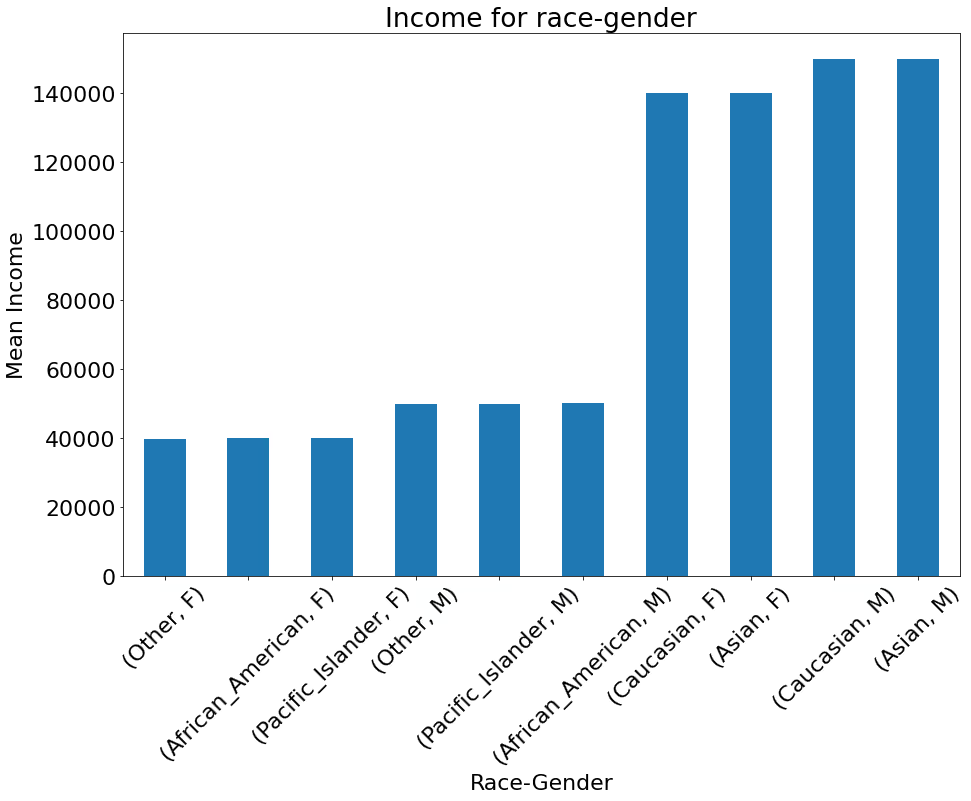

Because we know the model is intersectionally unfair, we evaluate how the data features correlate with race and gender. Fiddler allows us to explore potential biases, correlations, and variations in the feature space of our dataset. For the credit dataset in our running example, the data subsystem analysis yields the following interesting results:

- Average income does not differ significantly across genders, but it does across races.

- Intersectional analysis reveals a bimodal distribution of income across race and gender subgroups.

- The number of data samples is imbalanced across the intersectional subgroups defined by race and gender.

While these findings are important, they alone do not provide enough evidence for a causal claim that such correlations among data features cause unfairness in the model. They do, however, have the potential to be a factor. For instance, if income were a crucial factor in generating the target variable, the model could be learning to be biased against certain subgroups.
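The kind of check behind these findings can be sketched in a few lines. This is a minimal illustration only: the rows, the income values, and the helper `summarize` are hypothetical, and Fiddler's own tooling is not shown. It compares sample counts and mean income by gender, by race, and by their intersection.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rows: (gender, race, income in thousands). Values are invented.
rows = [
    ("F", "A", 60), ("F", "A", 64), ("F", "A", 62),
    ("F", "B", 40), ("F", "B", 44),
    ("M", "A", 58), ("M", "A", 66), ("M", "A", 62), ("M", "A", 62),
    ("M", "B", 42),
]

def summarize(rows, key):
    """Return {group: (sample_count, mean_income)} for groups given by key(row)."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[2])
    return {group: (len(vals), mean(vals)) for group, vals in groups.items()}

by_gender = summarize(rows, lambda r: r[0])
by_race = summarize(rows, lambda r: r[1])
by_both = summarize(rows, lambda r: (r[0], r[1]))

for name, table in [("gender", by_gender), ("race", by_race),
                    ("gender x race", by_both)]:
    print(name)
    for group, (n, avg) in sorted(table.items()):
        print(f"  {group}: n={n}, mean income={avg:.1f}")
```

On this invented table the income gap is small between genders and large between races, and the intersectional subgroups have very different sample counts. Summary statistics like these flag candidate causes; they do not by themselves show that the model uses them.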

Modeling analysis

In supervised learning, target labels are provided to the ML model so that it can learn from them and predict new cases. How these target labels are generated, and where they come from, is an important consideration in the modeling process: it determines how well the model performs and whether it does so fairly.

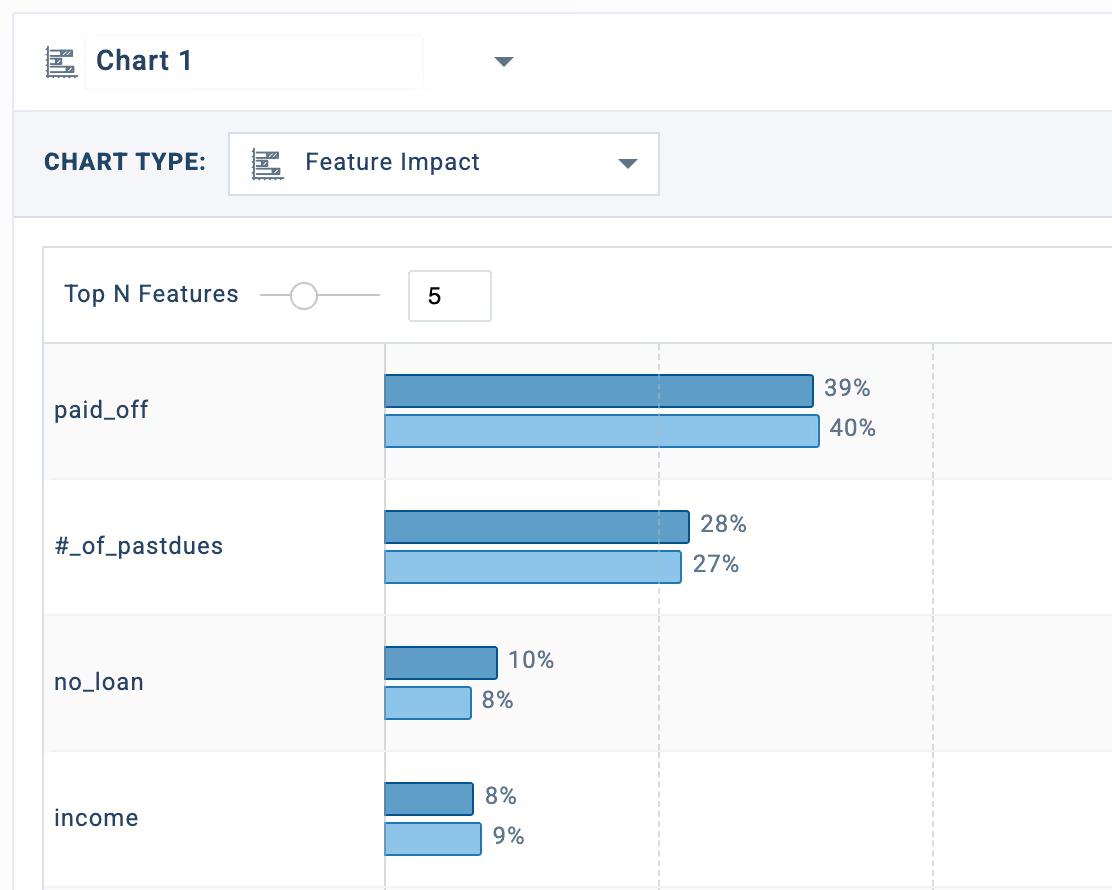

For our example, we generated the target label of accept (1) or reject (0) for every individual based on their credit behaviors and income. In reality, the impact of various features on target labels may be unknown, because the explicit functional mapping between input features and target labels is often not known. Thus, evaluating the impact of each feature on the predictions can provide a deeper understanding of the presence of model bias.

Fiddler allows us to evaluate feature impact on model predictions. To do so, we leverage the randomized feature ablation algorithm, which is part of Fiddler’s core functionality. For this example, we observe that income has a 9% impact on the predictions! This is a significant contribution from a single feature to the outcomes, implying that the intersectional income disparity has crept into the model.
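To make the idea of feature ablation concrete, here is a minimal sketch of one generic variant: shuffle a single feature across rows and measure how much the model output changes on average. It is not Fiddler's implementation or API, whose details are not described here; `toy_model`, `ablation_impact`, the feature weights, and the random data are all assumptions made for illustration.

```python
import random

def toy_model(row):
    """Hypothetical stand-in scorer; the weights are invented."""
    return 0.9 * row["credit_score"] + 0.1 * row["income"]

def ablation_impact(model, rows, feature, n_rounds=20, seed=0):
    """Mean absolute change in model output when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = [model(r) for r in rows]
    total = 0.0
    for _ in range(n_rounds):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)  # breaks the link between this feature and each row
        for base, row, value in zip(baseline, rows, shuffled):
            ablated = dict(row, **{feature: value})  # copy with one feature replaced
            total += abs(model(ablated) - base)
    return total / (n_rounds * len(rows))

# Hypothetical inputs: both features drawn uniformly from [0, 1).
data_rng = random.Random(42)
rows = [{"credit_score": data_rng.random(), "income": data_rng.random()}
        for _ in range(200)]

raw = {f: ablation_impact(toy_model, rows, f) for f in ("credit_score", "income")}
share = {f: v / sum(raw.values()) for f, v in raw.items()}  # normalized impact
print(share)
```

In this toy setup income receives the smaller share because its weight in `toy_model` is smaller. With a real model, a non-trivial share for a feature that is correlated with protected attributes is the signal worth following up.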

Metrics and time subsystems

Because we have established the source of our bias within the data subsystem, we do not further investigate the metrics and time subsystems in this example. However, suppose the dataset offered multiple candidate features, some less biased than others, for evaluating the likelihood of default. In that case, the model subsystem would require a closer look at the functional mapping it learns between the features and the outcomes, with a view to possible improvement. Furthermore, if we have production data over a significant period, we may also need to evaluate the effect of shifts in data distributions on model performance and on fairness.
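As one possible way to quantify such a shift, the sketch below computes the population stability index (PSI), a common drift statistic, between a training sample and a production sample of a single feature. The choice of PSI, the bin edges, the helper `psi`, and the income values are all assumptions for illustration; no specific drift metric is prescribed here.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population stability index of `actual` relative to `expected`.

    `edges` are shared, sorted bin boundaries; eps avoids log(0) for empty bins.
    """
    def bin_fractions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # bin index = edges at or below v
        return [max(c / len(values), eps) for c in counts]

    p, q = bin_fractions(expected), bin_fractions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))

# Hypothetical income samples (in thousands) for one intersectional subgroup.
training = [30, 40, 50, 60, 70] * 20
production = [50, 60, 70, 80, 90] * 20
edges = [45, 65, 85]

no_shift = psi(training, training, edges)  # identical samples
shift = psi(training, production, edges)   # production incomes moved upward
print(no_shift, shift)
```

Computing a statistic like this per intersectional subgroup, rather than only on the pooled data, may help keep a shift that affects a single subgroup from being averaged away.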

Conclusion

We have illustrated a systems-perspective framework for evaluating model unfairness issues. We demonstrated how the data subsystem had correlated features with the potential to cause fairness issues. However, to make causal claims, a systems perspective is required, in which we investigate whether feature correlations impact model predictions; in our example, they do. This enables the investigator to confidently conclude that correlated data features are being leveraged by the model in a manner that significantly influences the model outputs. Completing the circle, we can then causally claim that the intersectional fairness issue in our model is attributable to the influence that income disparities across intersectional subgroups of gender and race have on the model’s approval or rejection of credit applications. Such insight was possible because the systems approach analyzes each subsystem, its inherent assumptions, and the potential causes of concern. We hope such an approach empowers us to understand and explain AI responsibly and ethically.