What Is MLOps and Why Do We Need It?

5

Min Read

Machine learning (ML) is an exciting space with many companies applying this technology to a variety of issues with great success. However, most data scientists report that 80% or more of their projects stall before deploying an ML model. Why is this happening and how does this problem get solved? Research has shown that the lack of structure and formalized processes around the machine learning lifecycle are mostly to blame. The answer to this problem is MLOps.

What is MLOps and why do we need it?

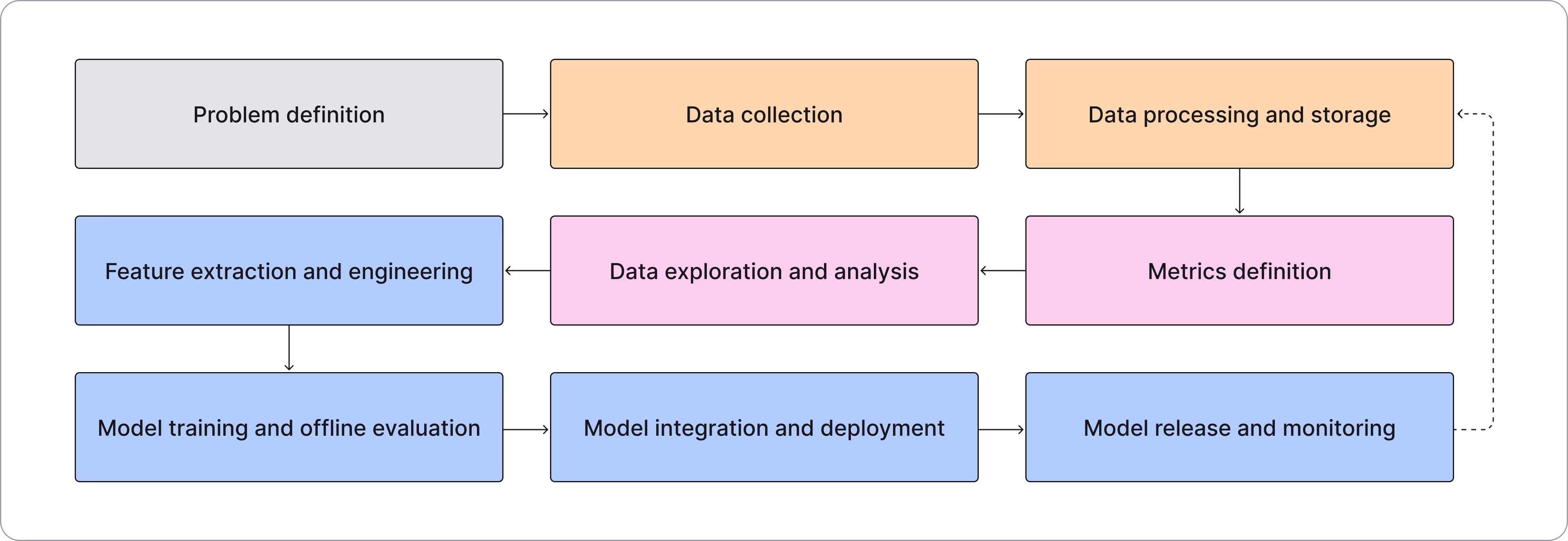

MLOps, also known as machine learning operations, is a set of practices that detail how to roll out machine learning models, monitor them, and retrain them in a structured and segmented manner. There are nine stages in the MLOps lifecycle:

- Problem definition - When developing models, the first step is to identify the problem that will be solved with AI.

- Data collection - After identifying the problem, next comes the data collection phase. This data will be used to train the models and should come from an appropriate source like user behavior.

- Data processing or storage - In order to effectively train your models, you will need a large amount of data. Data warehouses or data lakes are typically used for storage. This data can be consolidated and cleaned in batches or as a stream, depending on which suits your purposes best.

- Metrics definition - In order to measure the quality of the models and determine if they are successful in solving the problem identified in the first stage, your team needs to agree on which metrics you will use.

- Data exploration - The next phase involves data scientists developing hypotheses about what modeling techniques will be most useful based on data analysis.

- Feature extraction and engineering - After developing hypotheses, data scientists will next determine which aspects of the data will be used as inputs to the models. These aspects are known as features. For example, if the problem being solved is a loan approval algorithm for financial services, features of the models might include the credit score of the users. Data scientists will also have to decide how to generate features (in addition to which aspects are selected to be features) and engineers must be involved to continually update features as new data is collected.

- Model training and offline evaluation - As models are built, trained, and evaluated, data scientists will choose the approach with the best performance. Approximately 80-90% of the data that is collected and processed will be used to train models, while 20–30% will be reserved for evaluation.

- Model integration and deployment - After the models are trained and evaluated, the next step is to integrate them into the product and then deploy them, typically within a cloud system like AWS. Model integration may involve building new services and hooks so that your product can retrieve the model predictions that will drive your end-user outcomes. Going back to the loan application example, once the model for loan approval is working, the website will need a way to access the algorithm's assessment for who should or should not be approved.

- Model release and monitoring - Once deployed, models need to be closely monitored to make sure there are no issues like data drift or model bias. An additional benefit of model monitoring is that you can identify how the model can be improved with retraining on new data.

What is MLOps and DevOps?

When reading about MLOps, you’ll notice there is often discussion about DevOps in the same articles. That is because in a way, MLOps evolved from DevOps. In the same way that DevOps was developed to help operationalize software development, MLOps has become a way to operationalize machine learning. However, machine learning is fundamentally different from software, requiring its own specific process. They may share similarities, but the lifecycle steps are unique to each.

What problems does MLOps solve?

Like DevOps, MLOps helps to improve the quality of production models, while incorporating business and regulatory requirements and model governance. A few of the problems that MLOps solves are:

- Inefficient workflows - MLOps provides a framework for managing the machine learning lifecycle effectively and efficiently. By matching business expertise with technical prowess, MLOps creates a more structured, iterative workflow.

- Failing to comply with regulations - Machine learning is a fairly new field, and regulatory bodies continue to change their requirements and update their guidelines. MLOps takes ownership of staying in compliance and up-to-date with shifting regulations, such as those found in banking.

- Bottlenecks - With complicated, non-intuitive algorithms, bottlenecks can often happen. MLOps facilitates collaboration between operations and data teams, helping to reduce the frequency and severity of these types of issues. The collaboration that MLOps promotes leverages the expertise of previously siloed teams, helping to build, test, monitor, and deploy machine learning models more efficiently.

What are the key outcomes of MLOps?

Ultimately, the key outcome of MLOps is a high quality model that you can rely on to do what you need it to do. MLOps also gives you the ability to observe changes that are happening with your models, so that negative impacts can be caught and adjusted before going into production. There are, of course, many benefits of MLOps, but these two key outcomes are the most important for ML success.

What is MLOps technology?

MLOps technology encompasses any tool that helps with any stage of the machine learning lifecycle. MLOps tools like Fiddler give insight into the black box of machine learning, shedding light on why your algorithm is making certain decisions. Your MLOps and Data Science teams can use Fiddler to develop responsible AI using explainable AI.

Try Fiddler to understand how we enable your MLOps lifecycle.