How do you check the performance of a model?

7

Min Read

Model monitoring is key to having effective and responsible AI systems. Proper ML monitoring will allow you to better understand and explain the operational health of your models, ultimately confirming everything is running as intended.

There are many moving parts when it comes to checking the performance of your model, like measuring the quality of your data, watching for data drift and outliers, and ensuring your model runs smoothly throughout development and after deployment. In this article, we’ll be focusing on some of the more commonly used monitoring metrics, their importance, and how you can use these metrics and other model monitoring tools to get the most out of your ML models.

Why do we need model monitoring?

Leaving machine learning unchecked can have many unintended consequences, ranging from hidden model bias to under-representation of certain groups. Proper monitoring and analyzing techniques allow MLOps teams to catch these problems before they cause further issues.

A look at the latest in AI news makes the importance of model monitoring clear, as you can usually find examples of machine learning going wrong. For example, consider the story about the Microsoft chatbot that learned how to be sexist and racist.

Microsoft released an AI chatbot named “Tay” in 2016 on Twitter. Within 24 hours of its release, things quickly spiraled out of control. Tay was designed to mimic the way people chatted with her. Regrettably, this meant that Tay picked up some unsavory traits and values from the public data she was built on, like supporting genocide and making racist comments.

This is one of many examples that show how vital it is to monitor all aspects of a model’s performance, from the quality of the data and training going into the model to the final output produced. But how do you monitor the performance of a production model, exactly, to avoid these issues? Let’s get into some model monitoring best practices.

How do you measure the performance of a machine learning model?

An AI observability platform provides control and insight into ML model performance, allowing teams to measure how well their model is doing and understand which features are driving this performance. Think of it as a way to avoid (or successfully resolve) problems that may arise at any point during the life of your model.

Because ML models serve a wide range of industries and use cases, there are a variety of metrics you can use to measure model performance for your team’s unique needs. In this blog, we will examine classification metrics specifically, as they are one of the most common across all industries. This is only one way to measure performance, but it’s a good starting point for understanding how model monitoring works.

But before getting into the basics of classification metrics, you first need to understand the confusion matrix.

The confusion matrix in model performance monitoring

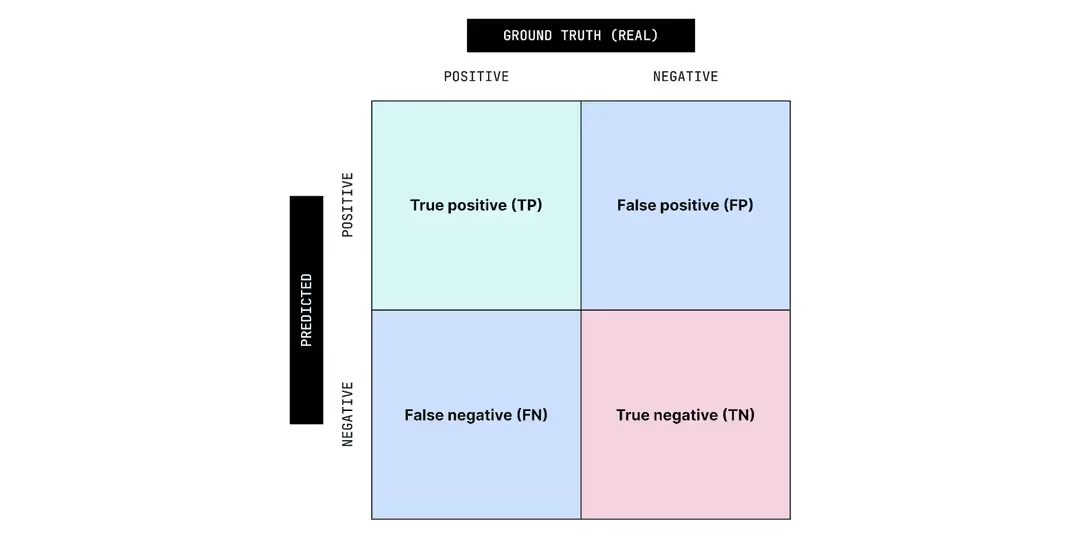

Below is a common illustration of the confusion matrix that visualizes classifications of predictions within a model. Along one side, you have the “ground truth” or what the reality is, and on the other side, you have what is predicted. The below example uses a simple binary classification (meaning the predictions are either true or false).

The matrix can be expanded depending on the number of outputs being monitored since not all model outputs can be classified as true or false. The four classes of prediction we have in this illustration are:

- True Positive (TP) - The prediction was positive and accurate.

- False Positive (FP) - The prediction was positive and inaccurate.

- False Negative (FN) - The prediction was negative and inaccurate.

- True Negative (TN) - The prediction was negative and accurate.

Let’s use a familiar and basic example to show this in action. Imagine a model is built for a business to determine if a customer will churn (which means the customer stops using a business’s services or products). If the model predicts a customer will churn, but they do not, that would be a false positive. If that same customer ends up churning instead, the prediction would be a true positive.

How do you measure the performance of a model using the confusion matrix?

You can classify ML model outputs according to a confusion matrix and then use that information to calculate performance metrics. Some of the more commonly used metrics for classification include:

Accuracy - Accuracy determines the ratio of accurate predictions to all predictions. Sticking with the churn example, the accuracy metric would show how well the model can make accurate predictions overall as to whether or not a customer will churn.

$$Accuracy = {TP + TN\over TP + TN + FP + FN}$$

Precision - Precision determines the ratio of true positives to the total predicted positives. This would show how well the model can predict which customers will churn compared to the number of those predicted to churn.

$$Precision = {TP\over TP + FP}$$

Negative Predictive Value - This metric determines the ratio of true negatives to the total predicted negatives. This would show how well the model can predict which customers will not churn compared to the number of those predicted not to churn.

$$Negative Predictive Value = {TN\over TN + FN}$$

Sensitivity/Recall - Sensitivity and recall are used interchangeably to describe this metric, which determines the ratio of true positives to the total ground truth positives. This would show how well the model can predict which customers will churn compared to the number of those who truly churn in the real world.

$$Sensitivity/Recall = {TP\over TP + FN}$$

Specificity - Specificity determines the ratio of true negatives to the total ground truth negatives. This would show how well the model can predict which customers will not churn compared to the number of those who truly don’t churn in the real world.

$$Specify = {TN\over TN + FP}$$

The metric you use depends on which error is more undesirable for your case—the false positives or false negatives.

Continuing with the customer churn example, let’s say you have 1,000 customers. 900 of them are not planning to churn, but your model inaccurately predicts that 70 of those 900 will. These are the false positives, as 70 model predictions of people churning (the “positive” in this case) are inaccurate. Your team would most likely give those 70 customers unnecessary extra attention to prevent them from leaving.

The other 100 customers are planning to churn, although your model predicts that 30 of them will not churn. These are the false negatives, as 30 model predictions of customers not churning (the “negative” in this case) are inaccurate. Those 30 customers would likely not receive the special treatment needed to convince them to stay, and you would lose those 30 customers.

So, which error is more undesirable to your team? Would you rather:

- Waste the energy catering to 70 customers who were never planning to churn, or;

- Lose 30 customers who were neglected?

Most would agree that losing customers is more undesirable, meaning false negative errors are more significant to your success as a business in this scenario. Therefore, avoiding a metric that uses false negatives is the way to go, such as the precision metric.

This example offers a mere glimpse of how you can measure ML model performance. As you can see, even this brief look at a sample of classification metrics is complex and requires numerous observations and calculations. This is why the best way to monitor model performance is with an AI observability platform.

Model monitoring tools in MPM platforms

A quality MPM platform will allow you to observe performance, drift, outliers, and errors, and provide explanations for model decisions. It should alert stakeholders to any potential issues and why those issues are occurring. And the platform capabilities shouldn’t stop there—they should cover the entire MLOps lifecycle, from validating and training the model to analyzing outputs. Ultimately, it’s about ensuring you are building high-performing, responsible AI.