Customers

You’re in good company. Data science and AI teams across industries build responsible AI solutions with Fiddler.

Integral Ad Science Scales Transparent and Compliant AI Products with AI Observability

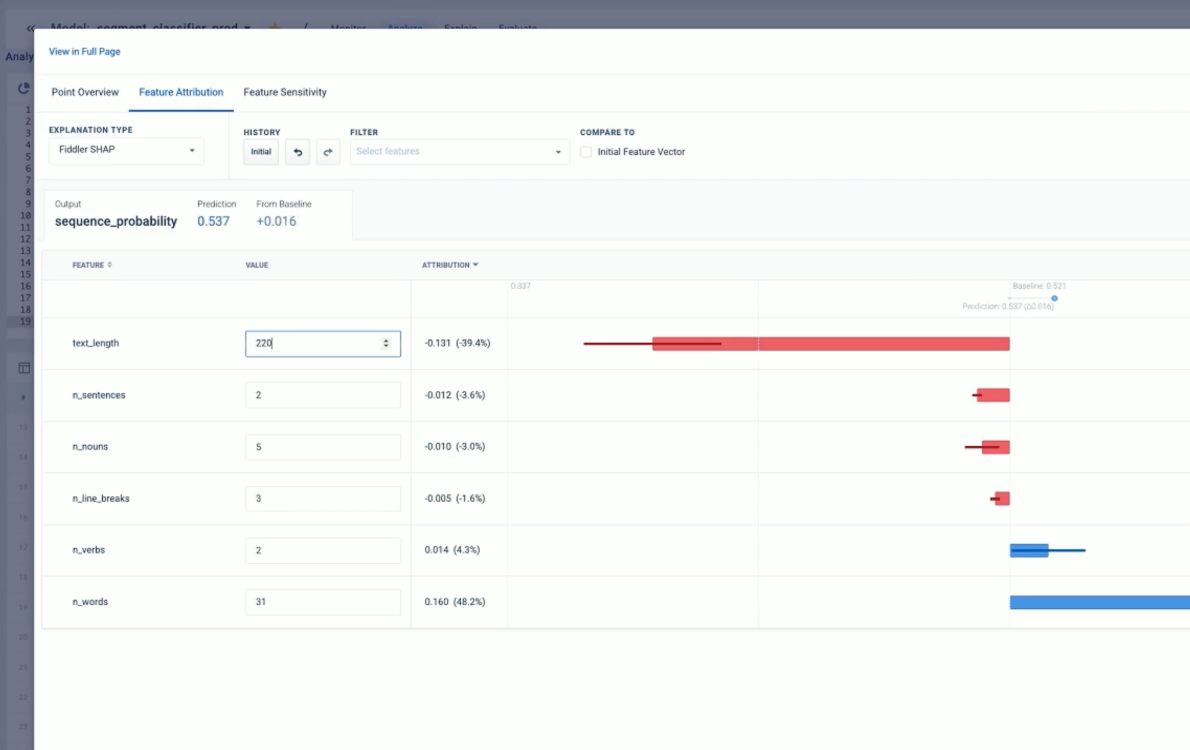

With Fiddler, IAS has experienced significant benefits:

- Reduction in monitoring operation costs due to increased efficiency

- Proactive regulatory compliance and easier demonstration of responsible AI practices to stakeholders

- A unified, standardized view of rich model insights and metrics for improved team collaboration and audits


At IAS, our entire product suite is powered through AI, machine learning, and data-driven decision making. The level of observability that the Fiddler AI Observability platform brings to our modeling stack has been extremely helpful in proactively monitoring and actioning on any gaps in the data coverage or any drifts in the input data, concept, or prediction that could impact the quality of our client-facing solutions.

Kumaresh Singh

SVP Data Science, IAS

Featured Resources

- Product Webinar: Visibility, Context, and Control in Enterprise Agentic Observability

- Blog: Agentic Observability: A 4-Stage Walkthrough

- Guide: 5 Critical Lessons for Production-Ready AI Agents

- Video: Build High Performing AI Agents with Fiddler Agentic Observability

- Blog: From Evaluations to Production: End-to-End Observability for the Agentic AI Lifecycle

- Blog: Agentic Observability Starts in Development: Build Reliable Agentic Systems