In the first part of this series, we explored the power of Fiddler AI and DataStax when used together to enhance RAG-based LLM applications and ensure their correctness, safety, and privacy. In this second part, we’ll dive deeper into the technical integration required to bring Fiddler’s AI Observability platform for generative AI (GenAI) to monitor and optimize the performance, accuracy, safety, and privacy of your RAG-based LLM applications.

As a refresher, DataStax’s Astra DB is a Database-as-a-Service (DBaaS) that supports vector search, providing both real-time vector and non-vector data. This allows you to quickly build accurate GenAI applications and deploy them into production. Built on Apache Cassandra®, Astra DB adds real-time vector capabilities that can scale to billions of vectors and embeddings; as such, it’s a critical component in a GenAI application architecture.

The Fiddler AI Observability platform enables you to monitor and protect your LLM applications with industry-leading LLM application scoring. This scoring is powered by proprietary, fine-tuned Trust Models that assess prompts and responses across trust-related dimensions, like faithfulness, legality, PII leakage, jailbreaking, and other factors to monitor and detect LLM issues. These Trust Models enable you to not only detect issues in real-time but also allow you to perform offline diagnostics, with the Fiddler platform, to identify the root cause of the issues.

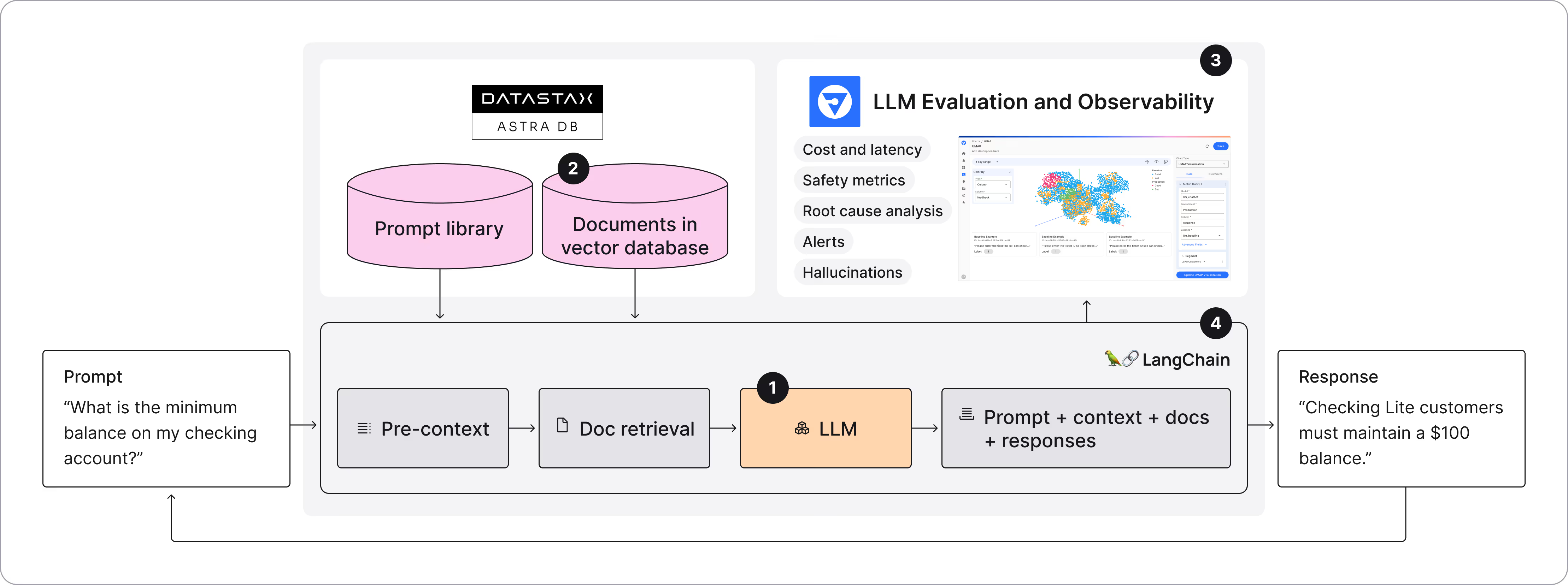

The architecture below shows how DataStax’s Astra DB and Fiddler’s AI Observability are integrated with the spans of your RAG-based LLM applications.

As we delve deeper into this integration, we will share the code snippets and technical integration details needed to wire up your DataStax-powered LLM applications with the Fiddler AI Observability platform. For this technical deep dive, we have instrumented DataStax’s own publicly available WikiChat application.

Use Case: AI Observability for the DataStax WikiChat RAG Chatbot

WikiChat is an example RAG-based LLM application offered for free by DataStax and is meant to be used as a starter project that illustrates how to create a chatbot using Astra DB. It's designed to be easy to deploy and use, with a focus on performance and usability.

The application retrieves the 10,000 most recently updated Wikipedia articles to enhance its RAG-based retrieval, providing helpful and timely answers on topics currently being updated.

How to Onboard WikiChat to Fiddler

For the purposes of this technical integration, we will be publishing our WikiChat LLM trace data to our Fiddler environment. The trace data includes the prompt, the prompt context, the document data retrieved from Astra’s vector DB during RAG retrieval, and the application response. Other metadata can also be passed in for tracking purposes like the LLM foundation model being used, the duration of the trace, the user’s session ID, and more.

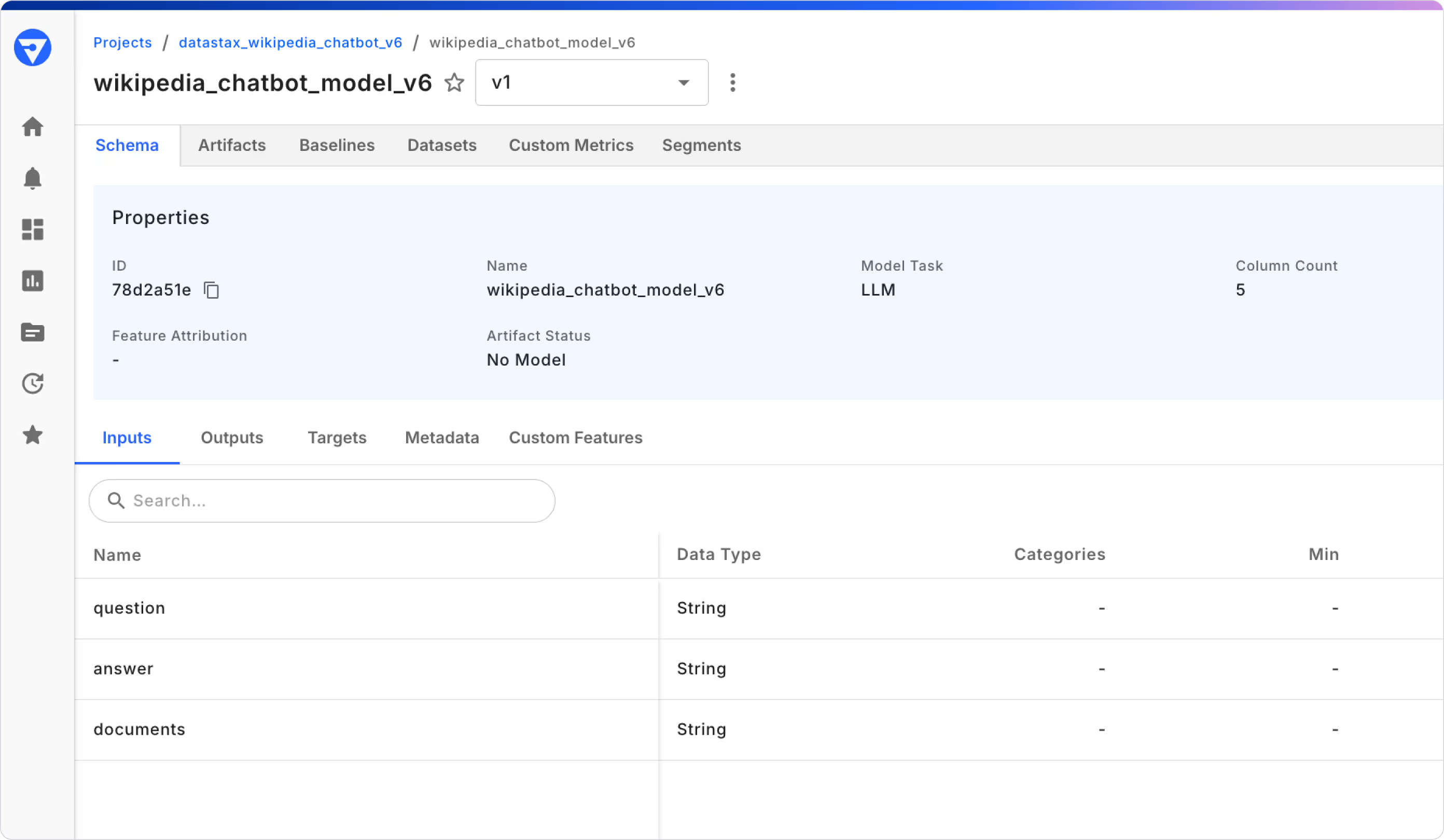

Before publishing trace data to Fiddler, we first need to establish (or “onboard”) the WikiChat chatbot to our Fiddler environment. This onboarding process allows Fiddler to understand the “schema” of our trace data. Once onboarded, Fiddler will have an understanding of the data from our LLM application data, as shown in the LLM application card below.

The code snippets below illustrate how we used the Fiddler Python client to onboard the WikiChat LLM application within the Fiddler environment.

First, we must install the Fiddler Python client.

!pip install -q fiddler-client

import numpy as np

import pandas as pd

import time as time

import fiddler as fdlAnd connect that client to DataStax’s Fiddler environment.

URL = 'https://datastax.trial.fiddler.ai'

TOKEN = 'BNt0d3zQaik_I8D6AsMjO_kWOXVly4HSGK_blah_blah'

fdl.init(

url=URL,

token=TOKEN

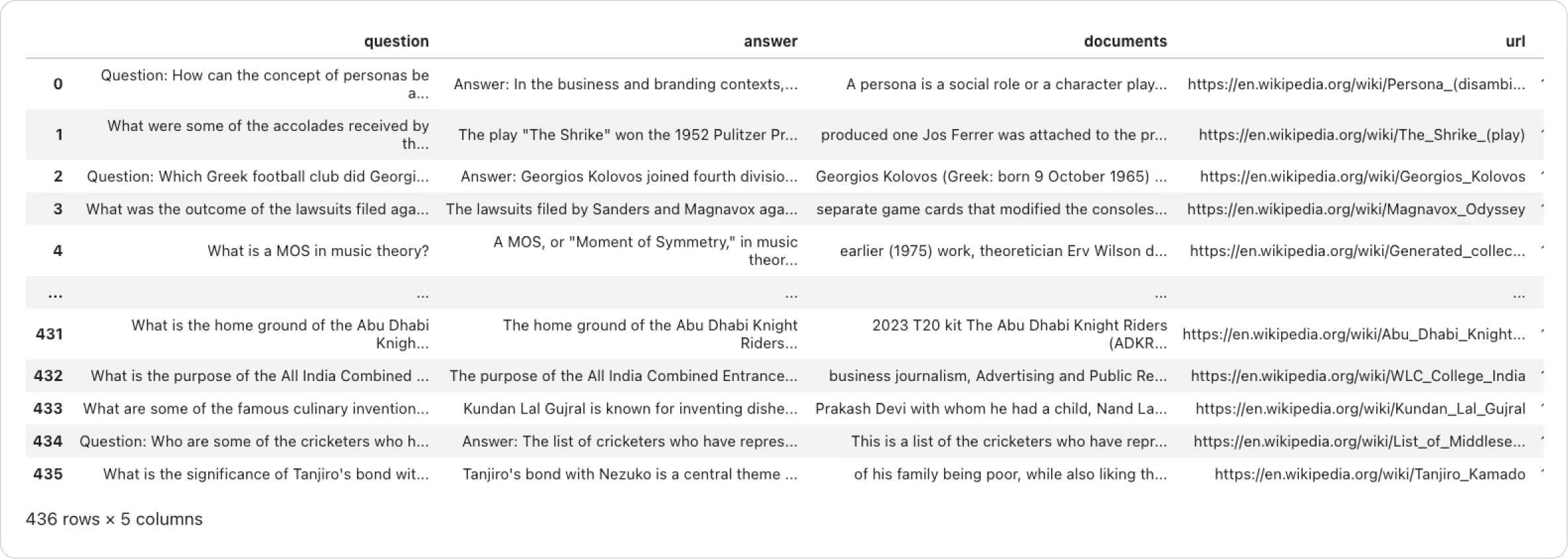

)Then, we load a dataframe with sample trace data that the Fiddler client will use to inspect the columns and data types of our WikiChat trace data. This sample data allows Fiddler to understand the data types of each column in our trace data.

PATH_TO_SAMPLE_CSV = 'https://docs.google.com/spreadsheets/d'

sample_df = pd.read_csv(PATH_TO_SAMPLE_CSV)

sample_df

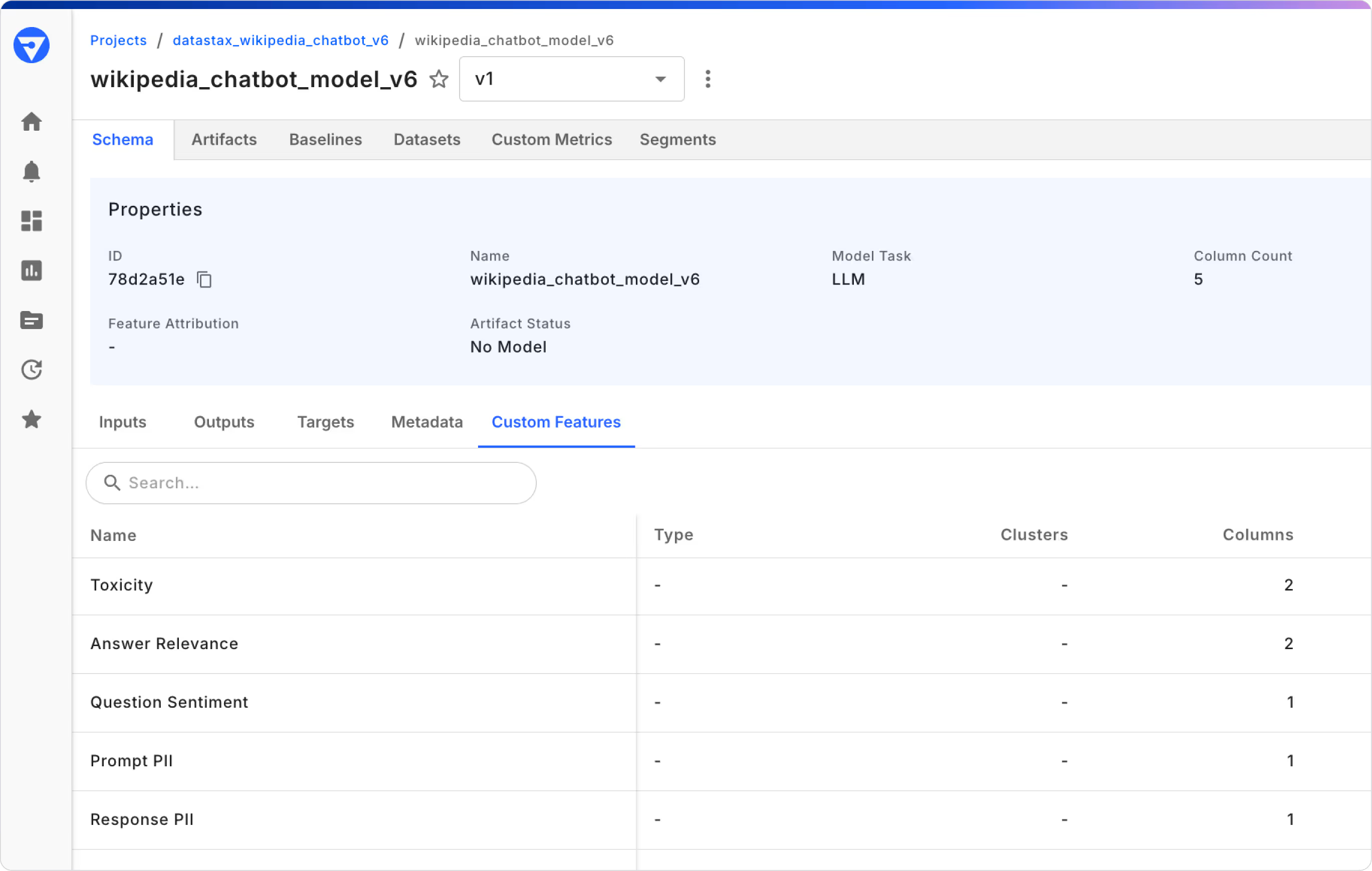

Next, we’ll define the Fiddler Trust Scores we want to use for monitoring the behavior of our WikiChat chatbot. Below, we instruct Fiddler to generate embedding vectors for our unstructured prompts and responses. Additionally, we configure Fiddler to score our WikiChat traces for toxicity, answer relevance, sentiment, PII-leakage, and prompt safety. A full list of Fiddler Trust Scores can be found on Fiddler’s documentation site.

fiddler_trust_scores = [

fdl.Enrichment(

name='Enrichment Prompt Embedding',

enrichment='embedding',

columns=['question'],

),

fdl.TextEmbedding(

name='Prompt TextEmbedding',

source_column='question',

column='Enrichment Prompt Embedding',

n_tags=10

),

#response enrichments

fdl.Enrichment(

name='Enrichment Response Embedding',

enrichment='embedding',

columns=['answer'],

),

fdl.TextEmbedding(

name='Response TextEmbedding',

source_column='answer',

column='Enrichment Response Embedding',

n_tags=10

),

fdl.Enrichment(

name='Toxicity',

enrichment='toxicity',

columns=['question', 'answer'],

),

fdl.Enrichment(

name = 'Answer Relevance',

enrichment = 'answer_relevance',

columns = ['question', 'answer'],

config = {

'prompt' : 'question',

'response' : 'answer',

},

),

fdl.Enrichment(

name='Question Sentiment',

enrichment='sentiment',

columns=['question'],

),

fdl.Enrichment(

name='Prompt PII',

enrichment='pii',

columns=['question'], # one or more columns

allow_list=['fiddler'], # Optional: list of strings that are white listed

score_threshold=0.85, # Optional: float value for minimum possible confidence

),

fdl.Enrichment(

name='Response PII',

enrichment='pii',

columns=['answer'], # one or more columns

allow_list=['fiddler'], # Optional: list of strings that are white listed

score_threshold=0.85, # Optional: float value for minimum possible confidence

),

fdl.Enrichment(

name='FTL Safety',

enrichment='ftl_prompt_safety',

columns=['question', 'answer'],

),

]We then create a model_spec object which defines the schema of our WikiChat application within Fiddler, and…

model_spec = fdl.ModelSpec(

inputs=['question', 'answer', 'documents'],

metadata=['url', 'timestamp'],

custom_features = fiddler_trust_scores

)

model_task = fdl.ModelTask.LLM

timestamp_column = 'timestamp'Onboard (or "create") the application within Fiddler by calling model.create().

MODEL_NAME = 'wikipedia_chatbot_model_v6'

model = fdl.Model.from_data(

source=sample_df,

name=MODEL_NAME,

project_id=project.id,

spec=model_spec,

task=model_task,

event_ts_col=timestamp_column

)

model.create()Once complete, the application will appear in the Fiddler environment, with the Trust Scores we specified available under the "Custom Features" tab.

How to Publish LLM Trace Data to the Fiddler AI Observability Platform

The WikiChat chatbot is built using next.js. In Next.js 14, you can use the API Route, which runs on the server side, to ingest chat events into Fiddler. To do this, create a file in the api/fiddler_ingestion/route.ts folder and place the following code blocks in it.

This will involve importing necessary modules from Next.js for handling requests and responses, along with Axios for making HTTP requests and handling responses.

import { NextRequest, NextResponse } from "next/server";

import axios, { AxiosInstance, AxiosResponse } from "axios"; Extracting environment variables for Fiddler API.

const { FIDDLER_MODEL_ID, FIDDLER_TOKEN, FIDDLER_BASE_URL } = process.env;Enum to define the environment types and Set the current environment to production.

enum EnvType {

PRODUCTION = "PRODUCTION",

PRE_PRODUCTION = "PRE_PRODUCTION",

}

const environment = EnvType.PRODUCTION;Function to create an authenticated Axios session with the Fiddler API.

function getAuthenticatedSession(): AxiosInstance {

return axios.create({

headers: { Authorization: `Bearer ${FIDDLER_TOKEN}` },

});

}Function to publish or update events to the Fiddler API.

async function publishOrUpdateEvents(

source: object, // The event source data

environment: EnvType, // The environment type (production or pre-production)

datasetName?: string, // Optional dataset name

update: boolean = false, // Flag to determine if the event should be updated or created new

): Promise<any> {

const session = getAuthenticatedSession(); // Get the authenticated Axios session

const method = update ? "patch" : "post"; // Determine HTTP method based on the update flag

const url = `${FIDDLER_BASE_URL}/v3/events`; // Construct the Fiddler API URL

const data = {

source: source,

model_id: FIDDLER_MODEL_ID,

env_type: environment,

env_name: datasetName,

};

let response: AxiosResponse;

try {

// Send the request to the Fiddler API

response = await session.request({ method, url, data });

} catch (error) {

// Handle errors from the Axios request

if (axios.isAxiosError(error)) {

throw new Error(error.response?.statusText); // Throw error with response status text if available

} else {

throw new Error(`An unexpected error occurred : ${error}`); // Throw generic error message

}

}

return response.data; // Return the data from the Fiddler API response

}Handler for POST requests in NextJs 14 Api Route.

export async function POST(req: NextRequest, res: NextResponse) {

const fields = ["question", "answer", "documents", "url"]; // Required fields in the request body

const data = await req.json(); // Parse the JSON body of the request

// Check if all required fields are present in the request body

for (const field of fields) {

if (!data[field]) {

return NextResponse.json({ error: `${field} is missing in request body` }, { status: 400 }); // Return error if a field is missing

}

}

// Extract relevant fields from the request data

const { question, answer, context, url } = data || {};

// Construct the source object to be sent to the Fiddler API

const source = {

type: "EVENTS",

events: [

{

question: question, // Chat Question

answer: answer, // Chat Answer

documents: context, // Query Context from the database

url: url, // URL of the documents from teh Database

timestamp: Math.floor(Date.now() / 1000), // Current timestamp in seconds

},

],

};

try {

// Publish or update the event in Fiddler

const result = await publishOrUpdateEvents(source, environment, null, false);

console.log("Event Ingested Successfully into Fiddler:", result); // Log success message

return NextResponse.json(result, { status: 200 }); // Return success response with result data

} catch (error) {

console.error("An error occurred while ingesting event into the fiddler:", error.message); // Log error message

return NextResponse.json({ error: error.message }, { status: 400 }); // Return error response with error message

}

}After placing the above code in route.ts, you can simply call it using the fetch method from your page component as shown below.

Function in client side component to ingest the Fiddler events.

const fiddlerIngestion = async (question: string, answer: string, documents: string, url: string) => {

try {

const response = await axios.post(

"/api/fiddler_ingestion",

{ question: question, answer: answer, documents: documents, url: url },

{

headers: {

"Content-Type": "application/json",

},

},

);

if (response.statusText !== "OK") {

throw new Error(`Failure Occured While Ingestion Production Data into Fiddler:${response}`);

}

} catch (error) {

console.error("Error:", error);

}

};Fiddler Delivers Rich Insights on RAG-based LLM Applications

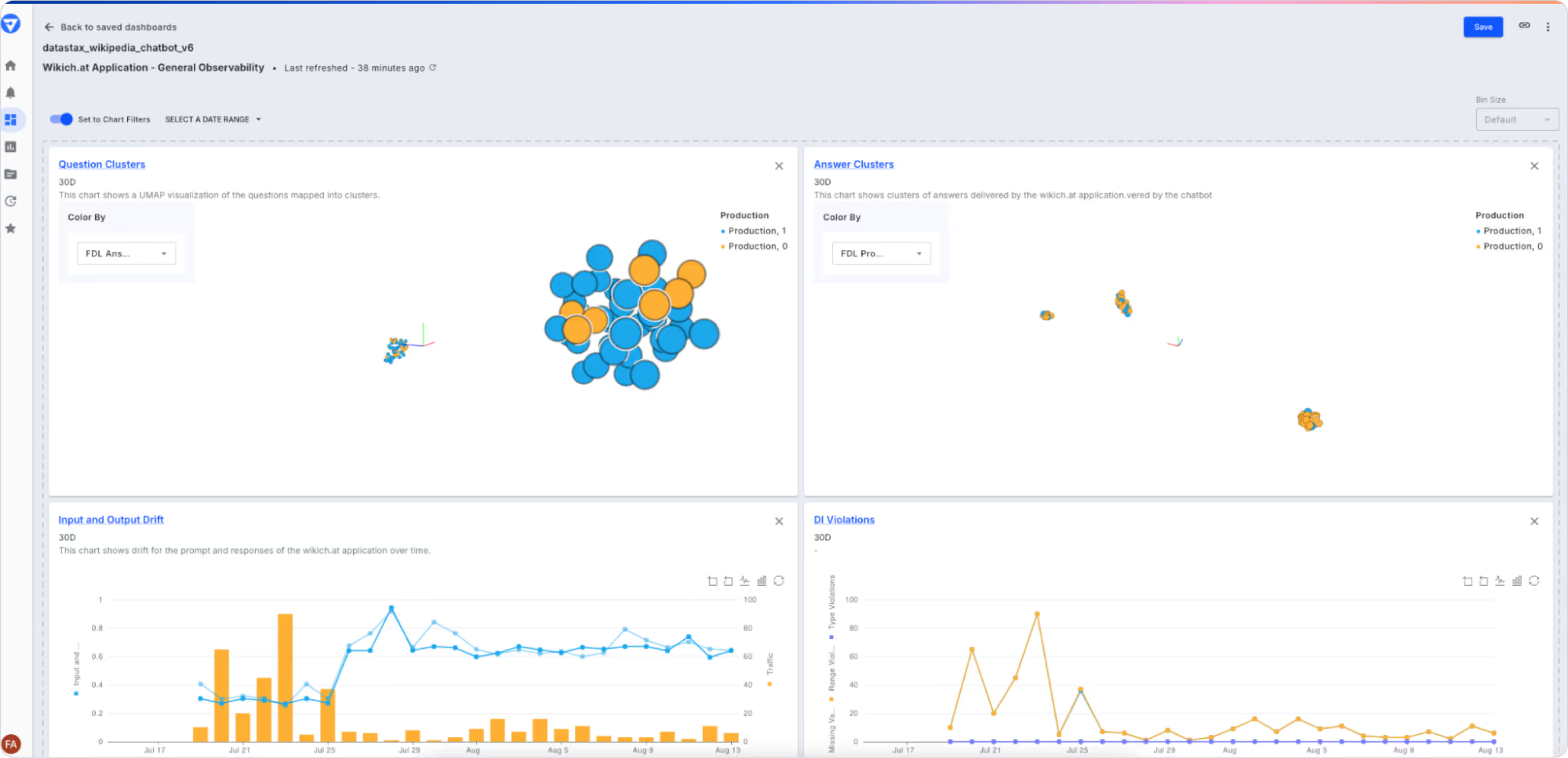

With trace data flowing from the WikiChat application, Fiddler Trust Models will score the prompts, responses, and metadata, assessing the trust-dimensions to detect and alert you to issues related to correctness and safety. You can customize dashboards and reports to focus on insights most relevant to your use case. For instance, you can create reports to track different dimensions of hallucination, such as faithfulness/groundedness, coherence, and relevance. Additionally, Fiddler will instantly detect unsafe events like prompt injection attacks, toxic responses, or PII leakage, allowing you to take corrective measures to protect your RAG-based LLM application.

Once correctness, safety, or privacy issues are detected, you can run diagnostics to understand the root cause of the problem. You can visualize problematic prompts and responses using a 3D UMAP, and apply filters and segments to further analyze the issue in greater detail.

Tracking the LLM metrics most relevant to your use case also helps you measure the success of your LLM application against your business KPIs. You can share findings and insights with executives and business stakeholders on how LLM metrics connect to KPIs. Sample business KPIs below:

- Customer Trust and Satisfaction

- LLM Metrics

- Faithfulness/Groundedness

- Answer Relevance

- Context Relevance

- PII

- Sentiment

- LLM Metrics

- User Engagement

- LLM Metrics

- Answer Relevance

- Context Relevance

- Session Length

- LLM Metrics

- Compliance and Risk Management

- LLM Metrics

- Faithfulness/Groundedness

- PII

- Regex Match

- Banned Keywords

- LLM Metrics

- Security

- LLM Metrics

- Jailbreak

- PII

- LLM Metrics

- Operational Efficiency

- LLM Metrics

- Faithfulness/Groundedness

- Conciseness

- Cost (Tokens)

- Latency

- LLM Metrics

You can explore how to build RAG chatbots by reading our 10 Lessons from Developing an AI Chatbot Using RAG guide.

You can also request a demo of the Fiddler AI Observability platform to explore how to monitor your RAG-based LLM applications.