At our recent Generative AI Meets Responsible AI summit, Dr. Ali Arsanjani, head of Google’s AI Center of Excellence, spoke about the evolution of generative AI, looking at the background of foundation models, clued viewers into the latest research at Google, and gave insights into where the MLOps lifecycle needs to be updated for generative AI. Here are some key takeaways from his talk:

TAKEAWAY 1:

The relationship between the size of language models and their capabilities is not linear.

The relationship between the size of language models and their capabilities is not linear; instead, it displays emergent behavior. As LLMs grow larger, they experience significant leaps in model performance, potentially following an exponential distribution. This emergent behavior, similar to complex adaptive systems, allows models with more parameters to perform a wider range of tasks compared to models with less parameters of similar size.

For instance, the Gopher model served as a foundation for the Chinchilla model, which, despite being four times smaller, was trained with four times the number of tokens, showcasing the complex relationship between size and capabilities in language models.

The key insight is that data and data efficiency are vital for building conversational agents or the models behind them. Pre-training typically involves around 1 billion examples or 1 trillion tokens, while fine-tuning has approximately 10,000 examples. In contrast, prompting requires only tens of examples, awakening the models' "superpowers" through few-shot data when needed. The combination of pre-training, fine-tuning, and prompting highlights the primary role of data and data efficiency in training these models effectively.

TAKEAWAY 2:

Explainability for AI-generated outputs are crucial for addressing toxicity, safety issues, and hallucination problems, while improving the reliability and monitoring of LLMs.

In recent years, companies like Google have been implementing safeguards to address toxicity, safety issues, and hallucination problems that may arise from AI-generated models, such as diffusion models that generate images from text. These models demonstrate varying levels of understanding, from distinguishing cause and effect to understanding conceptual combinations within a specific context. Context remains a critical factor in ensuring accurate and reliable outputs.

Large language models can tackle complex problems by breaking them down through chain-of-thought prompting, thus providing a rationale for the results obtained. As the field of AI research advances, it is essential to establish a traceable path for model outputs, enhancing their explainability. By leveraging explainable AI, it becomes possible to dissect the process into fundamental parts and identify any potential errors. This approach facilitates better model monitoring and understanding of the models' provenance and background, ensuring higher quality and more reliable outcomes.

TAKEAWAY 3:

Foundation models face a wide range of unique security concerns.

Addressing security concerns with LLMs is essential, as their ubiquity and generality can make them a single point of failure, similar to traditional operating systems. Issues such as data poisoning, where malicious actors inject harmful content, can compromise the models. Function creep and dual usage can lead to unintended applications, while distribution shifts in real-world data can cause significant drops in performance. Ensuring model robustness and AI safety is crucial, including maintaining human control over deployed systems to prevent negative consequences.

Mitigating misuse is also vital, as lowering the barrier for content creation makes it easier for malicious actors to carry out harmful attacks or create personalized content for spreading misinformation or disinformation. The potential amplification of misinformed or disinformed content through language generators can have a significant impact on the political scene, making it essential to address these concerns for the development and deployment of LLMs as part of a responsible AI strategy.

Watch the rest of the Generative AI Meets Responsible AI sessions here.

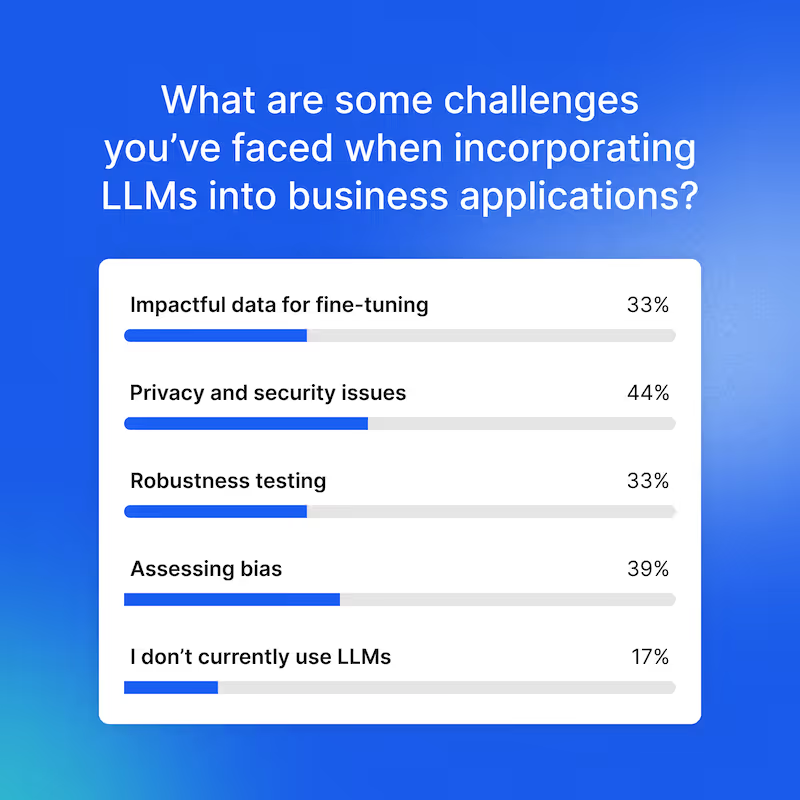

We asked the audience what challenges they faced incorporating LLMs into business applications. 44% were concerned with privacy and security issues: