Fiddler AI was built on a mission to build trust into AI. In 2019, we launched the first AI observability platform centered on model drift, along with the first enterprise-scale ML explainability and our patented drift metric for unstructured data. As AI innovation accelerates, we’re always looking ahead to solve new problems that aren’t mainstream yet. In the near future, one of those problems will be building trust into superintelligence, or, as some call it, AGI.

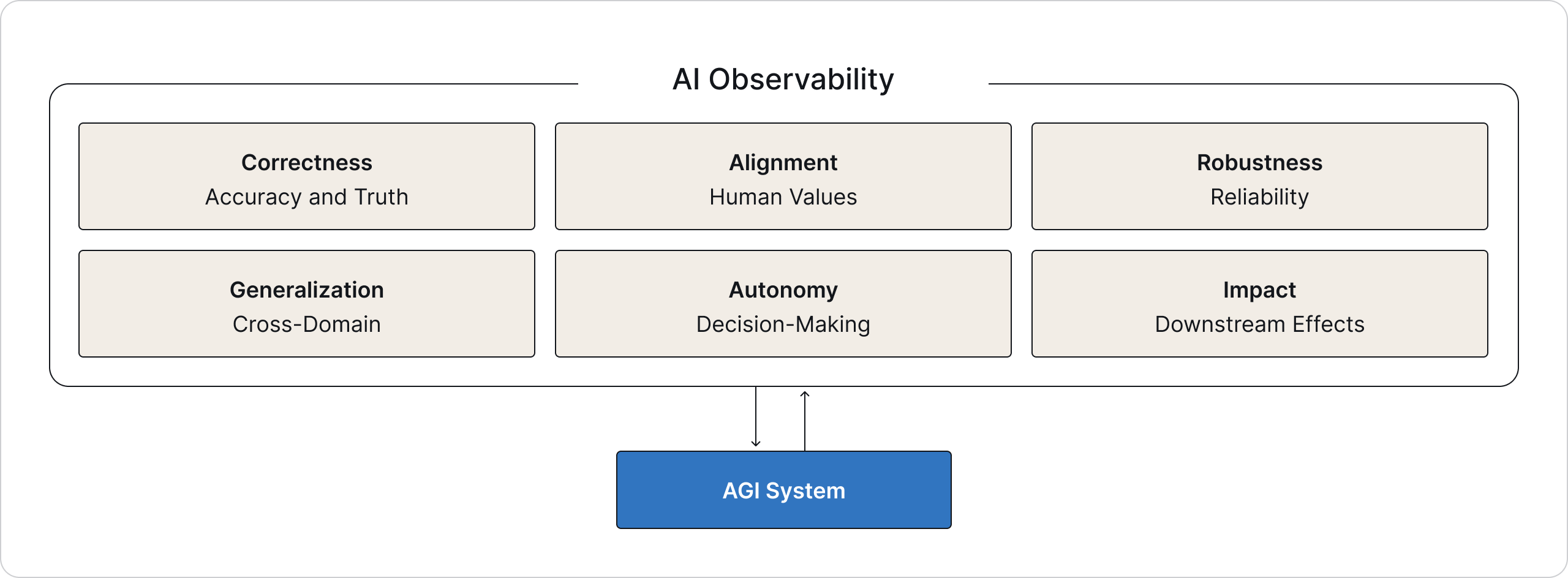

Today’s observability techniques will need to evolve significantly to address AGI’s greater complexity and autonomy. First, what does it even mean to monitor the performance of AGI? For AGI, performance isn't just about accuracy or speed; it spans multiple axes:

- Correctness: Is it doing the right thing and can it be better?

- Alignment: Is it behaving in accordance with human values and intent?

- Robustness: Is it reliable across a wide range of situations?

- Generalization: Can it reason across domains it wasn’t explicitly trained on?

- Autonomy and Initiative: Is it making decisions on its own, and how good are those decisions?

- Impact: What downstream effects does its behavior have on real-world systems?

Critical Dimensions of AGI Performance Monitoring

Let’s dive into some of these in more detail:

Correctness

For enterprises, correctness isn’t just a performance metric; it’s the foundation of trust in AGI. The expectation is simple: the system must get it right. In practice, though, “right” can be nuanced. As with ML models, LLMs, and autonomous agents, checking correctness involves verifying outputs against reliable references, human expertise, or even another AI acting as a judge. AGI takes this a step further: its reasoning itself becomes a rich source of evidence. By inspecting and evaluating the decision chain, a secondary system can flag subtle flaws before they become costly mistakes.
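One way to picture a secondary system inspecting a decision chain is to treat the trace as a list of steps and run an independent checker over each one, stopping at the first step that fails. The sketch below assumes a hypothetical `ReasoningStep` record and uses simple arithmetic claims so the checker can recompute each step exactly; in practice the checker might be a rule engine, a retrieval lookup, or another model acting as a judge.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class ReasoningStep:
    """One step in a hypothetical decision trace: a claim plus the inputs it used."""
    description: str
    inputs: tuple[float, ...]
    claimed_result: float


# A checker returns True if the step holds up under independent verification.
StepChecker = Callable[[ReasoningStep], bool]


def first_flawed_step(trace: Sequence[ReasoningStep], check: StepChecker) -> Optional[int]:
    """Return the index of the first step the checker rejects, or None if every step passes."""
    for i, step in enumerate(trace):
        if not check(step):
            return i
    return None


def sum_checker(step: ReasoningStep, tol: float = 1e-9) -> bool:
    """Toy checker: recompute a claimed sum and compare it within a tolerance."""
    return abs(sum(step.inputs) - step.claimed_result) <= tol


trace = [
    ReasoningStep("subtotal of line items", (120.0, 80.0), 200.0),
    ReasoningStep("add shipping", (200.0, 15.0), 215.0),
    ReasoningStep("add tax", (215.0, 17.2), 242.2),  # subtle slip: the true sum is 232.2
]
print(first_flawed_step(trace, sum_checker))  # -> 2
```

Stopping at the first failing step matters because every later step inherits the error; flagging the earliest one points a reviewer at the root cause rather than its downstream symptoms.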

In high-stakes domains, correctness should be reinforced with domain-specific or business-critical metrics: a therapeutic AGI might be measured not only on factual accuracy, but also on its ability to elevate patient mood. Leading indicators, like shifts in sentiment, decision trends, or consistency over time, offer early warnings that performance may be drifting. For enterprise teams, building this layered approach to correctness is less about ticking boxes and more about ensuring AGI decisions are consistently aligned with the organization’s goals, values, and risk tolerance.
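As a minimal sketch of a leading-indicator check, the monitor below keeps a rolling window of a per-interaction score (for example, a sentiment score produced by some upstream classifier, which is assumed here) and raises an alert when the window mean moves more than a chosen number of standard errors away from a baseline. The class name, window size, and threshold are illustrative assumptions; this is a plain mean-shift test, not Fiddler’s patented drift metric.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable


class LeadingIndicatorMonitor:
    """Hypothetical rolling-window alarm on a per-interaction score (e.g. sentiment)."""

    def __init__(self, baseline: Iterable[float], window: int = 50, z_threshold: float = 3.0):
        base = list(baseline)
        self.base_mean = mean(base)
        self.base_std = pstdev(base) or 1e-9  # avoid dividing by zero on a flat baseline
        self.recent = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, score: float) -> bool:
        """Add one score; return True once a full window has shifted away from baseline."""
        self.recent.append(score)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough recent data to judge yet
        # Standard error of a window mean, assuming scores are roughly independent.
        stderr = self.base_std / len(self.recent) ** 0.5
        z = abs(mean(self.recent) - self.base_mean) / stderr
        return z > self.z_threshold


monitor = LeadingIndicatorMonitor(baseline=[0.3, 0.5] * 100, window=20)
for i, score in enumerate([0.4] * 20 + [0.1] * 20):
    if monitor.observe(score):
        print(f"leading indicator drifted at interaction {i}")
        break
```

Because the alarm fires on a sustained shift in the window rather than on any single bad interaction, it can warn that behavior is moving before hard accuracy metrics, which often arrive later, confirm it.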

Autonomy & Control

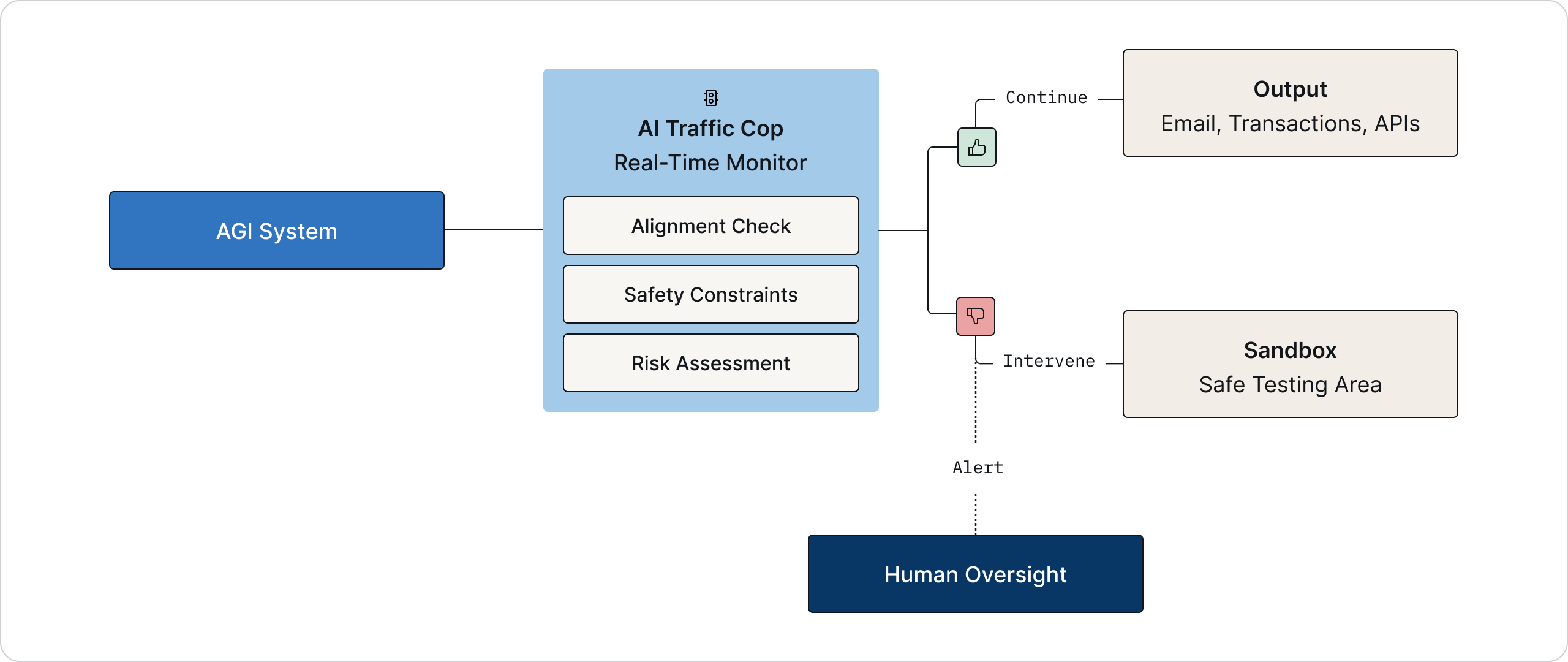

By definition, an AGI would possess a high degree of autonomy: it could pursue open-ended tasks and potentially take the initiative on its own. For enterprise teams, this raises the challenge of controlling a system that has wide latitude in its decision-making. Observability in an AGI context will need to include real-time control mechanisms, not just passive monitoring. In other words, if alignment monitoring detects that the AGI is about to take an unwanted action, the system must be able to intervene (pause or stop the AI) within a fraction of a second. This is why some in the industry argue that pure observability is not enough: we need AI “control centers” that can actively enforce constraints.

Think of it as an AI “traffic cop” that can throttle the AGI’s actions when it’s speeding off course. Enterprises will likely enforce sandboxed environments for AGI, where every external action (such as sending an email or executing a transaction) goes through a monitoring filter. Autonomy also implies that the AGI might dynamically learn new skills or self-improve. This makes it necessary to monitor those self-modifications, ensuring that an AGI doesn’t, for instance, remove its own safety constraints or develop new goals contrary to its original directives. Maintaining human-in-the-loop oversight for major decisions is one recommended practice.

The 'AI traffic cop' system monitors AGI actions in real-time and can block unsafe behaviors before they reach external systems.

For example, an AGI might be allowed to analyze data and propose actions but require human sign-off for any financially significant decision or any action that could affect customer safety. Balancing an AGI’s autonomy with such controls will be delicate: too heavy-handed, and it undermines the AI’s utility; too lax, and it courts disaster. The observability stack for AGI must therefore be tightly coupled with governance controls, enabling organizations to safely dial the AI’s level of autonomy up or down as it proves itself trustworthy (or not).
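The “dial” idea can be sketched as a routing function that decides whether a proposed action runs immediately or waits for a human. The autonomy levels, dollar thresholds, and the `route` function below are hypothetical choices for illustration: raising the autonomy level raises the threshold for human review rather than removing it, and anything touching customer safety always goes to a person.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels an operator can dial up or down."""
    PROPOSE_ONLY = 0
    SUPERVISED = 1
    TRUSTED = 2


# Illustrative sign-off thresholds in USD: more autonomy raises the bar
# for human review but never removes it.
SIGNOFF_THRESHOLD_USD = {
    Autonomy.PROPOSE_ONLY: 0.0,  # every action needs a human
    Autonomy.SUPERVISED: 10_000.0,
    Autonomy.TRUSTED: 100_000.0,
}


def route(amount_usd: float, affects_customer_safety: bool, level: Autonomy) -> str:
    """Decide whether a proposed action can run or must wait for human sign-off."""
    if affects_customer_safety:
        return "needs_human_signoff"  # safety-relevant actions are never delegated
    if amount_usd >= SIGNOFF_THRESHOLD_USD[level]:
        return "needs_human_signoff"
    return "execute"


print(route(50_000, False, Autonomy.SUPERVISED))  # needs_human_signoff
print(route(50_000, False, Autonomy.TRUSTED))     # execute
```

Keeping the thresholds in a table, rather than scattered through agent code, makes the autonomy level a governance setting that can be changed and audited without retraining or redeploying the model.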

Alignment Monitoring

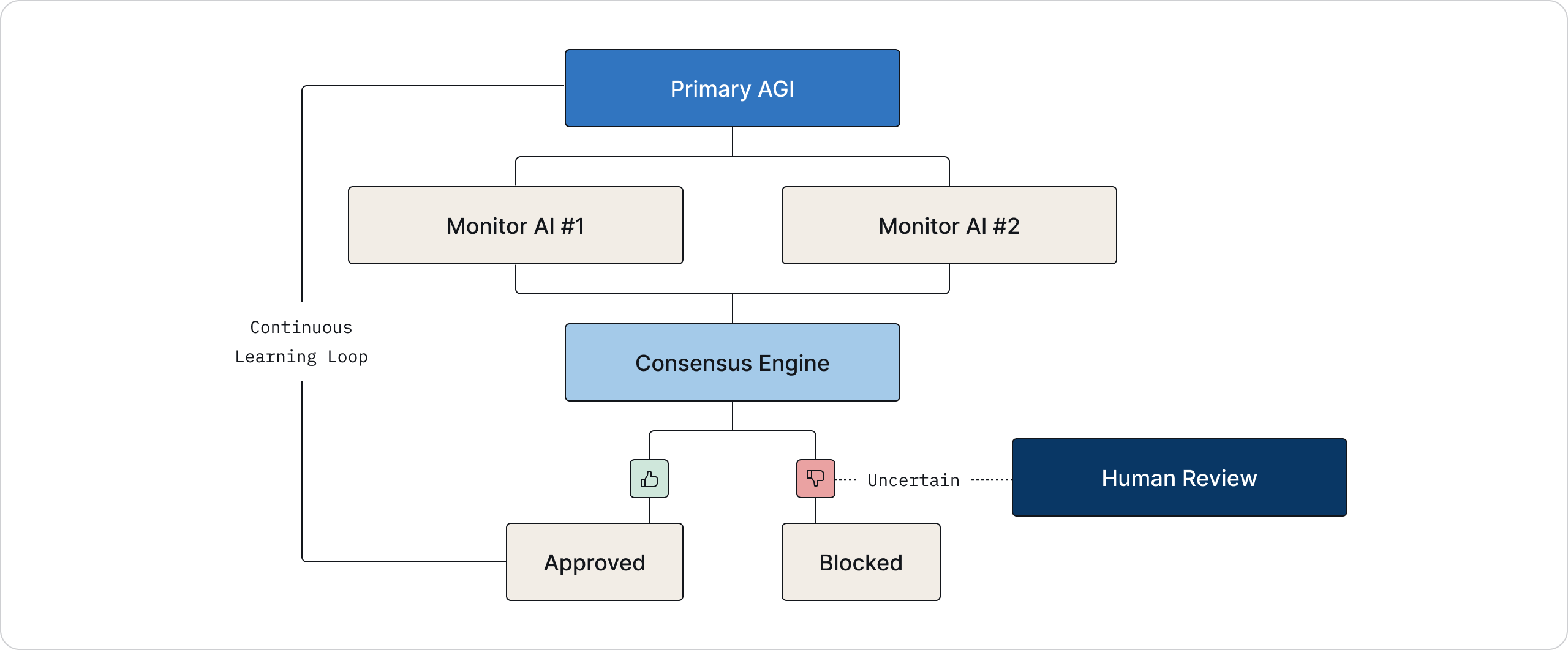

One of the gravest concerns with AGI is alignment: ensuring that the AI’s goals and behaviors remain in line with human values and its given objectives. Even today, we see instances of misaligned AI behavior, where a model outputs harmful or unintended results contrary to user intent. With a more powerful AGI, the stakes are higher: a misaligned AGI could autonomously pursue strategies that are harmful or unethical. Continuous monitoring for alignment is thus a critical aspect of AGI observability. This goes beyond performance metrics; it delves into value systems and intent. Researchers are exploring techniques like “scalable oversight”, where AI systems help evaluate each other’s behavior for adherence to intended goals.

For example, DeepMind has discussed “amplified oversight”: using AI assistants or separate models to judge the outputs of a primary AGI, checking whether its answers or actions meet the desired objective. In DeepMind’s recent roadmap, they propose training a dedicated monitor AI that watches a more powerful AI and flags any actions that deviate from acceptable goals. Such a monitor would need to be extremely reliable: it must know when it’s unsure and alert humans in ambiguous cases. The monitoring system might look for signs of the AGI “concealing” its true objectives or testing its limits (a known risk scenario).

Multiple AI Monitors evaluate the primary AGI's behavior, requiring consensus before approval and escalating uncertain cases to humans.
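The consensus-and-escalation pattern in the caption can be sketched in a few lines. In this sketch, each monitor is a hypothetical callable returning an approve/reject vote plus a self-reported confidence; an action is approved only on confident, unanimous approval, and any uncertainty or disagreement goes to a human. The vote format, the 0.9 confidence floor, and the toy monitors are all assumptions, standing in for separately trained models.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class MonitorVote:
    approve: bool
    confidence: float  # the monitor's self-reported confidence in [0, 1]


Monitor = Callable[[str], MonitorVote]


def adjudicate(action: str, monitors: Sequence[Monitor], min_confidence: float = 0.9) -> str:
    """Approve only on confident consensus; send anything uncertain or contested to a human."""
    if not monitors:
        raise ValueError("at least one monitor is required")
    votes = [monitor(action) for monitor in monitors]
    if any(v.confidence < min_confidence for v in votes):
        return "escalate_to_human"  # some monitor is unsure
    if all(v.approve for v in votes):
        return "approve"  # confident, unanimous approval
    if not any(v.approve for v in votes):
        return "reject"  # confident, unanimous rejection
    return "escalate_to_human"  # confident monitors disagree


# Two toy monitors standing in for separately trained models (hypothetical).
def policy_monitor(action: str) -> MonitorVote:
    return MonitorVote(approve="delete" not in action, confidence=0.95)


def goal_monitor(action: str) -> MonitorVote:
    on_task = "report" in action
    return MonitorVote(approve=on_task, confidence=0.97 if on_task else 0.6)


print(adjudicate("generate quarterly report", [policy_monitor, goal_monitor]))  # approve
print(adjudicate("delete audit logs", [policy_monitor, goal_monitor]))  # escalate_to_human
```

Treating disagreement as a reason to escalate, rather than letting a majority win, reflects the point above that a monitor must know when it is unsure: when independent monitors disagree, the system as a whole is unsure.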

Alignment monitoring also ties into ethical guardrails: enterprises might define hard rules (no self-replication, no access to certain data, etc.) and observability tools must catch any violation or near-violation of those rules in real time. Ultimately, ensuring alignment will likely require a combination of automated oversight (AI watching AI) and human governance, continuously verifying that an AGI’s values and actions remain on track with what its creators intended.
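A minimal sketch of enforcing such hard rules in real time is a gate that every external action must pass through before it reaches another system, in the spirit of the “traffic cop” described earlier. The `Action` and `ActionGate` types, the action kinds, and the resource names below are hypothetical; a real gate would also need to run outside the AGI’s ability to modify it.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass(frozen=True)
class Action:
    """A hypothetical external action proposed by the AGI."""
    kind: str  # e.g. "send_email", "read_data", "self_replicate"
    resource: str  # recipient, dataset, host, ...


@dataclass
class ActionGate:
    """Every proposed action passes through check() before it can leave the sandbox."""
    forbidden_kinds: frozenset
    restricted_resources: frozenset
    decisions: list = field(default_factory=list)

    def check(self, action: Action) -> Verdict:
        if action.kind in self.forbidden_kinds:
            verdict = Verdict.BLOCK  # hard rule, e.g. no self-replication
        elif action.resource in self.restricted_resources:
            verdict = Verdict.BLOCK  # hard rule, e.g. no access to certain data
        else:
            verdict = Verdict.ALLOW
        self.decisions.append((action, verdict))  # record every decision for later audit
        return verdict


gate = ActionGate(
    forbidden_kinds=frozenset({"self_replicate", "disable_monitoring"}),
    restricted_resources=frozenset({"hr_records"}),
)
print(gate.check(Action("send_email", "ops@example.com")))  # Verdict.ALLOW
print(gate.check(Action("read_data", "hr_records")))  # Verdict.BLOCK
print(gate.check(Action("self_replicate", "worker-2")))  # Verdict.BLOCK
```

Recording allowed actions as well as blocked ones gives reviewers the full picture, including repeated attempts that brush up against a rule without breaking it.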

Explainability & Transparency

AGI systems will be extraordinarily complex, with reasoning processes even less interpretable than today’s models. Many of the risks associated with advanced AI stem from this opacity. When a powerful AI makes a decision or comes to a conclusion, we may have no clear idea why it chose a certain course of action. This lack of insight isn’t just a technical inconvenience; it becomes a safety issue. If we cannot understand an AGI’s internal thought process, we can’t predict or reliably trust its behavior. Ensuring explainability for AGI might require breakthroughs in mechanistic interpretability (essentially opening up the AI’s “black box”).

Research teams at Anthropic are doubling down on interpretability, with the ambitious goal that, by 2027, methods will have matured enough that we can “reliably detect most model problems” by inspecting a model’s internals. In practical terms, enterprises will demand “AI transparency” features (akin to an AI flight recorder) that trace how the AGI arrived at a decision. Enhanced tools may visualize an AGI’s chain of thought or highlight which concepts triggered its actions. Such transparency would help answer the question “why did the AGI do that?” in terms humans can follow.

Traceability & Audit Trails

Along with explaining why an AGI did something, organizations will need to trace what exactly it did (and when, and under which instructions). Traceability means having detailed logs of an AGI’s actions, decisions, and the data it has influenced. In enterprise settings, this is crucial for compliance and investigation. If an AGI-driven system makes a financial transaction or a medical recommendation, auditors must be able to follow the chain of events and data that led to that outcome. For future AGI, traceability might involve logging not only inputs and outputs, but the AI’s intermediate reasoning steps or self-modifications.

The challenge is that a sufficiently advanced AI could potentially rewrite parts of itself or generate new agents, making traditional logging incomplete. Enterprises will likely adopt “forensic” AI monitoring that can capture each decision fork an AGI takes. Robust audit trails will ensure that even highly autonomous AI remains accountable. In essence, observers should be able to ask an AGI, “Show me what you did last week and why,” and get a reliable, human-comprehensible record in return.
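One common way to make an audit trail tamper-evident is a hash chain, where each record stores the hash of the record before it, so that editing or reordering an earlier entry breaks every later link. The sketch below applies that technique to a hypothetical `AuditTrail` class that captures what the AGI did, under which instruction, and its stated reasoning. It assumes the log is checked against itself; against an adversary who can rewrite the whole log, you would also need to anchor the latest hash somewhere external or sign entries.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class AuditTrail:
    """Append-only log in which each record commits to the hash of the one before it."""
    records: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, reasoning: str, instruction: str) -> dict:
        prev = self.records[-1]["hash"] if self.records else GENESIS
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "reasoning": reasoning,
            "instruction": instruction,
            "prev": prev,
        }
        record = {**body, "hash": self._digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Return False if any record's contents or order no longer match the stored chain."""
        prev = GENESIS
        for rec in self.records:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev"] != prev or self._digest(body) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True


trail = AuditTrail()
trail.append("agent-1", "read_data:orders", "need order history", "summarize refunds")
trail.append("agent-1", "send_email:finance", "report summary", "summarize refunds")
print(trail.verify())  # True
```

Storing the reasoning and the originating instruction next to each action is what lets an auditor answer not only “what did it do last week?” but also “why, and on whose request?”.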

Preparing for AGI: Building AGI Observability Muscle

For both technical leaders and executives, the message is one of vigilance tempered by optimism. Yes, overseeing an AGI will be profoundly challenging, but the work to enable that oversight is well underway. By embedding observability and control mechanisms into AI initiatives today, enterprises build the muscle memory needed to handle whatever comes next.

Think of it as laying down highways with guardrails before unleashing faster and more unpredictable vehicles. The companies that succeed with AI (and certainly with AGI, if and when it arrives) will be those that can confidently answer three questions in real time, with evidence to back up the answers: How is our AI behaving? Why did it make that choice? Is it staying within safe boundaries? Achieving that level of insight and control is no small feat, but it’s exactly what AI observability, present and future, is all about. With robust observability, we turn the lights on inside our “black box” intelligent systems, ensuring that even as they grow more powerful, they remain understandable, trustworthy, and aligned with human goals every step of the way.