We saw AI in the spotlight a lot in 2021, but not always for the right reasons. One of the most pivotal moments for the industry was the revelation of the “Facebook Files” in October. Former Facebook product manager and whistleblower Frances Haugen testified before a Senate subcommittee on how Facebook’s algorithms “amplified misinformation” and how the company “consistently chose to maximize growth rather than implement safeguards on its platforms” (Source: NPR). It was an awakening for everyone, from laypeople unaware of how they interacted with AI and triggered algorithms to skilled engineers building innovative, AI-powered products and solutions. We, the AI industry collectively, can and must do better.

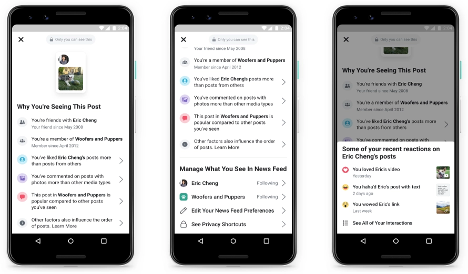

As a former engineer on Facebook’s News Feed team, where I worked on the company’s “Why am I seeing this?” feature, which tries to explain to users the logic behind post rankings, I was saddened by Haugen’s testimony exposing, among other things, Facebook’s algorithmic opacity. After the 2016 election, my team at Facebook started putting guardrails around News Feed AI algorithms, checking for data integrity, and building debugging and diagnostic tooling to understand and explain how those algorithms work.

Features like “Why am I seeing this?” started to bring much-needed AI transparency to the News Feed for both internal and external users. I began to see that problems like these were not intractable but in fact solvable. I also began to understand that they weren’t just a “Facebook problem” but were prevalent across industries.

In 2019, for example, Apple and Goldman Sachs were accused of gender bias in setting Apple Card credit limits. What started as a tweet thread with multiple reports of alleged bias (including from Apple’s own co-founder, Steve Wozniak, and his spouse) eventually led to a regulatory probe into Goldman Sachs and its AI prediction practices.

As laid out in the tweet thread, Apple Card’s customer service reps were powerless against the AI’s decisions. Not only did they have no insight into why certain decisions were made, they were also unable to override them. And yet this kind of opaque, unaccountable decision-making is still happening.

Where Do We Go from Here?

Algorithmic opacity and bias aren’t just a “tech company” problem; they are an equal-opportunity menace. Today, AI is being used everywhere from credit underwriting and fraud detection to clinical diagnosis and recruiting. Anywhere a human has been tasked with making decisions, AI is now either assisting in those decisions or has taken them over.

Humans, however, don’t want a future ruled by unregulated, capricious, and potentially biased AI. Civil society needs to wake up and hold large corporations accountable. People like Joy Buolamwini, who founded the Algorithmic Justice League in 2016, and Jon Iwata, founding executive director of the Data & Trust Alliance, are doing great work raising awareness. But to ensure every company follows TikTok’s path and discloses its algorithms to regulators, we need strict laws. Congress has been sitting on the Algorithmic Accountability Act since April 2019. It is time to act quickly and pass the bill in 2022.

Yet even as we speak, new AI systems are being set up to make decisions that dramatically impact humans, and that warrants a much closer look. People have a right to know how decisions affecting their lives were made, and that means explaining AI.

But the reality is that this is getting harder and harder to do. “Why am I seeing this?” was a good-faith attempt at it (I know; I was there), but I can also say that the AI at Facebook has grown enormously complex and become even more of a black box.

The problem we face today as global citizens is that we don’t know what data the AI models are being built on, and these models are the ones our banks, doctors, and employers are using to make decisions about us. Worse, most companies using those models don’t know either.

We have to hit the pause button. So much depends on us getting this right. And by “this,” I mean AI and its use in decisions that impact humans.

This is also why I founded a company dedicated to making AI transparent and explainable. For humans to trust AI, it needs to be transparent. Companies need to understand how their AI works and be able to explain it to all stakeholders.

They also need to know what data their systems were, and are, being trained on, because if that data is incomplete or biased (whether inadvertently or intentionally), the flawed decisions those systems make will be reinforced perpetually. And then it’s really the companies, their leaders, and all of us who are being unethical.

Getting It Right

So what’s the fix? How do we ensure that AI’s trajectory, now and in the future, is ethical, and that AI is used for good as much as possible? There are three steps to getting this right:

- Making AI “explainable” is an important first step, which in turn creates a need for people, software, and services that can (1) deconstruct current AI applications and identify their data and algorithmic roots and taxonomies, and (2) ensure the recipes of new AI applications are 100% transparent. For this to work, of course, companies need to be well-intentioned. Explainable AI only results in ethical AI if companies want it to. That said, companies should not view ethical AI as a cost to their business. Good ethics is good for business.

- Regulating AI is not only a logical next step; I’d argue it’s an urgent one. The potential for AI to be abused is so great, and the moral, social, and economic ramifications of that abuse so significant, that we can’t rely on self-policing alone. AI needs regulatory oversight analogous to what was imposed on the financial services industry after the 2008 financial crisis (remember “too big to fail”?). With AI, the stakes are just as high, or higher, and we need a watchdog with teeth to actively guide and monitor future AI deployments. AI regulations could provide a common operating framework, set up a level playing field, and hold companies accountable, which would be a boon for all stakeholders.

- A framework like the one used for Environmental, Social, and Governance (ESG) reporting would add significant value. Today, companies around the world are being held accountable by shareholders, supply chain partners, boards, and customers to decarbonize their operations and help meet climate goals. ESG reporting makes corporate sustainability efforts more transparent to stakeholders and regulators. AI would benefit from a similar framework.

Until AI is “explainable,” it will be impossible to ensure it is “ethical.” My hope is that we learn from years of inaction on climate change that getting this right, now, is critical to the present and future wellbeing of humankind.

This is solvable. We know what we need to do and have models we can follow. Fiddler and others are providing practical tools, and governing bodies are taking notice. We can no longer abdicate responsibility for the things we create. Together, we can make 2022 a milestone year for AI, implementing regulation that offers transparency and ultimately restores trust in the technology.