According to a survey by the World Economic Forum (WEF), 85% of financial services companies had implemented AI in some form as of early 2020, and mass adoption was expected over the following two years in domains such as customer service, client acquisition, risk management, process re-engineering, and generation of new revenue. AI's impact on banking in particular is expected to be transformational: the global business value of AI in banking is projected to reach $300B by 2030, with North America as the largest market. The opportunities in banking center on growing existing revenue centers or creating new ones through better risk management, more efficient processes, and improved customer operations. Key use cases include credit lending, fraud and threat detection, anti-money laundering, and compliance. AI is therefore set to drive the next stage of growth in banks, and this has set off a race to turn AI into a competitive advantage: JPMorgan has expanded its AI deployment with a significant data science center in Silicon Valley, while Wells Fargo already has thousands of ML models deployed in production.

But operationalizing AI is challenging. As teams build more complex models to increase accuracy, those models become increasingly opaque, offering limited visibility into how they work. ML models are also unlike traditional code: they are trained on historical examples to perform well on repeatable tasks, so their performance can fluctuate after deployment as the data fed into them changes. Because these issues are specific to machine learning, traditional monitoring products were not designed to address them.

The result is a significant lack of observability, which creates a challenge for financial services for two compliance reasons:

1. Regulation

Key banking use cases, especially credit lending, are regulated by a host of laws, including the Equal Credit Opportunity Act (ECOA), the Fair Housing Act (FHA), the Civil Rights Act, and the Immigration Reform Act. Additionally, upcoming regulations such as the proposed Algorithmic Accountability Act, which aims to mitigate the adverse effects of incorrectly deployed AI, are looming. Companies deploying AI also face ethical and compliance risks, as frequent news stories about algorithmic bias show. When not addressed, these issues can lead to a lack of trust, negative publicity, and regulatory action resulting in hefty fines.

2. Model Risk Management

Banks need to ensure they adhere to guidelines laid down by the Federal Reserve, the OCC, and other governing bodies. This became especially critical after the Great Recession, which was caused in part by model risk. The SR 11-7 and OCC Bulletin 2011-12 guidance, for example, outlines how banks should manage model risk: documenting details about model data, validating model behavior, and monitoring the model once deployed. SR 11-7 and the OCC guidance require every model (especially high-risk models) to be validated before it is launched.

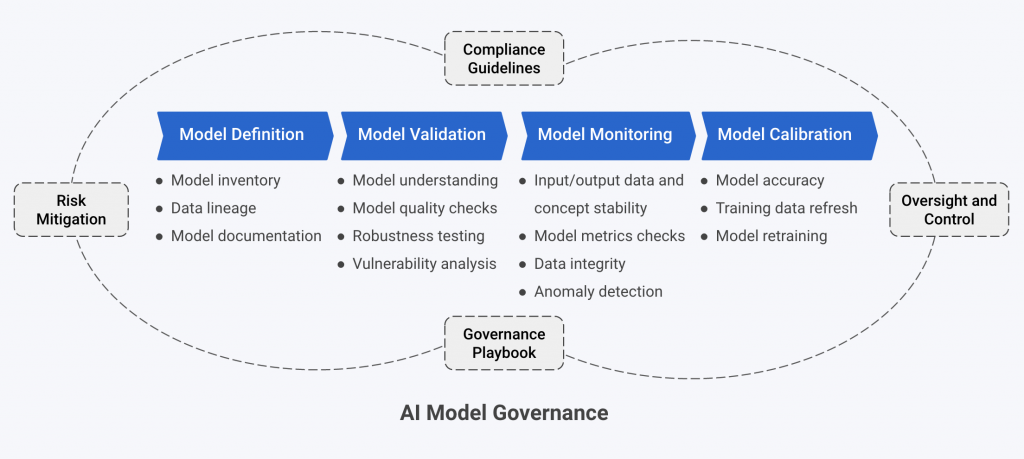

AI Governance

Banks have been using a related type of mathematical model for decades: statistical or quantitative models. To ensure these models meet the guidelines, banks have adopted a process with three lines of defense:

a) The first line is the business team that owns the use case and builds the model.

b) The second line is the Risk Management team, which ensures the models meet risk goals.

c) The third line is the Internal Audit team, which ensures the first two lines of defense are operating as expected.

This existing organizational structure is being updated for the AI context: the Risk Management, or Model Risk Management (MRM), team now includes an AI Governance team responsible for quality assurance of ML models. Leading banks have already begun to assemble their AI Governance teams. These teams include a new role, the AI Validator, which requires a data science background similar to that of the model's developers so that validators can quickly find where a model breaks.

AI Validator

AI Validators have the critical responsibility of quality assurance for ML models. Model developers provide them with complete model documentation, including detailed data lineage, modeling approaches, assumptions, and comprehensive validation reports. Validators begin by probing the model to learn its behavior across individual data instances (local behavior), groups of instances (region behavior), and all data instances (global behavior). Because these models can drive significant financial decisions, and adversarial attacks on them can cause unintended losses, validators also test model robustness and probe for weaknesses using a variety of counterfactual and adversarial techniques. In essence, validators attempt to open up the black box of an ML model and understand all of its risks before approving it for deployment.

They must be able to answer questions that reveal the ‘why’ behind model decisions. For example, when evaluating a credit applicant, an AI Validator might ask:

- What were the main features that impacted approval or rejection outcomes globally or across specific groups?

- For individual loan applicants, which features were responsible for an approval or rejection?

- Are there any characteristics that stand out for individual applicants that are inconsistent with others in their group?

- What are the minimal changes an applicant would need to make for their approval outcome to change?
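For a simple model, the first, second and last of these questions can be answered directly. The sketch below assumes a hypothetical logistic-regression credit model with made-up weights and features (`income_k`, `debt_ratio`, `years_employed`). Because the score is linear, each feature's contribution to the log-odds relative to an average applicant is exact, and so is the single-feature change needed to reach the decision boundary. Opaque models need approximate methods (such as SHAP for attributions, or optimization-based counterfactual search) that this sketch only stands in for. The bounds, scales and 0.5 cutoff are likewise assumptions.

```python
import math

# Hypothetical credit-scoring model: a logistic regression with hand-picked
# weights. Features, weights, bounds, scales and applicants are illustrative.
FEATURES = ["income_k", "debt_ratio", "years_employed"]
WEIGHTS = {"income_k": 0.04, "debt_ratio": -5.0, "years_employed": 0.3}
BIAS = -2.0
CUTOFF = 0.5  # assumed rule: approve if probability >= CUTOFF
BOUNDS = {"income_k": (0, 1000), "debt_ratio": (0.0, 1.0), "years_employed": (0, 50)}
SCALES = {"income_k": 20.0, "debt_ratio": 0.25, "years_employed": 3.0}  # assumed "typical" step per feature


def log_odds(x):
    """Linear score (log-odds of approval) for one applicant."""
    return BIAS + sum(WEIGHTS[f] * x[f] for f in FEATURES)


def approval_prob(x):
    return 1.0 / (1.0 + math.exp(-log_odds(x)))


def local_attribution(x, base):
    """Each feature's contribution to the log-odds relative to a baseline
    applicant; for a linear model these sum to log_odds(x) - log_odds(base)."""
    return {f: WEIGHTS[f] * (x[f] - base[f]) for f in FEATURES}


def mean_abs_attribution(rows, base):
    """Average contribution magnitude: all rows -> global, a subgroup -> region."""
    return {f: sum(abs(local_attribution(r, base)[f]) for r in rows) / len(rows)
            for f in FEATURES}


def minimal_change(x):
    """Cheapest single-feature change (in SCALES units) that moves x onto the
    decision boundary, skipping changes outside BOUNDS; None if none is feasible."""
    gap = math.log(CUTOFF / (1.0 - CUTOFF)) - log_odds(x)
    best = None
    for f in FEATURES:
        delta = gap / WEIGHTS[f]  # exact for a linear score; weights are nonzero
        lo, hi = BOUNDS[f]
        if not lo <= x[f] + delta <= hi:
            continue  # e.g. a negative debt ratio is not a real option
        cost = abs(delta) / SCALES[f]
        if best is None or cost < best[2]:
            best = (f, delta, cost)
    return None if best is None else best[:2]


applicants = [
    {"income_k": 85, "debt_ratio": 0.20, "years_employed": 6},
    {"income_k": 40, "debt_ratio": 0.55, "years_employed": 1},
    {"income_k": 55, "debt_ratio": 0.45, "years_employed": 2},
    {"income_k": 120, "debt_ratio": 0.10, "years_employed": 12},
]
# Baseline: the average applicant in this toy dataset.
base = {f: sum(a[f] for a in applicants) / len(applicants) for f in FEATURES}

print(mean_abs_attribution(applicants, base))   # global drivers
low_income = [a for a in applicants if a["income_k"] < 70]
print(mean_abs_attribution(low_income, base))   # region (subgroup) drivers
print(local_attribution(applicants[2], base))   # why this applicant scored low
print(approval_prob(applicants[2]), minimal_change(applicants[2]))
```

In this toy data every contribution for the third applicant is negative, and the cheapest single change that reaches the boundary is lowering `debt_ratio` by about 0.29. A production counterfactual search would also consider changing several features jointly, respect which features an applicant can actually act on, and aim slightly past the boundary rather than exactly onto it.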

Once a model is deployed, AI Validators monitor it continuously, not only for compliance reasons but also to ensure that changing data behavior doesn't cause model decisions to go awry. Gartner has documented the rising trend of AI validation roles for managing enterprise AI risk.
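One common way to watch for changing data behavior is to compare each input feature's production distribution with its training distribution. The sketch below uses the Population Stability Index (PSI) with equal-width bins over the reference range. The synthetic income data, bin count and thresholds are assumptions for illustration, and other drift metrics (such as the Kolmogorov-Smirnov statistic) are equally valid choices.

```python
import math
import random


def psi(reference, production, bins=10):
    """Population Stability Index between a reference sample (e.g. training
    data) and a production sample, using equal-width bins over the reference
    range. Larger values mean the input distribution has shifted more."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins if hi > lo else 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # A small floor avoids log(0) when a bin is empty in one sample.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, prod = proportions(reference), proportions(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


# Synthetic, illustrative data: applicant income (in $k) at training time vs. in production.
rng = random.Random(0)
train_income = [rng.gauss(60, 15) for _ in range(5000)]
stable_prod = [rng.gauss(60, 15) for _ in range(5000)]
shifted_prod = [rng.gauss(80, 15) for _ in range(5000)]

# Commonly cited rule of thumb: < 0.1 stable, 0.1-0.25 moderate, > 0.25 major shift.
for name, sample in [("stable", stable_prod), ("shifted", shifted_prod)]:
    print(name, round(psi(train_income, sample), 3))
```

The 0.1 and 0.25 thresholds are a widely used industry rule of thumb rather than a regulatory standard; teams typically tune them per feature and alert when a feature crosses them.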

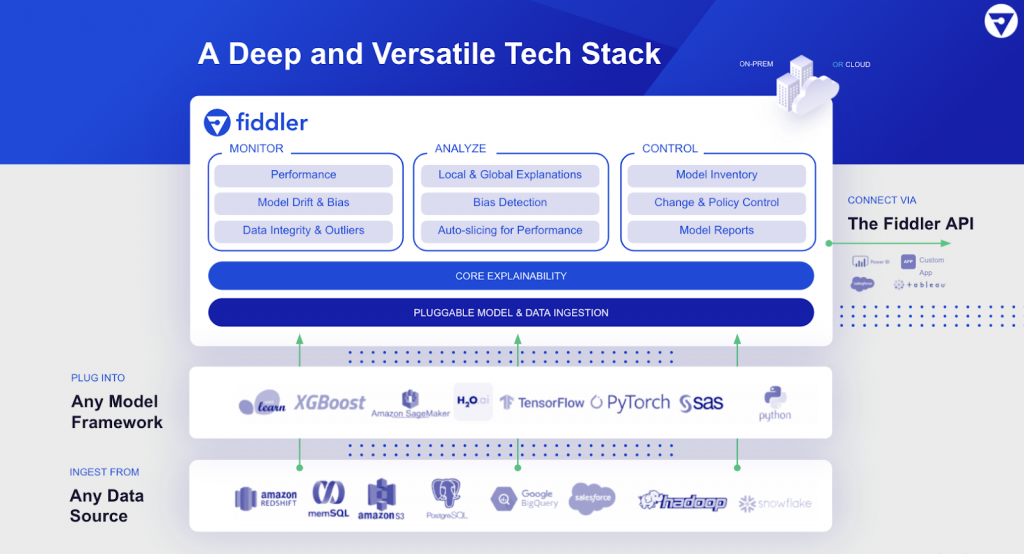

Tooling

Because ML models are increasingly opaque, validators need a different set of tools to test and monitor these AI systems. Explainable AI, a recent area of research focused on reverse-engineering model decisions, lets teams inspect individual decisions and analyze models holistically. These insights can then be documented for regulators and other model stakeholders. Models also face unique operational issues that call for a purpose-built solution, one that not only alerts on problems quickly but also helps troubleshoot them for fast turnaround. Large banks have been using MRM systems to handle quantitative models; these systems serve as the model inventory and support model approval workflows and model QA. With the arrival of ML models, these systems will either need significant changes to adapt to the new workflow or ultimately be relegated to quantitative models only, with a new, connected system handling ML models.

As AI adoption accelerates in financial services, AI Governance teams and their new AI Validator role will become an integral part of the risk management process, and new tooling will mature to meet the needs of this market.