The rapid rise of agentic AI presents a double-edged sword: the remarkable potential for autonomous systems to plan, reason, and act, alongside a new landscape of complex risks and strategic challenges. In our recent AI Explained fireside chat, "Agent Wars," we sat down with AI strategist and former Amazon Prime Video Head of Product, Nate B. Jones, to cut through the noise and explore the reality of agentic AI adoption, the critical architectural decisions teams are facing, and the hidden risks that can derail production deployments.

Navigating the Trough of Disillusionment

While it may seem like every company is deploying sophisticated agents, the reality on the ground is more nuanced. Nate Jones offered a practical perspective, confirming that many teams are in a "trough of disillusionment." The hype is immense, but teams quickly hit a steep S-curve of difficulty. As Nate put it, "successful implementation of agents [requires] a tremendous amount of skill and work... It's not plug and play yet."

As the majority of teams move through the "exploring" and "prototyping" phases, we're seeing a corresponding rise in the need for agentic observability to manage this new complexity. The dazzling, dynamic nature of generative AI can create unrealistic expectations. Teams discover that while getting started is easier than ever (the "blank page" problem is solved), getting to a finished, reliable product is harder than ever. The complexity has been inverted: the challenge is no longer starting, but constraining and managing the system so that it does exactly the right thing. Without the right guardrails, an agent whose data source is disconnected won't throw an error; it will simply "riff," filling the gap with confident hallucinations. This new class of silent, non-deterministic failures requires a fundamental shift in how we build and monitor software.
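To make the "disconnected data source" failure concrete, here is a minimal sketch of one possible guardrail: refuse to call the model at all when retrieval fails or comes back empty, so the failure is loud instead of silent. The names `Retrieval`, `DataSourceUnavailable`, `guarded_answer`, `retrieve`, and `generate` are hypothetical and were not discussed in the chat; in practice `retrieve` and `generate` would wrap whatever data source and model client your stack uses.

```python
from dataclasses import dataclass
from typing import Callable, Optional


class DataSourceUnavailable(RuntimeError):
    """Raised when the agent's grounding data cannot be retrieved."""


@dataclass
class Retrieval:
    # Hypothetical container for whatever the data source returned.
    documents: list[str]
    error: Optional[str] = None


def guarded_answer(
    question: str,
    retrieve: Callable[[str], Retrieval],
    generate: Callable[[str, list[str]], str],
) -> str:
    """Call the model only when retrieval produced usable context."""
    result = retrieve(question)
    if result.error is not None:
        # Surface the outage instead of letting the model improvise.
        raise DataSourceUnavailable(f"retrieval failed: {result.error}")
    if not result.documents:
        # An empty result is treated as a failure, not as "no context needed".
        raise DataSourceUnavailable("retrieval returned no documents")
    return generate(question, result.documents)
```

Raising an exception is only one design choice; returning an explicit "I could not reach the data source" message to the user would serve the same goal of making the failure visible.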

The Architect's Dilemma: Your First Decisions Are Your Most Important

Before a single line of agentic code is written, teams face foundational architectural decisions that will have long-lasting consequences. These choices can be the difference between building an intelligent, autonomous system and creating a "fragile orchestration" that is brittle and impossible to maintain. Nate broke down the most pressing trade-offs:

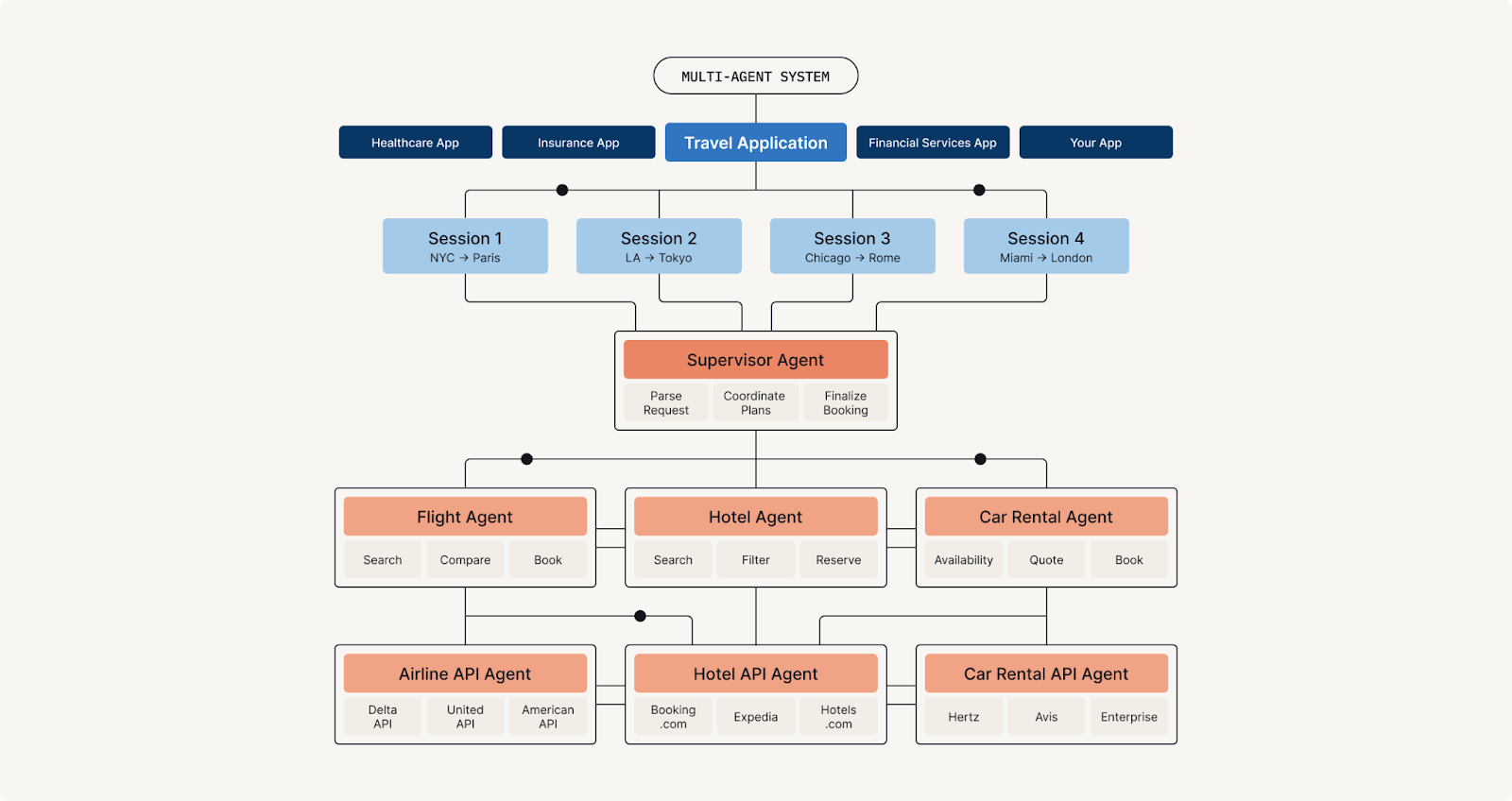

Single-Agent vs. Multi-Agent Systems

One of the first forks in the road is whether to build a single, powerful "god agent" or a system of smaller, specialized agents. As an agent's responsibilities grow, it can quickly become a monolithic black box that is difficult to update or understand.

Multi-agent systems, on the other hand, offer greater modularity and resilience. This is often a human-centric choice, allowing teams to articulate a "separation of concerns" that helps them reason through complex workflows. However, the challenge shifts from internal complexity to external coordination. How do agents communicate? How do you prevent cascading failures?

Nate offered a key insight from an Anthropic white paper: multi-agent systems are often a proxy for spending more tokens on a problem. By having multiple agents "think" about a task, you are effectively increasing the computational power dedicated to it. Therefore, the decision should be guided by the problem itself: is this a task where throwing more tokens at it linearly increases the chance of success? If so, a multi-agent system may be worth the architectural overhead. If not, a simpler, single-agent approach is likely the more prudent choice.
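One way to test this question empirically is to run the same evaluation set at several token budgets and look at how much success rate each extra block of tokens buys. The sketch below assumes you already have such measurements from your own evals; the function name `gain_per_1k_tokens` and the input shape are illustrative assumptions, not something from the chat or the white paper.

```python
def gain_per_1k_tokens(
    measurements: list[tuple[int, float]],
) -> list[tuple[int, int, float]]:
    """Return the success-rate gain per extra 1,000 tokens between budgets.

    `measurements` holds (token_budget, success_rate) pairs from your own
    evaluation runs. Each output row is (lower_budget, higher_budget, gain).
    """
    ordered = sorted(measurements)
    gains = []
    for (t0, s0), (t1, s1) in zip(ordered, ordered[1:]):
        if t1 == t0:
            continue  # duplicate budget, no slope to compute
        gains.append((t0, t1, (s1 - s0) / (t1 - t0) * 1000))
    return gains
```

If the gain stays roughly constant as the budget grows, the task is the kind where more tokens keep paying off, and the multi-agent overhead may be justified. If it drops toward zero, extra agents are mostly adding cost and coordination risk.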

The Build vs. Buy Decision

With a growing ecosystem of agentic frameworks and off-the-shelf tools, the "build vs. buy" decision is more critical than ever. Nate is bullish on "buy" for tools that enable developers, but "less bullish on finished tools that you can buy as a company." This caution stems from a fundamental understanding of where the true value of AI agents lies: in their ability to process complex, unique business contexts. Enterprise environments are characterized by their intricate data structures, bespoke rules, and nuanced operational procedures.

Building a universal, one-size-fits-all agent that can navigate these specifics is exceedingly difficult. Because enterprise data and processes vary so much from one organization to the next, generic, pre-built solutions often fall short of an individual company's needs. Buying developer tools can be highly advantageous, but the real strategic advantage in the agentic space often comes from custom-building solutions that integrate deeply with a company's own data and operations.

As Nate framed it, a simple agent that "scrapes the slack and it delivers a report" will be commoditized. But a complex agent that handles dispatch for a fleet of trucks — aware of weather, maintenance records, and driver schedules — is a different beast. Such an agent isn't a product you buy; it's a new class of strategic asset you develop, straddling the line between software and employee. If the agentic workflow is a core competitive differentiator, you should invest in building a custom solution. For more generic tasks, leveraging existing frameworks can dramatically accelerate development.

Observability: The Non-Negotiable Foundation

Moving an agent from a promising demo to a reliable, production-ready system is where most teams face their biggest challenges. One capability, observability, becomes the non-negotiable foundation for success in the real world.

As agents become more autonomous, the ability to see and understand their behavior becomes the most critical capability of all. In the world of deterministic software, the 80/20 rule meant spending most of your effort on QA before launch. As Nate explained, for probabilistic AI, that rule flips: you must now invest heavily in ongoing evaluation and observation of what your AI agents are actually doing in production.

This is because an AI agent will behave in ways you cannot predict. You are no longer determining the capabilities of the tool; you are discovering them. This requires a fundamental shift in mindset and investment, moving from a primary focus on pre-launch QA to a primary focus on post-launch observability. Without this continuous insight, teams are flying blind, unable to debug failures, measure performance, or build the trust required to deploy agents for mission-critical tasks.
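As a rough illustration of what post-launch observability can look like at the smallest scale, the sketch below wraps each agent step in a decorator that emits one structured log record with its inputs, output or error, and latency. It uses only the Python standard library; the logger name `agent.trace`, the `traced` decorator, and the record fields are assumptions made for this example, and a production system would more likely send these records to a dedicated tracing or observability platform.

```python
import functools
import json
import logging
import time
import uuid

logger = logging.getLogger("agent.trace")


def traced(step_name):
    """Decorator that emits one structured log record per agent step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {
                "span_id": uuid.uuid4().hex,
                "step": step_name,
                "inputs": repr((args, kwargs)),
            }
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
                record["status"] = "ok"
                record["output"] = repr(result)
                return result
            except Exception as exc:
                # Record the failure, then re-raise so callers still see it.
                record["status"] = "error"
                record["error"] = f"{type(exc).__name__}: {exc}"
                raise
            finally:
                elapsed = time.perf_counter() - start
                record["latency_ms"] = round(elapsed * 1000, 2)
                logger.info(json.dumps(record))
        return wrapper
    return decorator
```

Decorating a tool call with `@traced("lookup_weather")`, for example, leaves its behavior unchanged while producing a record for every invocation, including the ones that fail. Those records are the raw material for debugging failures and measuring performance after launch.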

Watch the full AI Explained fireside chat with Nate B. Jones.