This blog is also published in Tecton’s blog.

Imagine you've spent months building the perfect fraud detection model. It's catching suspicious transactions with impressive accuracy, saving your company millions in potential losses. But six months later, your false positives spike, customers get frustrated, and suddenly you're scrambling to understand what went wrong.

Your models are only as good as the data you’re feeding them. Your data can change over time, shifting away from your training data before you even know it, or it could even be broken upstream of your model. So, how do you make sure that the online feature values used for inference are still aligned with the features used to train your models?

Why Feature Drift Detection is Important for ML Performance

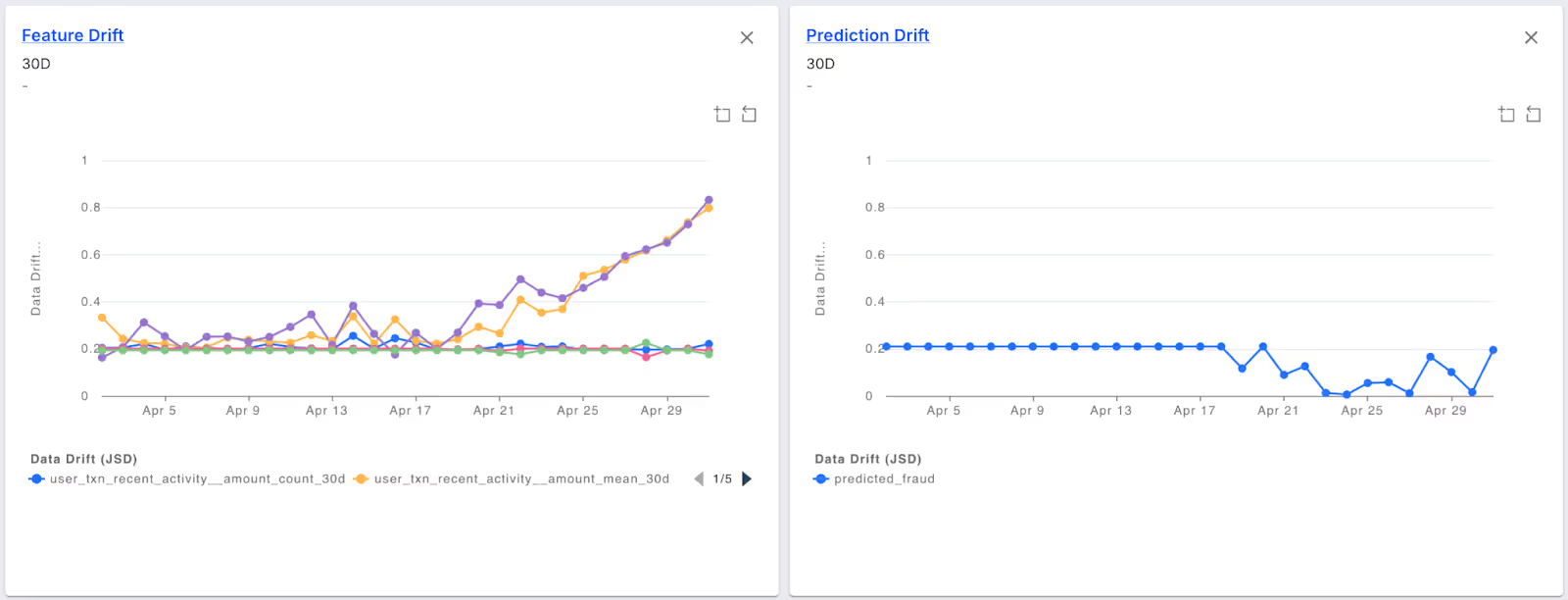

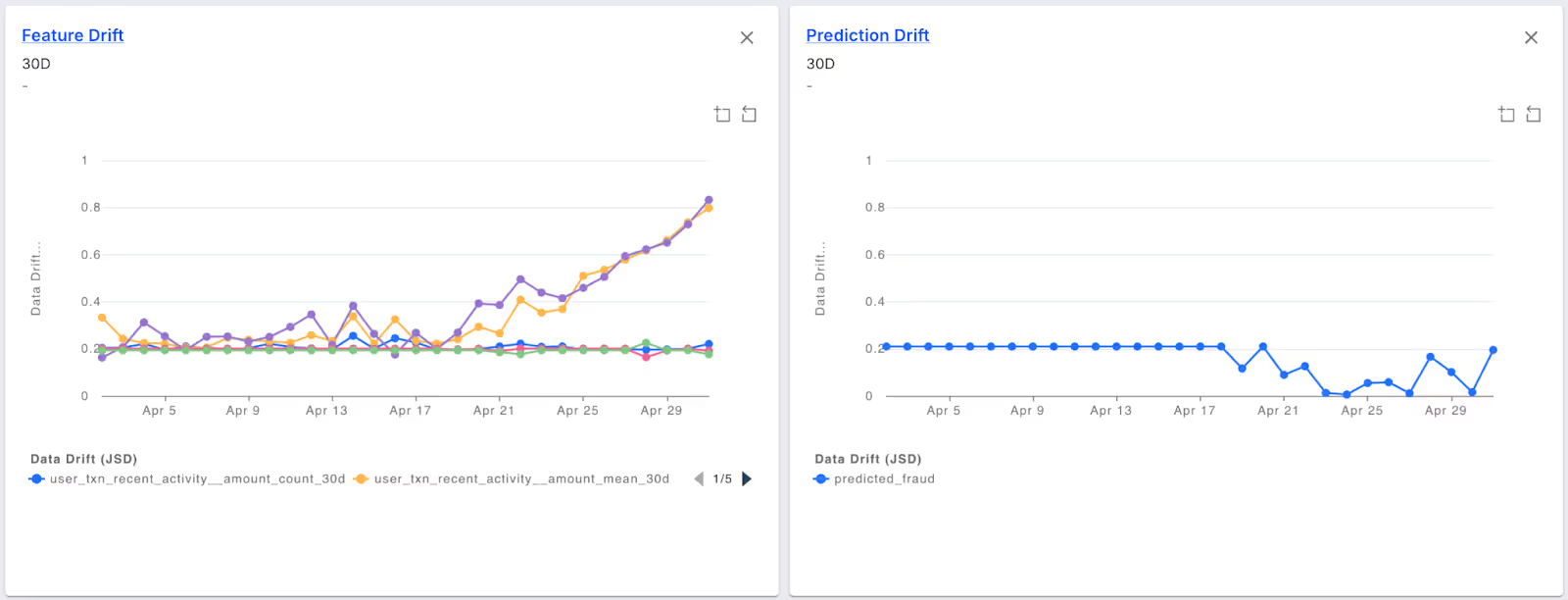

This Feature Drift chart shows two features that have been drifting in their distribution compared to the model's training baseline over the last two weeks. The Prediction Drift chart shows how the prediction distribution is changing as a result.

When feature distributions change significantly compared to your training baseline, three critical problems emerge:

- Model Performance Stability: Models are typically trained on historical data. When the properties of the input features change significantly, the model might not generalize well to new data, leading to performance degradation.

- Early Warning System: Detecting drift in features can act as an early warning mechanism. Before noticeable deterioration in predictive performance occurs, teams can be alerted to the changes in data distributions and take proactive measures.

- Data Integrity and Reliability: Proactive monitoring for feature drift helps ensure that the incoming data remains consistent with the data used in training. This is critical in regulated industries or high-stakes environments where decision-making relies on accurate predictions.

The Solution for Implementing Feature Drift Detection

We've built a powerful solution that combines two best-in-class platforms into a comprehensive ML monitoring solution:

Tecton

A feature platform like Tecton uses consistent feature definitions for offline training and online serving, which removes the initial risk of training/serving misalignment. It manages the data pipelines and continuously keeps features fresh and ready to serve for inference. Tecton’s declarative approach reduces the time it takes to design, test, and deploy production-ready feature pipelines from months to days.

While feature platforms usually include some basic data quality metrics and even data quality validation, the feature platform itself doesn’t usually offer extensive facilities for monitoring model performance or feature drift.

Fiddler

An AI Observability and Security platform for monitoring, analyzing, and improving ML model performance in production. The platform monitors 30 out-of-the-box ML metrics, 50 LLM metrics, and custom metrics. Based on the ML use case, it tracks drift — both in model output and in the features supplied as inputs. Fiddler is a robust early warning system that closes the loop in the ML lifecycle to drive ongoing model improvement.

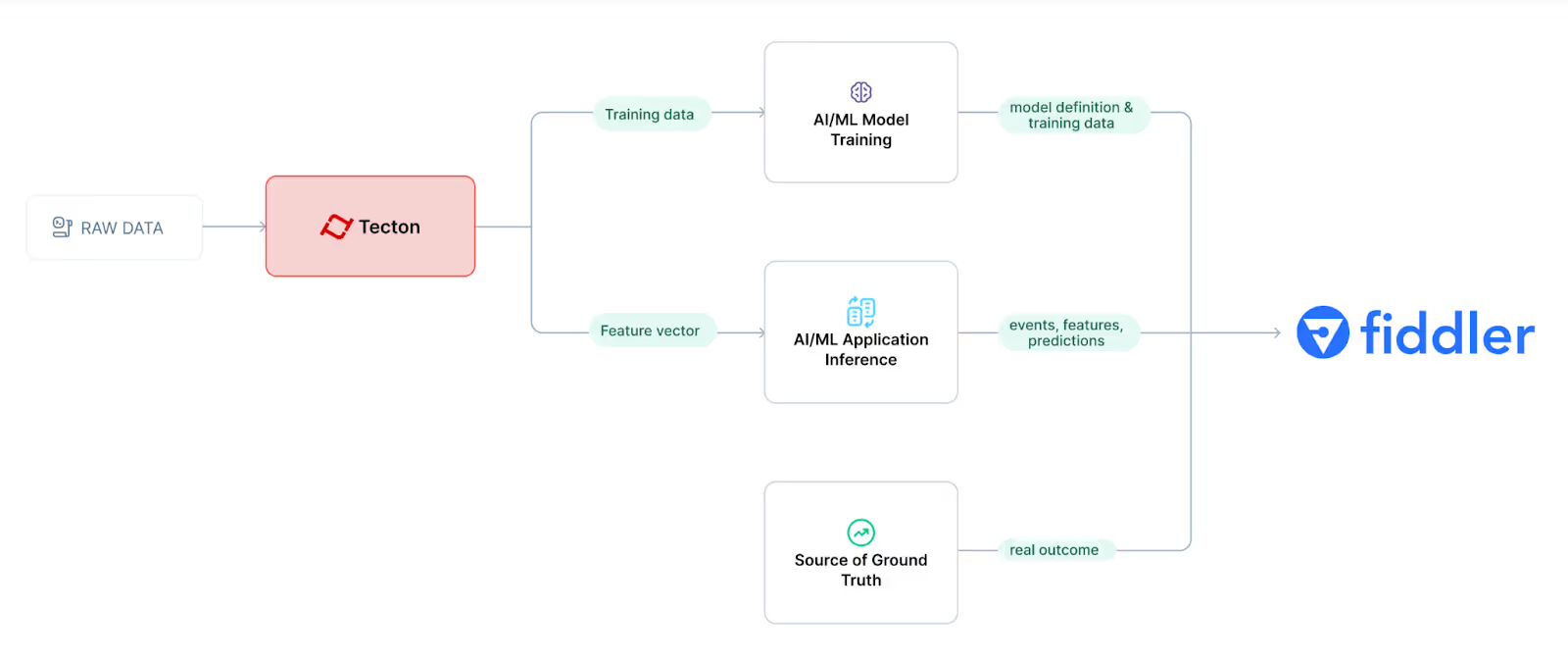

Together, Tecton’s feature platform and Fiddler’s AI Observability and Security capabilities help enterprises create training datasets, serve features online for inference, and send those same feature values and predictions to Fiddler for logging and monitoring.

Tecton + Fiddler Integration Data Flow

When training data is used to build a model, it can be uploaded to Fiddler to create the pre-production baseline that establishes expected feature and prediction distributions. When a model makes predictions using features from Tecton, the event identifier, the resulting prediction, and input features are submitted to Fiddler as an event or batch upload. At a later time, when the events are labeled, the ground truth associated with each event is uploaded to Fiddler. This enables Fiddler’s feature and model drift tracking by comparing online value distributions to the training baseline.

Here’s an example that uses Fiddler logging of training data and online feature data from Tecton as well as inference time results to deliver model and feature drift analysis.

Setting Up a Fraud Detection Feature Service in Tecton

For model training, we start with some labeled event data. In this fraud detection example, the label is “is_fraud”:

Tecton uses feature views to transform raw streaming, batch, and real-time data into features for training and serving models online.

First, we’ll create a streaming feature view to describe recent account activity.

With Tecton, features are managed as Python code modules typically written in interactive notebooks. When this code is applied to Tecton, data pipelines are created to update feature values for training and serving continuously.

A Streaming Feature

This fraud detection streaming feature example calculates features for recent spending totals and 30-day moving means and standard deviations:

@stream_feature_view(

source=transactions_stream,

entities=[user],

mode="pandas",

timestamp_field="timestamp",

features = [

Aggregate( name="sum_amount_10min", function="sum",

input_column=Field("amount", Float64),

time_window=timedelta(minutes=10)),

Aggregate( name="sum_amount_last_24h",function="sum",

input_column=Field("amount", Float64),

time_window=timedelta(hours=24)),

Aggregate( name = "amount_mean_30d",function="mean",

input_column=Field("amount", Float64),

time_window=timedelta(days=30)),

Aggregate( name = "amount_count_30d",function="count",

input_column=Field("amount", Float64),

time_window=timedelta(days=30)),

Aggregate( name = "amount_stddev_30d",function="stddev_samp",

input_column=Field("amount", Float64),

time_window=timedelta(days=30)),

],

)

def user_txn_recent_activity(transactions_stream):

return transactions_stream[["user_id", "timestamp", "amount"]]A Realtime Feature

We’ll also need a way to identify outliers when a new transaction comes in, so we’ll use a real-time feature view to calculate a z-score:

request_ds = RequestSource(

name = "request_ds",

schema = [Field("amount", Float64)]

)

request_time_features = RealtimeFeatureView(

name="request_time_features",

sources=[request_ds, user_txn_recent_activity],

features = [

Calculation(

name="amount_zscore_30d",

expr="""

( request_ds.amount -

user_txn_recent_activity.amount_mean_30d

)

/ user_txn_recent_activity.amount_stddev_30d

""",

),

],

)Finally, we’ll roll both of these feature views into a single feature service that we’ll use to deliver point-in-time correct training data and serve live features in production to a fraud detection model:

from tecton import FeatureService

fraud_detection_feature_service = FeatureService(

name="fraud_detection_feature_service",

features = [ user_txn_recent_activity, request_time_features]

)tecton apply

The `tecton apply` command publishes the data sources, feature views, and feature services defined in the Python modules into the platform. It initiates data processing jobs to populate the offline and online stores:

> tecton apply

Using workspace "fraud_detection" on cluster https://fintech.tecton.ai

✅ Imported 1 Python module from the feature repository

✅ Imported 1 Python module from the feature repository

⚠️ Running Tests: No tests found.

✅ Collecting local feature declarations

✅ Performing server-side feature validation: Initializing.

↓↓↓↓↓↓↓↓↓↓↓↓ Plan Start ↓↓↓↓↓↓↓↓↓↓

+ Create Stream Data Source

name: transactions_stream

+ Create Entity

name: user

+ Create Transformation

name: user_txn_recent_activity

+ Create Stream Feature View

name: user_txn_recent_activity

materialization: 11 backfills, 1 recurring batch job

> backfill: 10 Backfill jobs from 2023-12-02 00:00:00 UTC to 2025-03-25 00:00:00 UTC writing to the Offline Store

1 Backfill job from 2025-03-25 00:00:00 UTC to 2025-04-24 00:00:00 UTC writing to both the Online and Offline Store

> incremental: 1 Recurring Batch job scheduled every 1 day writing to both the Online and Offline Store

+ Create Realtime (On-Demand) Feature View

name: request_time_features

+ Create Feature Service

name: fraud_detection_feature_serviceGreat! Now we can query our feature service to enhance training events with features.

Pre-Production Training

Data scientists use the feature service method get_features_for_events to retrieve time-consistent training data from a notebook. Depending on the volume of the data, they can run training data generation using local compute or larger remote engines like Spark or EMR.

Given a dataframe of training events (labeled or not), get_features_for_events enhances each event with time-consistent feature values. Training data must be time-consistent to prevent initial feature drift in production. This means retrieving feature values precisely as they would have been calculated during each training event.

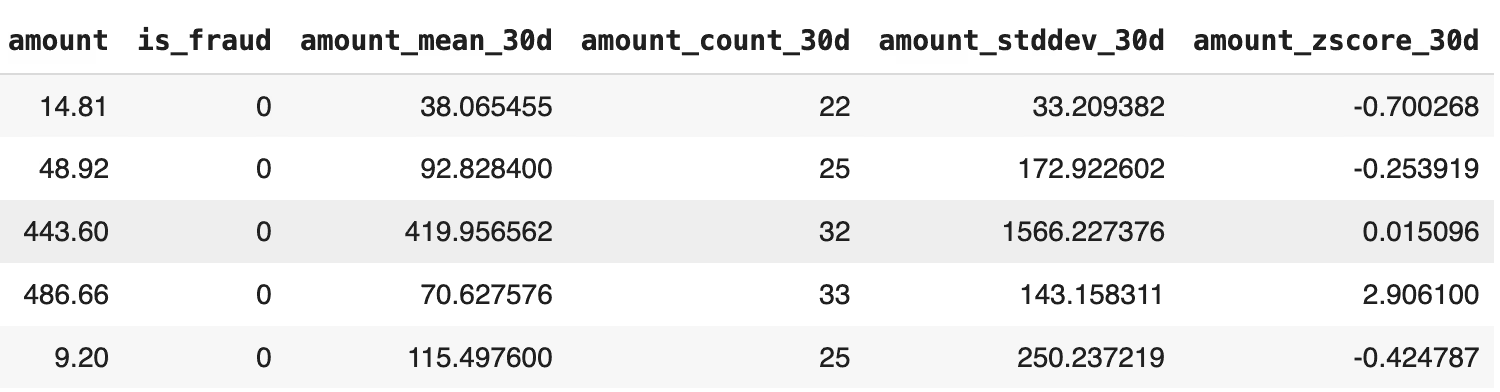

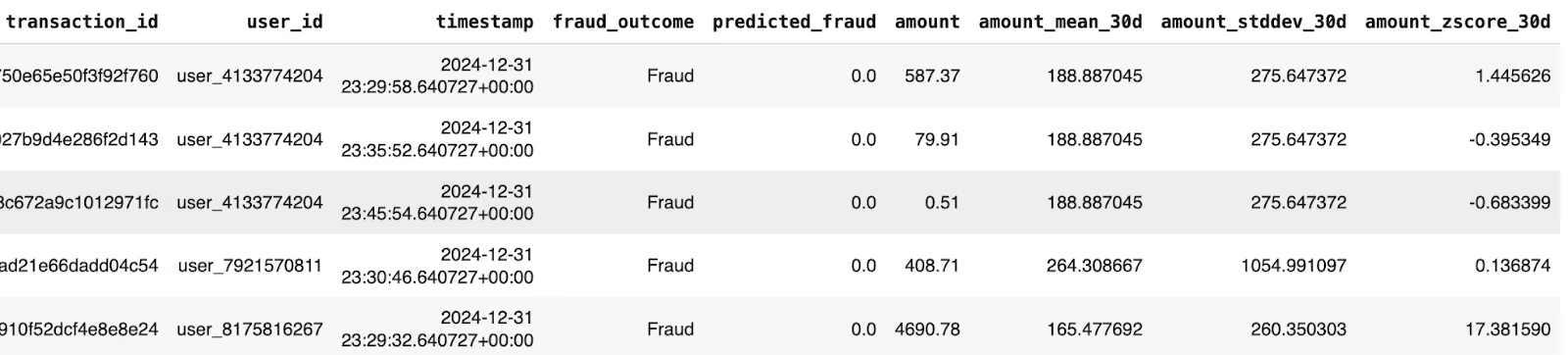

training_data = fraud_detection_feature_service.get_features_for_events(training_events)Here’s a sample of the resulting training_data:

This training data is then used to train and validate an ML model in your favorite ML development platform, and this is the point where we integrate Fiddler into our process.

Registering and Baselining Our Model in Fiddler

Next, we should register our model with Fiddler and create a baseline for the model training data and outputs.

Fiddler logging code integrates directly into your model training code and runs wherever and whenever you run your training. This might be in a notebook or a scheduled training script used when a model is ready for production deployment.

# get or create the project in Fiddler

project = fdl.Project.get_or_create( name="tecton_integration" )

# define the model schema

model_spec = fdl.ModelSpec(

inputs = input_columns, # list of model input features

outputs = ['predicted_fraud'], # model prediction

targets = ['fraud_outcome'], # truth label

metadata = ['user_id', 'timestamp'],

)

fdl_model = fdl.Model.from_data(

name = "transaction_fraud_monitoring",

version = "v1.0",

project_id = project.id,

source = training_result_data.sample(100), # training sample with predictions

spec = model_spec,

task = fdl.ModelTask.BINARY_CLASSIFICATION, # type of model

task_params = fdl.ModelTaskParams(target_class_order=['Not Fraud', 'Fraud']),

event_id_col = 'transaction_id', # column used to id each event

event_ts_col = 'timestamp', # event time column

)

# register new model on Fiddler

fdl_model.create()We also need to let Fiddler know what our baseline data for this model looks like so it can be used to compare new feature values for drift.

The trained model is used to calculate predictions for the baseline training data, and the actual outcome of the event (fraud_outcome) is added along with the model prediction (predicted_fraud):

input_data = training_data.drop(['transaction_id', 'user_id',

'timestamp', 'amount'], axis=1)

input_data = input_data.drop("is_fraud", axis=1)

input_columns = list(input_data.columns)

#calculate predictions

predictions = model.predict(input_data)

# add prediction to training set

training_data['predicted_fraud']=predictions.astype(float)

# add outcome column

training_data.loc[(training_data["is_fraud"]==0),'fraud_outcome'] = 'Not Fraud'

training_data.loc[(training_data["is_fraud"]==1),'fraud_outcome'] = 'Fraud'

display(training_data.sample(5))Here’s an excerpt of the baseline training data:

And here’s how we log that training data:

baseline_publish_job = fdl_model.publish(

source = training_data, # full training dataset with predictions

environment = fdl.EnvType.PRE_PRODUCTION,

dataset_name = "baseline_training_data", # dataset name

)

baseline_publish_job.wait() # optionally wait for job to completeDefining a Simple Model Application

Now that we have our feature service set up, we’ll build a simple application to run our model, feeding in live features from Tecton.

This sample application logic runs in a notebook cell to illustrate the steps used when retrieving online features to run inference with our model. In production, getting features from Tecton and evaluating the model is part of the application logic, which can be built on any tech stack.

current_transaction = {

"transaction_id": "57c9e62fb54b692e78377ab54e9d7387",

"user_id": "user_1939957235",

"timestamp": "2025-04-08 10:57:34+00:00"

"amount": 500.00

}

# feature retrieval for the transaction

feature_data = fraud_detection_feature_service.get_online_features(

join_keys = join_keys = {"user_id": current_transaction["user_id"]},

request_data = {"amount": current_transaction["amount"] }

)

# feature vector prep

columns = [ f["name"].replace(".", "__")

for f in feature_data["metadata"]["features"]]

data = [ feature_data["result"]["features"]]

features = pd.DataFrame(data, columns=columns)[X.columns]

# inference

prediction = {"predicted_fraud": model.predict(features).astype(float)[0]}Hooking up Fiddler in our Model Application

When a model makes predictions using features from Tecton, the prediction result along with the input features and event identifiers are logged to Fiddler as an event. This enables Fiddler feature and model drift tracking by comparing values to the training baseline.

So we add this code to each prediction:

publish_data = current_transaction | features.to_dict('records')[0] | prediction

project = fdl.Project.from_name( name = 'tecton_integration')

fdl_model = fdl.Model.from_name( name = 'transaction_fraud_monitoring',

project_id = project.id)

fdl_model.publish( publish_data, environment=fdl.EnvType.PRODUCTION)Since this is a fraud detection example, the ground truth is whether the transaction is fraudulent or not. In most cases, this information is unknown until some arbitrary time later, like when the cardholder reports a transaction as fraudulent. This is why Fiddler models include the event_id_col, which indicates which data column uniquely identifies an event. You can use Fiddler’s asynchronous logging of the target column using the event_id_col to associate a logged prediction with its corresponding ground truth.

event_update = [

{

'fraud_outcome': 'Fraud',

'transaction_id': '57c9e62fb54b692e78377ab54e9d7387',

},

]

fdl_model.publish(

source = event_update,

environment = fdl.EnvType.PRODUCTION,

update = True,

)Payoff: Proactive ML Monitoring in Action

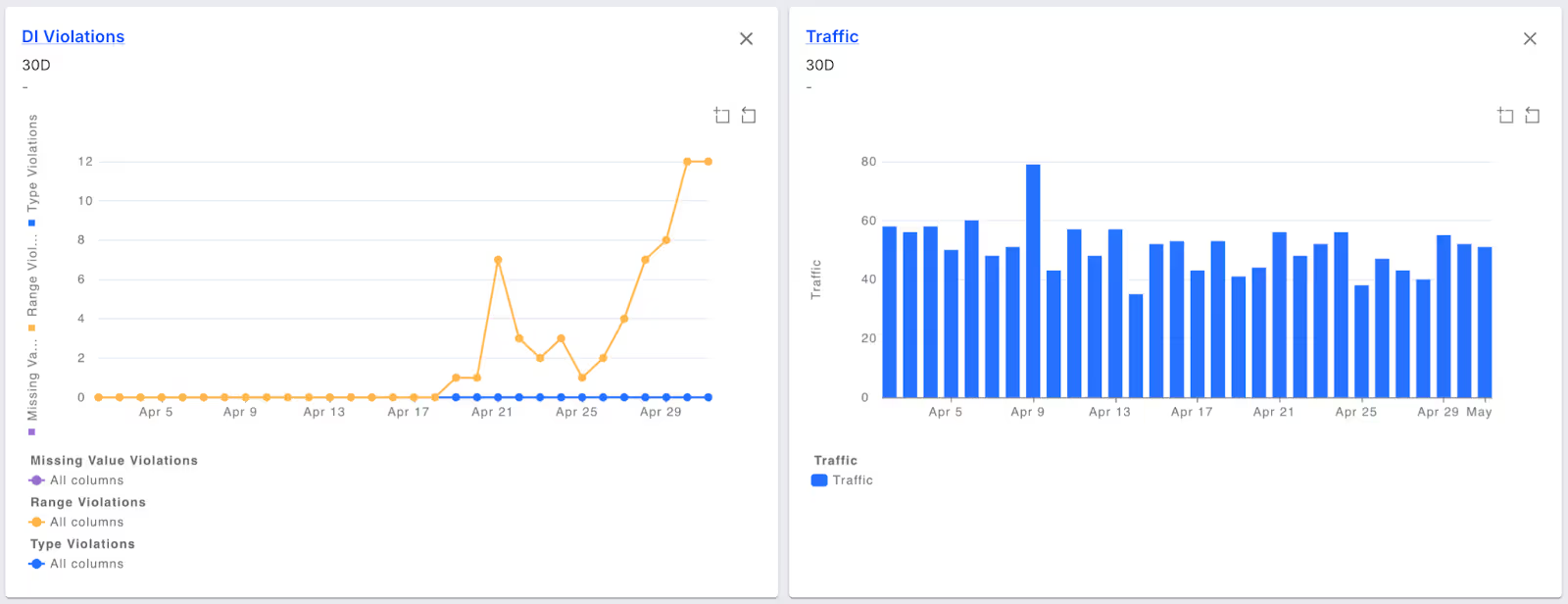

We did all this work so that we can leverage Fiddler to give us feedback on model performance, and so that we can get early alerts on our feature data pipeline. This feedback can then be used to adjust feature engineering in Tecton and/or retrain the model.

The Fiddler dashboard shows us features that are drifting over the last two weeks and how they affect the prediction distribution:

Boom! We now have both:

- A great monitor of our model performance, ensuring we maintain fraud detection accuracy over time

- An early warning system for issues in our upstream data or skew in our features

By setting up Fiddler alerts, we can be notified whenever any of the features or model distributions drift beyond a threshold, allowing us to address model issues before they impact the business.

Learn more about Tecton and Fiddler: