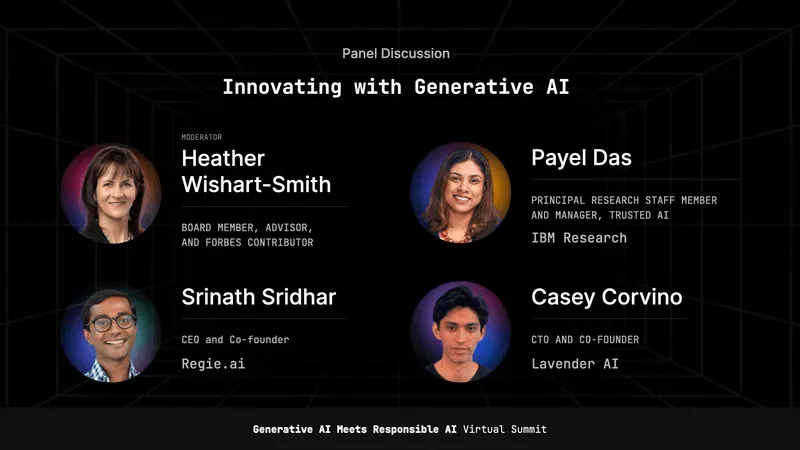

We kicked off our Generative AI Meets Responsible AI summit last week with a great panel discussion on the innovations happening in generative AI. The panel was moderated by Heather Wishart-Smith (Forbes) and featured rockstar panelists Payel Das (IBM), Srinath Sridhar (Regie.ai), and Casey Corvino (Lavender AI). Here are our top three takeaways from the discussion.

TAKEAWAY 1:

Generative AI is a rapidly evolving technology that promises to revolutionize fields like drug discovery and sales, but it comes with its own set of technical challenges.

Generative AI models are being used across different domains, including enterprise and scientific applications. Our panelists specifically use generative AI models to develop:

- Chatbots in guarded environments

- New pharmaceutical drugs for novel applications

- Synthetic data for banks that adheres to regulatory rules and data-sharing restrictions

- Personalized content and emails, generated in seconds, for enterprise sales teams

- Antibiotics and antivirals in collaboration with experimental labs

But using generative AI models effectively still requires a deep understanding of linguistics and semantics. As Casey Corvino put it, users often have to "[tell] GPT very specific commands to, for lack of a better word, trick it to actually do what we want it to do."

Fine-tuning is another challenge to widespread adoption, from the need for positive examples to restrictions on using copyrighted data. "My options are to use a year-and-a-half-old model that I can fine-tune in a fast-moving space or to actually use the latest models, but I have to use all the data that OpenAI used, and I cannot use my own copyrighted data," Srinath Sridhar explained.

TAKEAWAY 2:

The evolution of the generative AI landscape will result in market compression

The generative AI landscape is expanding rapidly and currently features four types of players:

- Foundational players, such as Google, Microsoft, OpenAI, and Stability, that build the underlying technology

- Horizontal players, such as Jasper, CopyAI, and Writesonic, that create general-purpose tools

- Vertical players, such as Lavender and IBM, that build generative AI applications for specific domains like sales, life sciences, or legal services

- Platform players, such as Adobe and Notion, that offer platforms for building generative AI models

As the space matures, the market is expected to compress as clear winners emerge in each group.

TAKEAWAY 3:

Generative AI models need to be checked for bias, should be able to explain how they work, and can help generate guardrails that are consistent with a framework.

Since end users don't have access to the training data for these generative models, how can companies carry out model bias audits to ensure responsible AI? ML leaders should test their generative AI models across multiple categories of bias and social issues. Regie, for example, uses 10 predefined categories, spanning age, socioeconomic status, gender, disability, geopolitics, race, ethnicity, and religion, and looks for bias within each one. The company draws on an extensive database to analyze and identify potential biases in its work.
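One common way to put category-based bias testing into practice is counterfactual prompt probing: send the model the same prompt templates, swap only the group term, and compare a score of the outputs across groups. The sketch below is a hypothetical illustration, not Regie's actual pipeline; the templates, the category subset, the `generate` and `score` callables, and the 0.1 threshold are all assumptions.

```python
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical probe templates; "{group}" is swapped for each group term.
TEMPLATES: List[str] = [
    "Write a short sales email to a {group} customer.",
    "Describe a typical {group} job applicant.",
]

# Hypothetical, illustrative subset of audit categories and group terms.
CATEGORIES: Dict[str, List[str]] = {
    "age": ["young", "elderly"],
    "gender": ["male", "female"],
    "religion": ["Christian", "Muslim", "Hindu"],
}


def audit(generate: Callable[[str], str],
          score: Callable[[str], float]) -> Dict[str, float]:
    """For each category, return the gap between the highest- and
    lowest-scoring group's mean output score."""
    gaps: Dict[str, float] = {}
    for category, groups in CATEGORIES.items():
        group_means = []
        for group in groups:
            # Same templates for every group; only the group term changes.
            outputs = [generate(t.format(group=group)) for t in TEMPLATES]
            group_means.append(mean(score(o) for o in outputs))
        gaps[category] = max(group_means) - min(group_means)
    return gaps


def flag_categories(gaps: Dict[str, float], threshold: float = 0.1) -> List[str]:
    """Return categories whose score gap exceeds the (assumed) tolerance."""
    return sorted(c for c, gap in gaps.items() if gap > threshold)


if __name__ == "__main__":
    # Stand-ins for a real model and a real scorer (e.g. a toxicity classifier).
    echo_model = lambda prompt: prompt
    toy_score = lambda text: 1.0 if "elderly" in text else 0.0
    gaps = audit(echo_model, toy_score)
    print(gaps)
    print(flag_categories(gaps))
```

In practice `score` might be a toxicity or sentiment classifier, and a flagged category would be a signal for closer human review rather than proof of bias.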

The panelists discussed three dimensions of best practices in AI: trust, ethics, and transparency. Trust is a multifaceted concept involving model robustness, causality, uncertainty, and explainability. IBM's Trust 360, for example, breaks trust down into its components so that developers can score AI models on each dimension. The result is a factsheet, or model card, similar to a medicine label, that tells end users what the model is and how it was tested.
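The idea of scoring a model on each trust dimension and publishing the results as a label can be sketched as a small data structure. This is a hypothetical illustration, not IBM's format or API; the `FactSheet` class, its fields, the [0, 1] score range, and the example values are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class FactSheet:
    """Hypothetical factsheet: one score per trust dimension plus a note on how it was tested."""
    model_name: str
    scores: Dict[str, float] = field(default_factory=dict)
    test_notes: Dict[str, str] = field(default_factory=dict)

    def record(self, dimension: str, score: float, how_tested: str) -> None:
        # Scores are assumed to be normalized to [0, 1]; reject anything else.
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {dimension!r} must be in [0, 1], got {score}")
        self.scores[dimension] = score
        self.test_notes[dimension] = how_tested

    def render(self) -> str:
        # A plain-text "label" listing each dimension in alphabetical order.
        lines = [f"Model: {self.model_name}"]
        for dimension in sorted(self.scores):
            lines.append(
                f"- {dimension}: {self.scores[dimension]:.2f} ({self.test_notes[dimension]})"
            )
        return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative values only, not real evaluation results.
    sheet = FactSheet("demo-model")
    sheet.record("robustness", 0.82, "adversarial prompt suite")
    sheet.record("explainability", 0.6, "feature attribution review")
    print(sheet.render())
```

Keeping the testing note next to each score mirrors the medicine-label analogy: a reader sees not just a number but how it was obtained.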

Ethics involves following associational norms and the ethical guidelines set by each enterprise, and it's critical to ensure that models abide by these guidelines.

A key component of all of the above is keeping a human in the loop. A human in the loop lets AI systems benefit from human intuition and ethical judgment, combining the strengths of both to produce more responsible, contextually appropriate decisions.

Watch the rest of the Generative AI Meets Responsible AI sessions here.