Bias in AI has come to the forefront in recent months; our recent blog post discussed the alleged Apple Card/Goldman Sachs bias issue. This isn't an isolated instance: racial bias in healthcare algorithms and bias in AI used for judicial decisions are just a few more examples of rampant, hidden bias in AI algorithms.

AI has had dramatic successes in recent years, but Fiddler Labs was started to address a critical issue: explainability in AI. Today's complex AI algorithms are black boxes. They can work well, but their inner workings are unknown and unexplainable, which is why we end up with situations like the Apple Card/Goldman Sachs controversy. Gender or race might not be explicitly encoded in these algorithms, yet subtle and deep biases can creep into the data fed into them. It doesn't matter whether the input factors are directly biased themselves: bias can be, and is being, inferred by AI algorithms.

Companies have no proof that their models are, in fact, not biased. On the other hand, some of the examples we've seen from customers provide substantial evidence of bias. Complex AI algorithms are invariably black boxes, and unless AI solutions are designed with a foundational fix, we'll continue to see more such cases. Consider the biased healthcare algorithm mentioned above. Even though race was intentionally excluded, the algorithm still behaved in a biased way, possibly because of inferred characteristics.
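To make "inferred characteristics" concrete, here is a minimal, entirely synthetic sketch. It is not drawn from the healthcare study or from any customer data. A decision rule that never reads the protected attribute, but relies on a correlated "proxy" feature, still approves the two groups at very different rates. The feature names, the 0.55 threshold and the data-generating distributions are hypothetical, chosen only for illustration.

```python
import random


def make_applicants(n, seed=0):
    """Generate hypothetical applicants; 'group' is the protected attribute."""
    rng = random.Random(seed)
    applicants = []
    for _ in range(n):
        group = rng.choice(["A", "B"])
        # Hypothetical proxy feature (e.g. a neighborhood score) that is
        # correlated with group membership but is not the group itself.
        proxy = rng.gauss(0.7 if group == "A" else 0.4, 0.1)
        applicants.append({"group": group, "proxy": proxy})
    return applicants


def approve(applicant, threshold=0.55):
    """Hypothetical decision rule: it never reads 'group', only the proxy."""
    return applicant["proxy"] >= threshold


def approval_rates(applicants):
    """Fraction of applicants approved within each protected group."""
    totals, approved = {}, {}
    for a in applicants:
        g = a["group"]
        totals[g] = totals.get(g, 0) + 1
        approved[g] = approved.get(g, 0) + int(approve(a))
    return {g: approved[g] / totals[g] for g in totals}


rates = approval_rates(make_applicants(10_000))
print(rates)
print("demographic parity gap:", abs(rates["A"] - rates["B"]))
```

Group A is approved far more often than group B, even though `approve` has no access to the group label. Removing a sensitive column is therefore not evidence that a model is unbiased; comparing outcomes across groups, as `approval_rates` does here, is one simple way to surface the problem.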

Prevention is better than cure

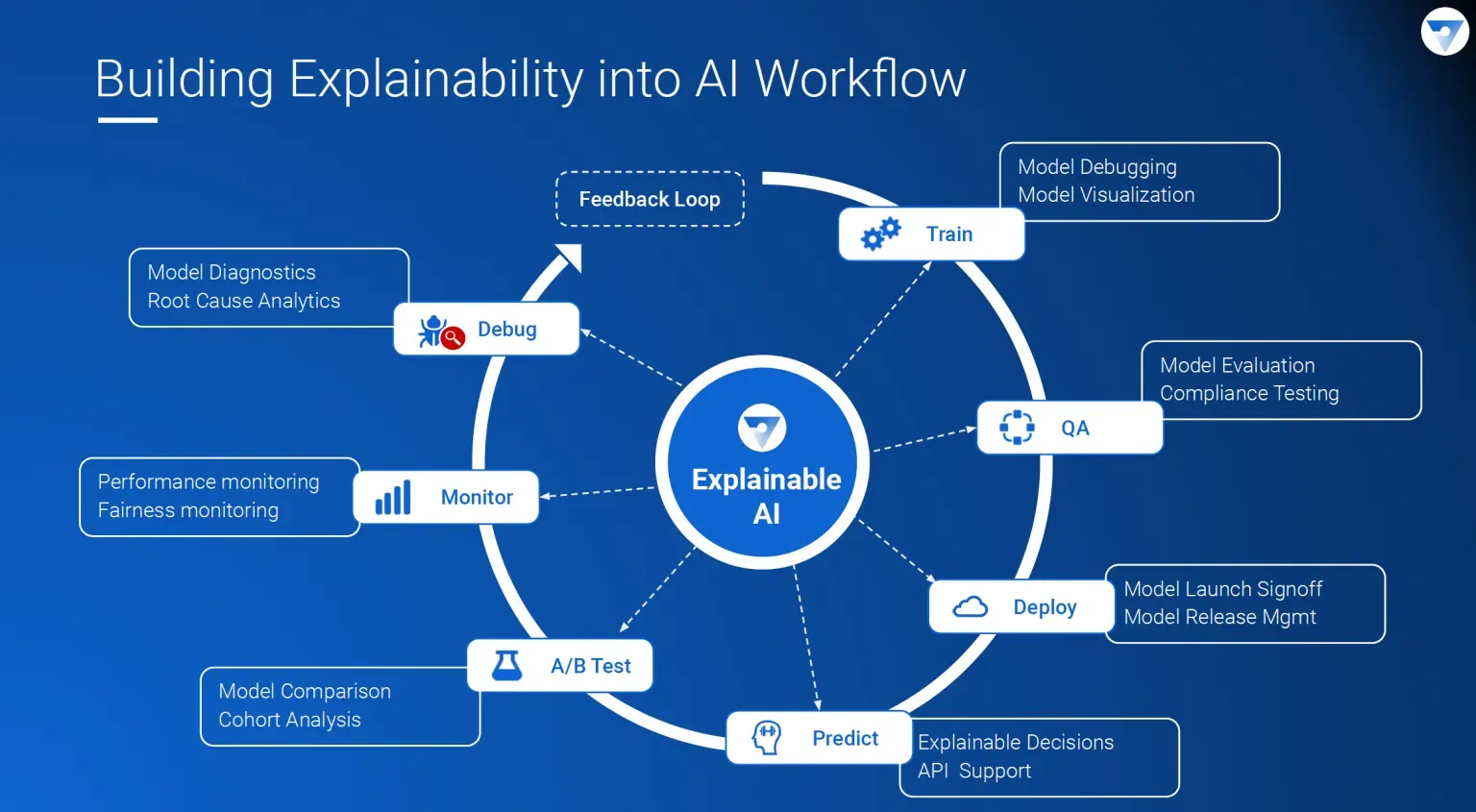

One of the main problems with AI today is that issues are detected after the fact, usually when people have already been impacted by them. This needs to change. Explainability needs to be a fundamental part of any AI solution, from design all the way to production, not just part of a post-mortem analysis. To prevent bias, we need visibility into the inner workings of AI algorithms, as well as into the data, throughout the AI lifecycle. We also need humans in the loop who monitor these explainability results and override algorithm decisions where necessary.

What is explainable AI and how can it help?

Explainable AI is the best way to understand the "why" behind your AI. It tells you why a certain prediction was made and shows how inputs relate to outputs. Explainability plays a critical role at every stage, from the training data through model validation, testing and production.
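As an illustration of what "why a certain prediction was made" can mean, here is a minimal sketch that assumes a simple linear scoring model. Each feature is credited with `w_i * (x_i - baseline_i)`, and for a linear model these contributions add up exactly to the gap between the prediction and a reference prediction. The model, its weights, the feature names and the baseline are all hypothetical. This is not Fiddler's or Google's implementation; non-linear models need more general attribution methods, such as Shapley values or integrated gradients.

```python
from typing import Dict

# Hypothetical linear credit-scoring model: score = BIAS + sum(w_i * x_i).
WEIGHTS: Dict[str, float] = {"income": 0.4, "debt_ratio": -0.8, "years_employed": 0.2}
BIAS = 0.1


def predict(x: Dict[str, float]) -> float:
    """Score an input with the hypothetical linear model."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def attribute(x: Dict[str, float], baseline: Dict[str, float]) -> Dict[str, float]:
    """Per-feature contribution w_i * (x_i - baseline_i).

    For a linear model these contributions sum exactly to
    predict(x) - predict(baseline), so they fully account for how this
    prediction differs from the reference input (e.g. an average applicant).
    """
    return {name: WEIGHTS[name] * (x[name] - baseline[name]) for name in WEIGHTS}


# Hypothetical reference applicant and the applicant being explained.
baseline = {"income": 1.0, "debt_ratio": 0.3, "years_employed": 5.0}
applicant = {"income": 0.8, "debt_ratio": 0.6, "years_employed": 2.0}

contribs = attribute(applicant, baseline)
# Print the most negative contributions (biggest reasons for a lower score) first.
for name, value in sorted(contribs.items(), key=lambda kv: kv[1]):
    print(f"{name:>15}: {value:+.3f}")
print("total change in score:", predict(applicant) - predict(baseline))
```

Here `years_employed` accounts for the largest share of this applicant's lower score. Because the contributions sum to the total change, a reviewer can check that nothing is left unexplained. That completeness property is one reason attribution methods are popular for explanations.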

Explainability is critical to AI success

With the recent launch of Google Cloud's Explainable AI, the conversation around Explainable AI has accelerated. Google's launch debunks a claim we've heard a lot recently: that Explainable AI is two years out. It shows that companies need to move fast and adopt explainability as part of their machine learning workflows, immediately.

But this raises the question: who should be doing the explaining?

What do businesses need in order to trust the predictions? First, they need explanations so they understand what's going on behind the scenes. Then they need to know for a fact that these explanations are accurate and trustworthy, and that they come from a reliable source.

At Fiddler, we believe there needs to be a separation between church and state. If Google builds AI algorithms and also explains them to customers without any third-party involvement, the incentives are misaligned, and customers have little reason to fully trust their AI models. This is a catch-22 for any company in the business of building AI models, which is why impartiality and independent third parties are crucial: they provide an all-important independent opinion on algorithm-generated outcomes. For the same reason, third-party AI governance and explainability services are not just nice-to-haves; they are crucial for AI's evolution and use going forward.

Google’s change in stance on Explainability

Google has significantly changed its stance on explainability. It also went back and forth on AI ethics, starting an ethics board only to dissolve it in less than a fortnight. Over the last couple of years, a few top Google executives have gone on record saying that they don't necessarily believe in explaining AI. In one instance, they said that '...we might end up being in the same place with machine learning where we train one system to get an answer and then we train another system to say – given the input of this first system, now it's your job to generate an explanation.' In another, they said that 'Explainable AI won't deliver. It can't deliver the protection you're hoping for. Instead, it provides a good source of incomplete inspiration'.

One might wonder about Google’s sudden launch of an Explainable AI service. Perhaps they got feedback from customers or they see this as an emerging market. Whatever the reason, it's great that they did in fact change their minds and believe in the power of Explainable AI. We are all for it.

Our belief at Fiddler Labs

We started Fiddler with the goal of building explainability into the core of the AI workflow. We believe in a future where explanations not only give businesses much-needed answers about how their AI algorithms work, but also help ensure they are launching ethical and fair algorithms for their users. Our work with customers is bearing fruit as we continue on this journey.

Finally, we believe that ethics plays a big role in explainability, because the ultimate goal of explainability is to ensure that companies are building ethical and responsible AI. For explainability to achieve that goal, we need an agnostic and independent approach, and this is what we're working on at Fiddler.

Founded in October 2018, Fiddler's mission is to enable businesses of all sizes to unlock the AI black box and deliver trustworthy AI experiences for their customers. Fiddler's next-generation Explainable AI Engine enables data science, product and business users to understand, analyze, validate and manage their AI solutions, providing transparent and reliable experiences to their end users. Our customers include pioneering Fortune 500 companies as well as emerging tech companies. For more information, please visit www.fiddler.ai or follow us on Twitter @fiddlerlabs.