The rapid adoption of artificial intelligence (AI) is transforming operations across industries, from automating customer service to powering advanced predictive analytics. Among these innovations, large language models (LLMs) have become central to modern enterprise strategies. But as AI capabilities expand, so do the challenges related to compliance, ethics, and security.

Managing compliance and risk is especially critical for LLMs, which can generate sensitive content or inadvertently expose private information. Organizations need robust governance tools that address these risks by providing visibility into, and control over, LLMs in production.

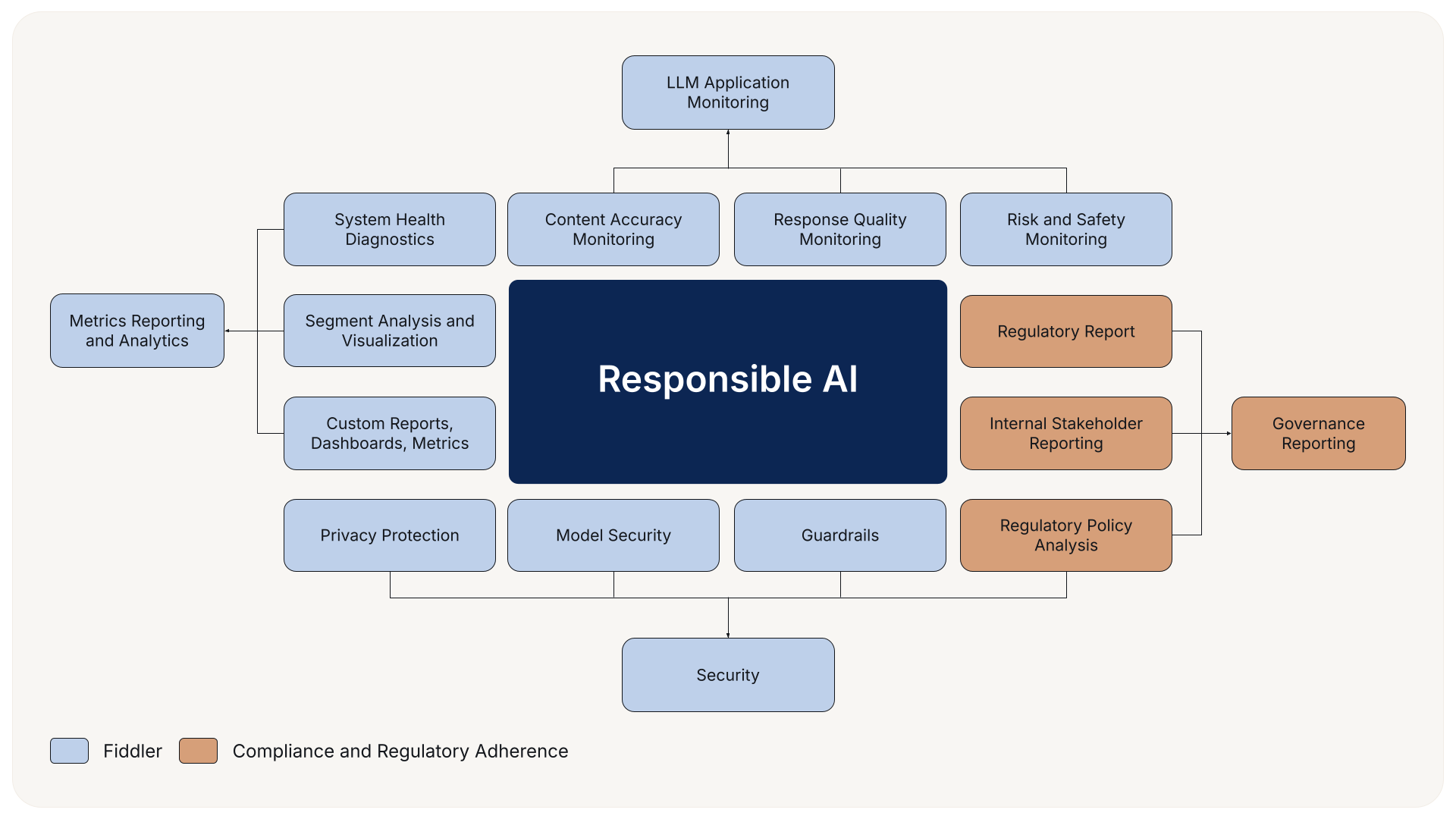

The Fiddler AI Observability and Security platform offers a unified solution to help organizations ensure LLM compliance, strengthen risk management, and build trust as they scale generative and predictive AI technologies.

In this blog, we’ll break down the fundamentals of LLM compliance, risk management, and governance, examine the evolving regulatory landscape, and explore how Fiddler enables responsible and safe AI deployment at scale.

Understanding AI Compliance

What is AI Compliance?

AI compliance involves meeting regulatory, legal, and ethical standards in the development and use of AI technologies. For LLMs, this means ensuring systems are accurate, safe, and secure, while protecting data privacy and upholding human rights. Key focus areas include accuracy, data protection, and accountability.

Why Does AI Compliance Matter?

Compliance is critical when working with LLMs. Because these models can generate sensitive content or leak private information, data privacy safeguards and output monitoring are essential. Failure to meet compliance requirements can lead to legal penalties, reputational harm, and loss of customer trust.

By prioritizing compliance, organizations ensure responsible LLM use, reduce risk, and build more trustworthy, secure AI systems, while maintaining a competitive edge and meeting growing regulatory demands.

AI Governance and Regulatory Compliance

Establishing AI Governance Structures

Effective LLM compliance begins with a strong governance foundation. Organizations must clearly define roles and responsibilities for AI oversight, establish policies for ethical AI use, and implement a structured compliance process to monitor LLM system performance and impact.

A well-defined AI governance framework ensures alignment between organizational goals, regulatory requirements, and ethical standards, strengthening risk management efforts and enabling continuous improvement throughout the LLM lifecycle.

Regulatory Requirements for AI

AI regulations are evolving rapidly worldwide. Landmark legislation like the European Union’s AI Act sets stringent requirements for transparency, risk assessment, and human oversight. Other jurisdictions are developing similar rules to address LLM risks comprehensively.

Organizations must stay informed about regulatory changes, conduct regular risk assessments, and adapt their governance frameworks to maintain compliance. This dynamic environment demands agile compliance strategies supported by real-time monitoring tools.

International Standards for AI Compliance

Global standards from the International Organization for Standardization (ISO) offer clear frameworks for managing AI risks, ensuring transparency, and maintaining accountability. One key standard is ISO/IEC 42001, the first international standard focused on AI management systems. It provides requirements and guidance for establishing, implementing, maintaining, and continually improving an AI management system within organizations.

By adopting ISO/IEC 42001, organizations can formalize their approach to AI governance, ensure responsible LLM development, and demonstrate compliance with global best practices. These standards support consistent risk management, improve decision-making, and help organizations align their AI systems with legal and ethical expectations across jurisdictions.

LLM Risk Management and Data Protection

LLM Risk Management Frameworks (AI RMF)

AI risk management frameworks offer structured approaches to identifying, assessing, and mitigating risks associated with LLM systems. These frameworks guide organizations in addressing technical, ethical, and operational risks, from inaccuracies to cybersecurity vulnerabilities.

Aligning AI RMFs with regulatory requirements ensures comprehensive risk mitigation. By integrating compliance checks into risk management processes, enterprises can anticipate regulatory scrutiny and respond swiftly to emerging threats.
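To make the identify, assess, and mitigate cycle concrete, here is a minimal sketch of a risk register that scores each LLM risk by likelihood × impact and records the compliance checks that mitigate it. The 1 to 5 scales, the priority thresholds, and the example risks are illustrative assumptions, not requirements of any particular framework such as the NIST AI RMF.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    """One entry in a hypothetical LLM risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    controls: list[str] = field(default_factory=list)  # compliance checks that mitigate this risk

    @property
    def score(self) -> int:
        # Classic likelihood x impact scoring, giving a value from 1 to 25.
        return self.likelihood * self.impact

    @property
    def priority(self) -> str:
        # Illustrative thresholds; tune them to your organization's risk appetite.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def triage(register: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(register, key=lambda r: r.score, reverse=True)


register = [
    Risk("PII leakage in responses", likelihood=3, impact=5, controls=["PII output scan"]),
    Risk("Toxic or unsafe output", likelihood=2, impact=4, controls=["toxicity guardrail"]),
    Risk("Hallucinated policy advice", likelihood=4, impact=3, controls=["human review"]),
]

for risk in triage(register):
    print(f"{risk.priority:<6} {risk.score:>2}  {risk.name} -> {', '.join(risk.controls)}")
```

Keeping controls attached to each risk makes it easy to spot risks that have no compliance check at all, which is often the first gap an auditor will ask about.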

Implementing and Monitoring AI Compliance

Organizations must implement structured compliance measures and maintain ongoing oversight through continuous audits and updates to ensure responsible LLM deployment.

Implementing LLM Compliance Measures

LLM compliance requires establishing precise controls across data usage, model training, testing, and deployment. These measures ensure systems operate responsibly and meet legal and ethical standards. Key best practices include:

- Enforcing data privacy and protection policies.

- Documenting model decisions, training data, and development processes.

- Implementing human-in-the-loop oversight to monitor and correct outputs when needed.
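The documentation and human-in-the-loop practices above can be combined in one simple pattern: record every LLM interaction as an append-only audit entry, and route low-confidence or flagged responses to a reviewer. This is a minimal sketch that assumes a confidence score and policy flags are computed upstream; the field names, the 0.7 threshold, and the in-memory log are hypothetical choices for illustration.

```python
import io
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import TextIO


@dataclass
class AuditRecord:
    """Hypothetical audit entry documenting one LLM interaction."""
    prompt: str
    response: str
    model_version: str
    confidence: float  # assumed to come from an upstream evaluator
    flags: list[str] = field(default_factory=list)  # e.g. ["pii"] from upstream policy checks
    needs_review: bool = False
    record_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: float = field(default_factory=time.time)


REVIEW_THRESHOLD = 0.7  # illustrative; set per use case and risk level


def route(record: AuditRecord, log: TextIO) -> AuditRecord:
    """Decide whether a human must review the response, then append the record as one JSON line."""
    record.needs_review = record.confidence < REVIEW_THRESHOLD or bool(record.flags)
    log.write(json.dumps(asdict(record)) + "\n")
    return record


audit_log = io.StringIO()  # stand-in for an append-only file or log store
low = route(AuditRecord("What is my balance?", "Your balance is $120.", "v1.2", confidence=0.55), audit_log)
ok = route(AuditRecord("What are your hours?", "We open at 9am.", "v1.2", confidence=0.93), audit_log)
print(low.needs_review, ok.needs_review)  # True False
```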

Regular Audits and Updates

Compliance is an ongoing process. Continuous monitoring and scheduled audits are critical to aligning with regulatory requirements and industry standards. Organizations must routinely review and update compliance protocols to reflect changes in regulations, emerging technologies, and operational insights.

Challenges of LLM Compliance and Risk Management

As large language models (LLMs) become increasingly embedded in enterprise workflows, organizations face several challenges in ensuring compliance and managing risk effectively.

1. Limited Visibility

LLMs process unstructured, context-dependent data such as natural language, making their outputs difficult to evaluate using traditional accuracy metrics. What appears correct in one context may be misleading or inappropriate in another. As a result, monitoring the quality, reliability, and safety of LLM outputs requires advanced observability tools and deeper contextual analysis.

2. Evolving Regulatory Landscape

Global AI regulations like the EU AI Act and proposed U.S. frameworks are rapidly evolving. Staying current with shifting compliance requirements is difficult, especially when deploying LLMs across multiple regions.

3. Risk of Privacy Violations

LLMs trained on vast datasets may inadvertently generate or leak sensitive or personal information, raising serious privacy and security risks. These issues can expose organizations to legal consequences, regulatory penalties, and significant reputational damage.
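A common first line of defense against leakage is to scan model outputs for personal data before they are returned or logged. This minimal sketch uses a few regular expressions (email addresses, U.S.-style phone numbers, and the U.S. Social Security number format); the patterns are illustrative assumptions and far from exhaustive, so production systems typically pair them with a trained PII or named-entity detector.

```python
import re

# Illustrative patterns only; real PII coverage needs far more than this.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Replace matches with [TYPE] placeholders and report which PII types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


output = "Contact Jane at jane.doe@example.com or 555-123-4567. SSN: 123-45-6789."
clean, types = redact_pii(output)
print(clean)  # Contact Jane at [EMAIL] or [PHONE]. SSN: [SSN].
print(types)  # ['EMAIL', 'PHONE', 'SSN']
```

Returning the detected types alongside the redacted text lets the same check feed an audit log or alert, not just silently clean the output.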

4. Content Safety

Without rigorous oversight, LLMs can produce harmful, toxic, or unsafe outputs. Ensuring responsible LLM development requires continuous toxicity detection and mitigation.
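Continuous toxicity detection is often implemented as a guardrail: each response is scored, and anything at or above a threshold is replaced before delivery. In this minimal sketch, `toy_toxicity_score` is a deliberately crude keyword stand-in for a real toxicity classifier, and the 0.5 threshold and fallback message are assumptions. The scorer is a parameter so a real model can be swapped in without changing the guard.

```python
from typing import Callable

FLAGGED_TERMS = {"idiot", "stupid", "worthless"}  # toy vocabulary, not a real toxicity lexicon
FALLBACK = "Sorry, I can't share that response."


def toy_toxicity_score(text: str) -> float:
    """Return 1.0 if any flagged term appears, else 0.0 (stand-in for a classifier probability)."""
    words = {w.strip(".,!?;:").lower() for w in text.split()}
    return 1.0 if words & FLAGGED_TERMS else 0.0


def guard(response: str,
          scorer: Callable[[str], float] = toy_toxicity_score,
          threshold: float = 0.5) -> tuple[str, float]:
    """Return the response (or a fallback if it scores too high) together with its score."""
    score = scorer(response)
    if score >= threshold:
        return FALLBACK, score
    return response, score


print(guard("Happy to help with your refund."))  # ('Happy to help with your refund.', 0.0)
print(guard("That is a stupid question."))       # ("Sorry, I can't share that response.", 1.0)
```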

5. Inadequate Monitoring and Auditing Tools

Many organizations lack the real-time visibility and monitoring infrastructure needed to proactively detect compliance gaps or model drift, which leaves their risk management efforts reactive rather than preventive.
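Drift detection is one monitoring capability that is easy to illustrate. A widely used statistic is the Population Stability Index (PSI), which compares the distribution of a monitored value in production against a baseline: PSI = Σ (actual − expected) × ln(actual / expected), summed over bins. This minimal sketch bins both samples on the baseline's quantiles; the 10 bins, the epsilon, and the 0.2 alert threshold are common rules of thumb rather than fixed standards, and the monitored value (for an LLM, perhaps prompt length or an evaluator score) is an assumption here.

```python
import numpy as np


def psi(baseline, production, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a production sample."""
    baseline = np.asarray(baseline, dtype=float)
    production = np.asarray(production, dtype=float)
    # Interior cut points at baseline quantiles, so each bin holds roughly equal baseline mass.
    cuts = np.quantile(baseline, np.linspace(0.0, 1.0, bins + 1))[1:-1]

    def proportions(sample: np.ndarray) -> np.ndarray:
        # searchsorted maps each value to a bin index in [0, bins - 1];
        # values beyond the baseline range fall into the outermost bins.
        idx = np.searchsorted(cuts, sample, side="right")
        return np.bincount(idx, minlength=bins) / len(sample)

    # Clip empty bins to eps to avoid log(0) and division by zero.
    expected = np.clip(proportions(baseline), eps, None)
    actual = np.clip(proportions(production), eps, None)
    return float(np.sum((actual - expected) * np.log(actual / expected)))


rng = np.random.default_rng(0)
baseline = rng.normal(0.0, 1.0, 5_000)  # e.g. a monitored score captured at launch
stable = rng.normal(0.0, 1.0, 5_000)
shifted = rng.normal(0.75, 1.0, 5_000)  # simulated drift

for name, sample in [("stable", stable), ("shifted", shifted)]:
    value = psi(baseline, sample)
    print(f"{name}: PSI = {value:.3f} -> {'ALERT' if value > 0.2 else 'ok'}")
```

Quantile-based bins keep every baseline bin populated, so the statistic stays stable even when the monitored value has a skewed distribution.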

Strengthening LLM Compliance and Risk Management with Fiddler

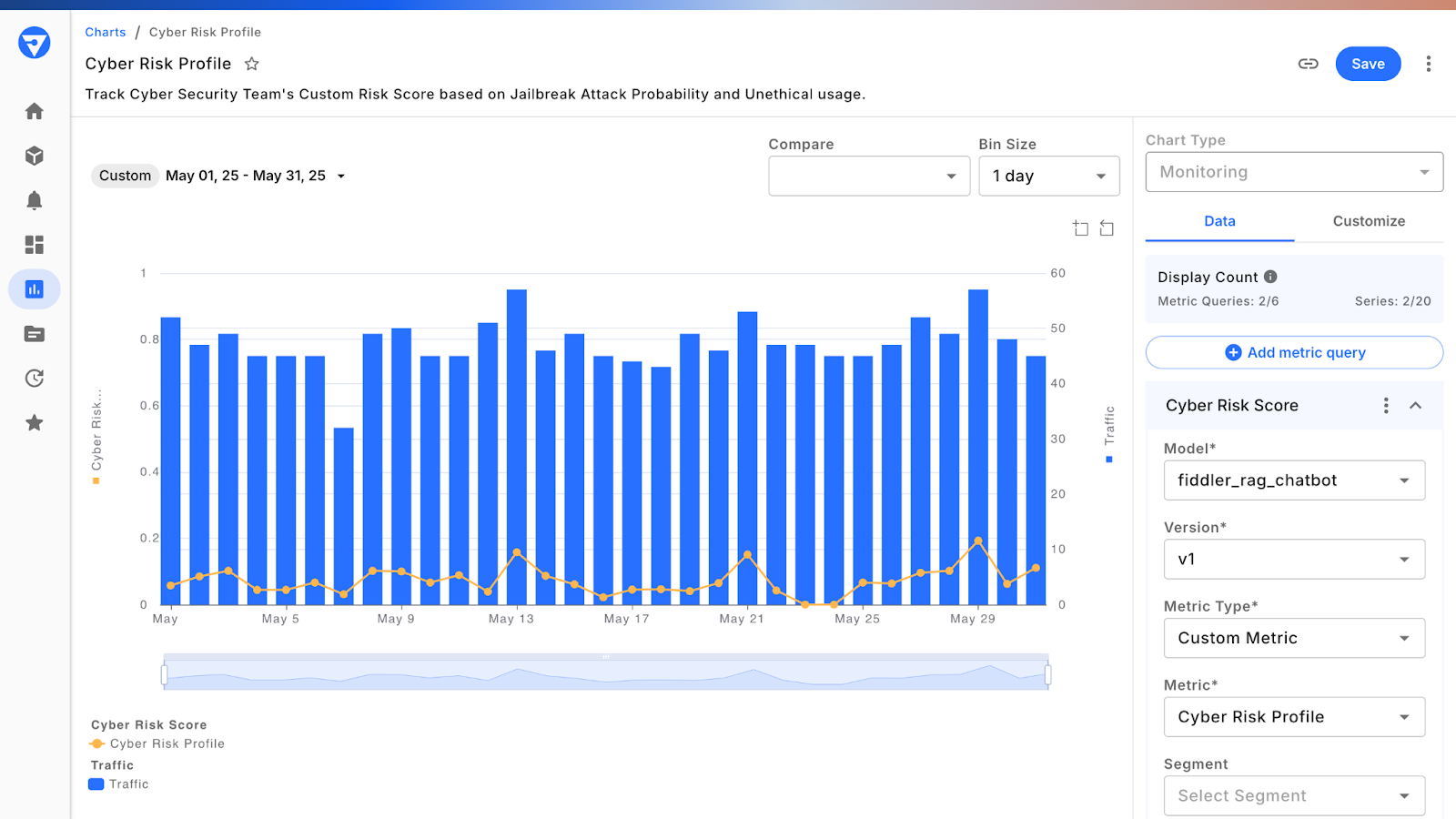

Fiddler helps organizations navigate the complexities of LLM compliance and risk management. It provides real-time monitoring, explainability tools, and audit-ready evidence to demonstrate adherence to compliance requirements and regulatory standards.

Key benefits of using Fiddler include:

- Enhanced transparency: Understand LLM outputs and model behavior to identify harmful content, inaccuracies, or privacy risks.

- Automated risk detection: Continuously detect anomalies and potential compliance violations across generative AI workflows.

- Regulatory alignment: Stay current with evolving compliance requirements through built-in governance workflows.

- Scalable oversight: Apply effective risk management practices across diverse AI applications and production environments.

By integrating the Fiddler AI Observability and Security platform, organizations can confidently scale LLM-powered applications while meeting internal governance standards and external regulatory mandates, supporting accurate, safe, and responsible AI development.

Ready to enhance AI compliance and risk management? Explore how Fiddler’s security and compliance solutions can strengthen your LLMs today.

Frequently Asked Questions About AI Compliance and Risk Management

1. What is AI compliance?

AI compliance ensures that AI systems meet legal, ethical, and regulatory standards and operate responsibly and transparently.

2. How is AI used in risk management?

AI is used in risk management to help organizations identify, assess, and mitigate risks by analyzing data patterns and monitoring AI models in real time. It also flags potential issues such as unsafe content, system failures, or privacy concerns, enabling more secure and compliant AI operations.

3. How can AI identify risks?

AI can identify potential risks by detecting anomalies, tracking performance drift, and using predictive analytics to uncover failures or compliance breaches. It also plays a key role in monitoring data security, helping organizations prevent unauthorized access or data leaks.
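Anomaly detection on a monitored metric can be as simple as flagging points that sit far from their recent history. This minimal sketch computes a trailing z-score over a fixed window; the 7-point window, the 3-sigma threshold, and the example metric (a hypothetical daily count of guardrail-blocked responses) are assumptions for illustration.

```python
from statistics import mean, stdev


def zscore_anomalies(values: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` std devs from the trailing-window mean."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily counts of blocked responses; day 10 spikes.
blocked_per_day = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15, 48, 14]
print(zscore_anomalies(blocked_per_day))  # [10]
```

A trailing window adapts to gradual changes in the baseline, while a sudden spike like day 10 still stands out; slower drift is better caught with distribution-level statistics such as PSI.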