What is the difference between observability and monitoring?


As machine learning (ML) and artificial intelligence (AI) become more widespread, more companies need to put model monitoring procedures and data observability practices in place. But what do these terms mean? How are they similar, and how do they differ?

What are monitoring and observability?

Monitoring and observability are two terms that are frequently used when talking about machine learning and artificial intelligence. Let’s dive into these two concepts to understand how they are similar and different.

What observability means in machine learning

“Observability” often refers to the statistics, performance data, and metrics from the entire machine learning lifecycle. Think of observability as a window into your data—without the ability to view the inputs, you won’t understand why you’re getting the outputs you receive. Once you have observability, your MLOps team will have actionable insights to improve your models.
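As a rough illustration of that window into your data, the sketch below summarizes each input and output field in a batch of prediction records. It is a minimal Python example using only the standard library. The function names and the record fields used in the usage note (`credit_score`, `prediction`) are hypothetical and do not come from any particular platform.

```python
import math
import statistics


def summarize_feature(values):
    """Return basic summary statistics for one numeric field.

    None entries are counted as missing and excluded from the statistics.
    """
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "mean": statistics.fmean(present) if present else math.nan,
        "min": min(present) if present else math.nan,
        "max": max(present) if present else math.nan,
    }


def summarize_batch(rows):
    """Summarize every field found in a batch of records (a list of dicts)."""
    field_names = sorted({name for row in rows for name in row})
    return {
        name: summarize_feature([row.get(name) for row in rows])
        for name in field_names
    }
```

Calling `summarize_batch` on a list such as `[{"credit_score": 700, "prediction": 0.2}, {"credit_score": None, "prediction": 0.8}]` returns one summary per field, including how many values were missing. Logging these summaries for every batch is one simple way to see what is going into a model and what is coming out.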

What monitoring means in machine learning

Model monitoring describes the close tracking of ML models with the goal of identifying potential issues and correcting them before poor model performance impacts the business. This monitoring can occur at any stage of the model lifecycle, from identifying model bias in the early stages to spotting data drift after the model is deployed. One way to think about model monitoring is as a coach for an athlete. As the athlete practices, the coach notes how the athlete is performing and gives immediate feedback on corrections and tweaks to improve overall performance.
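One way to make "spotting data drift" concrete is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample (for example, training data) against a recent production sample. The sketch below is a minimal, standard-library version. The function name, the equal-width binning, and the `floor` smoothing value are illustrative choices, and it assumes both samples are non-empty lists of numbers.

```python
import math


def population_stability_index(baseline, current, bins=10, floor=1e-6):
    """Compare two numeric samples using equal-width bins over the baseline range.

    Returns 0.0 when both samples fall into the bins in identical proportions;
    larger values mean the distributions differ more.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # all baseline values are identical; avoid dividing by zero

    def bin_proportions(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) // width)
            # Values outside the baseline range go into the first or last bin.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # Floor empty bins so the logarithm below stays defined.
        return [max(c / len(values), floor) for c in counts]

    base = bin_proportions(baseline)
    cur = bin_proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))
```

A commonly quoted rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but that cutoff is an assumption to tune per feature rather than a fixed standard.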

What is the difference between observability and monitoring?

You may be wondering, is observability the same as monitoring? The terms are often used interchangeably because they overlap so much. In fact, when discussing observability vs. monitoring, the shades of difference don’t particularly matter, because both terms share the same goal: better models.

Where do monitoring and observability fit into the MLOps lifecycle?

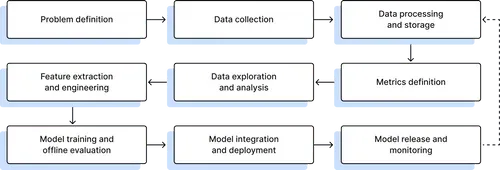

The MLOps lifecycle typically has nine stages:

- Problem definition: Developing a model begins with identifying the issue the model will address.

- Data collection: The model requires a large amount of data to train with, so relevant and usable data must be collected.

- Data processing or storage: Once the data is collected, it must be stored. Because of the volume of data needed, it is often kept in a data lake or warehouse.

- Metrics definition: In order to properly evaluate the quality and success of the model, the key metrics must be chosen.

- Data exploration: Data scientists will analyze the data to develop hypotheses about what modeling techniques would be most useful.

- Feature extraction and engineering: The aspects of the data that will be used as inputs are selected by data scientists. An example of a feature could be the credit score of a user.

- Model training and offline evaluation: The data that has been collected and processed is now used to train the models and evaluate their performance in order to select the approach with the best results.

- Model integration and deployment: After the training and validation of the previous stage, models need to be integrated into the product. This may involve building new services or other digital infrastructure.

- Model release and monitoring: Models are not “set it and forget it.” Once the model is live, close monitoring is vitally important in order to stay in compliance with regulatory requirements, catch and fix drift, identify opportunities to retrain on new data, and handle a myriad of other situations.

As you can see, model monitoring is built into the last stage of the MLOps lifecycle, and data observability is vital at every step along the way. Without this view into your data and close scrutiny of your models, your AI quickly becomes a black box with minimal insight into its operation. Observability and monitoring are key to explainable AI, making your black box more like a glass box so you can always understand the ‘why’ behind model decisions.
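To illustrate what close monitoring after release might look like in practice, here is a minimal sketch that tracks accuracy over the most recent labeled predictions and flags when it falls below a chosen level. The class name, window size, and threshold are hypothetical. It also assumes ground-truth labels eventually arrive for production predictions, which is not true of every use case.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over a sliding window of labeled predictions."""

    def __init__(self, window_size=100, alert_threshold=0.9):
        # deque with maxlen drops the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, predicted, actual):
        """Store whether a single prediction matched its true label."""
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """Flag only when the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.alert_threshold
```

Waiting for a full window before flagging is a design choice that avoids alerts based on a handful of early predictions. A flag from a check like this could prompt a closer look at drift or a decision to retrain on newer data.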

What are observability and monitoring tools?

Observability and model monitoring tools for AI can come in many different forms. From dashboards to platforms, there are several ways you can attempt to build observability and monitoring into your MLOps process. One example is our AI observability platform. We have extended traditional model monitoring to provide in-depth model insights and actionable steps, giving detailed explanations and model analytics. Users can easily review real-time monitored output and dig into the “why” and “how” behind model behavior.

Try Fiddler for free to better understand how continuous model monitoring and explainable AI can help:

- Detect, understand, and remedy data drift issues.

- Illuminate the “why” behind decision-making to find and fix root causes.

- Ensure continued performance by monitoring for outliers.