Choosing the Right AI Approach: When to Use Agents Over Prompts or API Calls

From automation to insight generation, Artificial Intelligence (AI) is reengineering how enterprises interact with data and make decisions. The AI toolkit has evolved from simple API calls to large language model (LLM) prompts and now to autonomous agents, each designed to handle different levels of complexity and control. Knowing which approach to use, and when, is essential for success.

As enterprise needs grow more complex, the demand increases for intelligent systems that can remember, reason, plan, and act. AI agents meet this need by offering capabilities that go far beyond what stateless prompts or deterministic APIs can provide.

For technical leaders, ML and LLM teams, and strategic decision-makers, understanding when to use prompts, APIs, or agents is key to building effective AI systems. With the right approach, your organization can unlock greater performance and flexibility. The Fiddler AI Observability and Security platform supports this evolution by providing the visibility, context, and control AI teams need to deploy agentic AI at scale.

Key Takeaways

- AI agents outperform prompts and API calls in tasks requiring planning, memory, and dynamic decision-making across multiple steps or tools.

- Prompts and API calls remain ideal for simple, fast, or deterministic tasks that don’t require context retention or tool orchestration.

- Fiddler Agentic Observability enables scalable deployment of AI agents by offering complete visibility from sessions to spans, contextual insights, and diagnostics for root cause analysis.

What Are AI Agents and Why Do They Matter?

An AI agent is an autonomous system that interacts with its environment to achieve a defined objective. Unlike one-shot prompts or pre-coded API calls, agents retain memory, adapt to new inputs, and make decisions across multiple steps. Many rely on natural language processing to interpret user intent, extract context, and coordinate actions across systems.

Core Capabilities of Autonomous AI Agents

To fully understand how AI agents work, it’s essential to explore the core capabilities that enable them to operate autonomously and adapt to real-world complexity.

- Memory and state retention: Agents maintain context across user interactions, tasks, and tool usage, enabling continuity and coherence in dynamic workflows.

- Goal-driven planning: They interpret objectives, formulate strategies, and adapt actions based on evolving inputs or conditions.

- Multi-step reasoning: Agents can break down complex tasks into logical steps and execute them sequentially to achieve a desired outcome.

- Tool usage and orchestration: They can autonomously access and coordinate multiple systems, such as APIs, databases, and internal applications, to complete tasks.

These foundational capabilities are essential for building AI agents that can operate independently across complex enterprise workflows, adapting to dynamic input and coordinating across systems.

They help solve high-value enterprise challenges, such as:

- Automating complex workflows across multiple departments

- Enhancing customer support by resolving multi-intent queries

- Synthesizing knowledge across multiple documents

- Powering intelligent assistants for revenue operations or supply chain planning
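The four core capabilities above (memory, goal-driven planning, multi-step reasoning, and tool orchestration) can be illustrated with a deliberately small sketch. Everything here is hypothetical: `ToyAgent`, `lookup_order`, and `draft_reply` are illustrative names, the tools are plain functions standing in for real APIs, and the planner returns a fixed list where a real agent would likely consult a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


# Hypothetical tools: plain functions standing in for APIs or databases.
def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


def draft_reply(context: str) -> str:
    return f"Reply based on [{context}]"


@dataclass
class ToyAgent:
    tools: Dict[str, Callable[[str], str]]
    # Memory: every (step name, result) pair is retained across steps.
    memory: List[Tuple[str, str]] = field(default_factory=list)

    def plan(self, goal: str) -> List[str]:
        # Stand-in for goal-driven planning; the plan is hard-coded here.
        return ["lookup_order", "draft_reply"]

    def run(self, goal: str, initial_input: str) -> str:
        result = initial_input
        for step in self.plan(goal):
            # Multi-step execution: each tool receives the previous result.
            result = self.tools[step](result)
            self.memory.append((step, result))
        return result


agent = ToyAgent(tools={"lookup_order": lookup_order, "draft_reply": draft_reply})
final = agent.run("resolve customer query", "A17")
print(final)
print(agent.memory)
```

By contrast, a single prompt or API call would correspond to invoking just one of these functions, with nothing retained afterward.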

Prompt vs Agent vs API Call: A Comparison

Here’s how the three approaches compare across key attributes:

When to Use AI Agents

AI agents are best suited for high-complexity, goal-driven tasks that require reasoning, tool orchestration, and autonomous adaptation. In the following scenarios, agents deliver clear advantages over simple prompts or API calls:

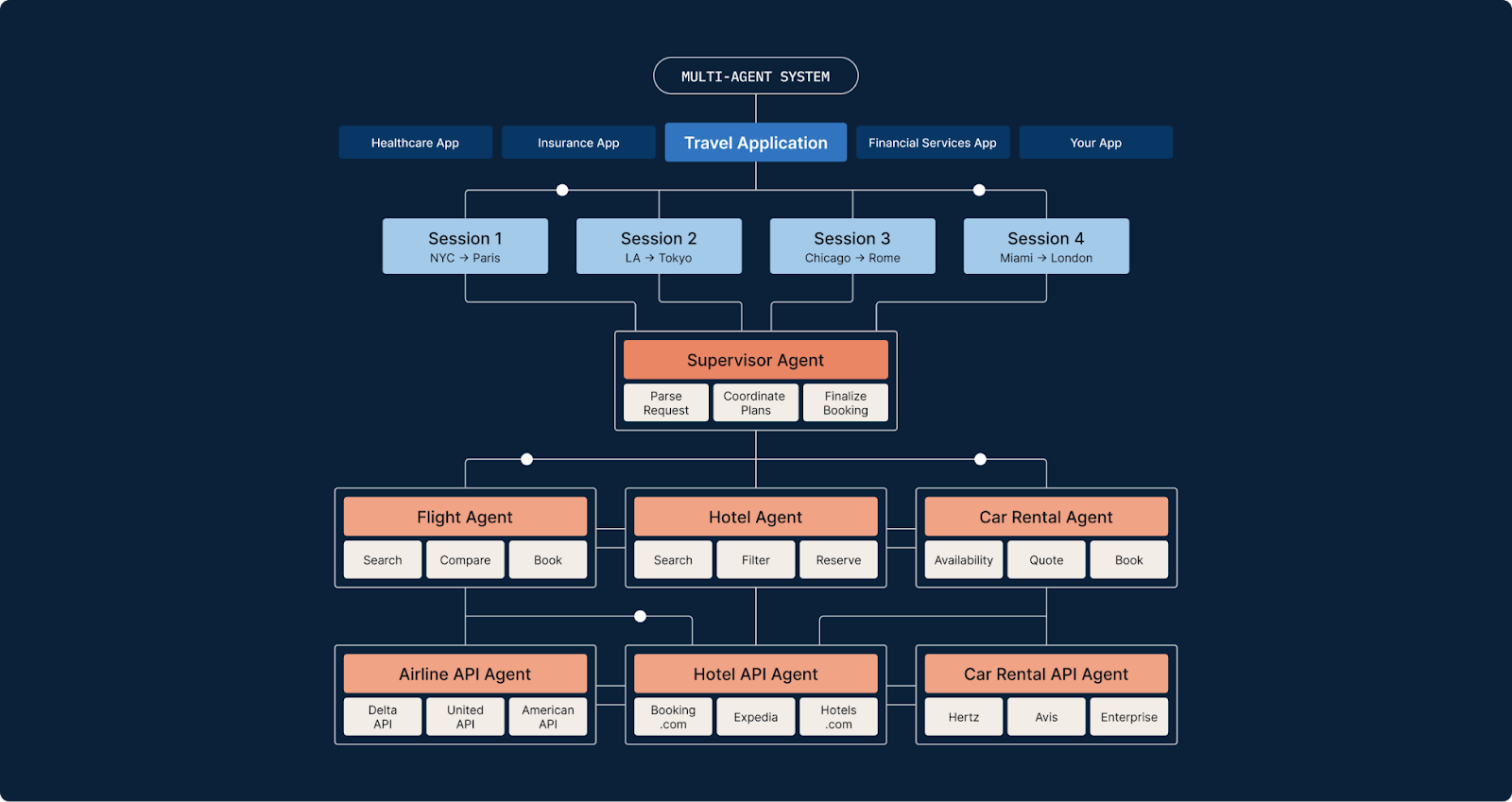

1. Complex, Multi-Step Workflows

An AI travel assistant that checks policy constraints, searches inventory, compares options, and books trips based on real-time traveler input and availability.

2. Decision-Making Under Uncertainty

A customer support agent that interprets ambiguous or emotionally nuanced queries and selects from multiple resolution paths, including escalation when necessary.

3. Tool Chaining and Integration

An AI system that retrieves documents via retrieval-augmented generation (RAG), synthesizes insights, and generates visualizations, seamlessly integrating AI agents into each stage of the workflow without requiring human prompts.

4. Autonomous Task Execution

A sales assistant that follows up with leads, updates CRM records, and produces opportunity forecasts using live pipeline data.

5. Cross-Agent Coordination

In complex environments such as trading or supply chain management, multiple AI agents collaborate to negotiate resources, adjust schedules, and respond to changing conditions in real time.

These use cases reflect real-world enterprise challenges where traditional prompt-response methods fall short. AI agents excel in dynamic, multi-layered environments that demand flexibility, memory, and strategic decision-making.

When Prompts or API Calls Are Enough

For many straightforward applications (such as handling repetitive tasks like text formatting, summarization, or classification), simple prompts or API calls offer efficient, effective solutions without the need for agentic complexity.

Prompts and APIs are especially well-suited for:

- Single-step generation: For example, generating a product description or drafting a quick email response.

- Latency-sensitive applications: Such as real-time chatbots that require sub-second response times.

- Tightly controlled pipelines: Including workflows like summarizing legal documents, where predictability and consistency are critical.

- Security-critical operations: Such as audit logging, financial reporting, or compliance tasks that demand strict transparency and traceability.

In these scenarios, simpler approaches help reduce unpredictability, lower operational costs, and streamline governance.

How Fiddler Enables High-Performing, Scalable Agentic AI

Deploying AI agents in production introduces real challenges, including observability, risk mitigation, latency tracking, and regulatory compliance. The Fiddler AI Observability and Security platform addresses these complexities with Agentic Observability, purpose-built for today’s multi-agent systems.

What Differentiates Fiddler:

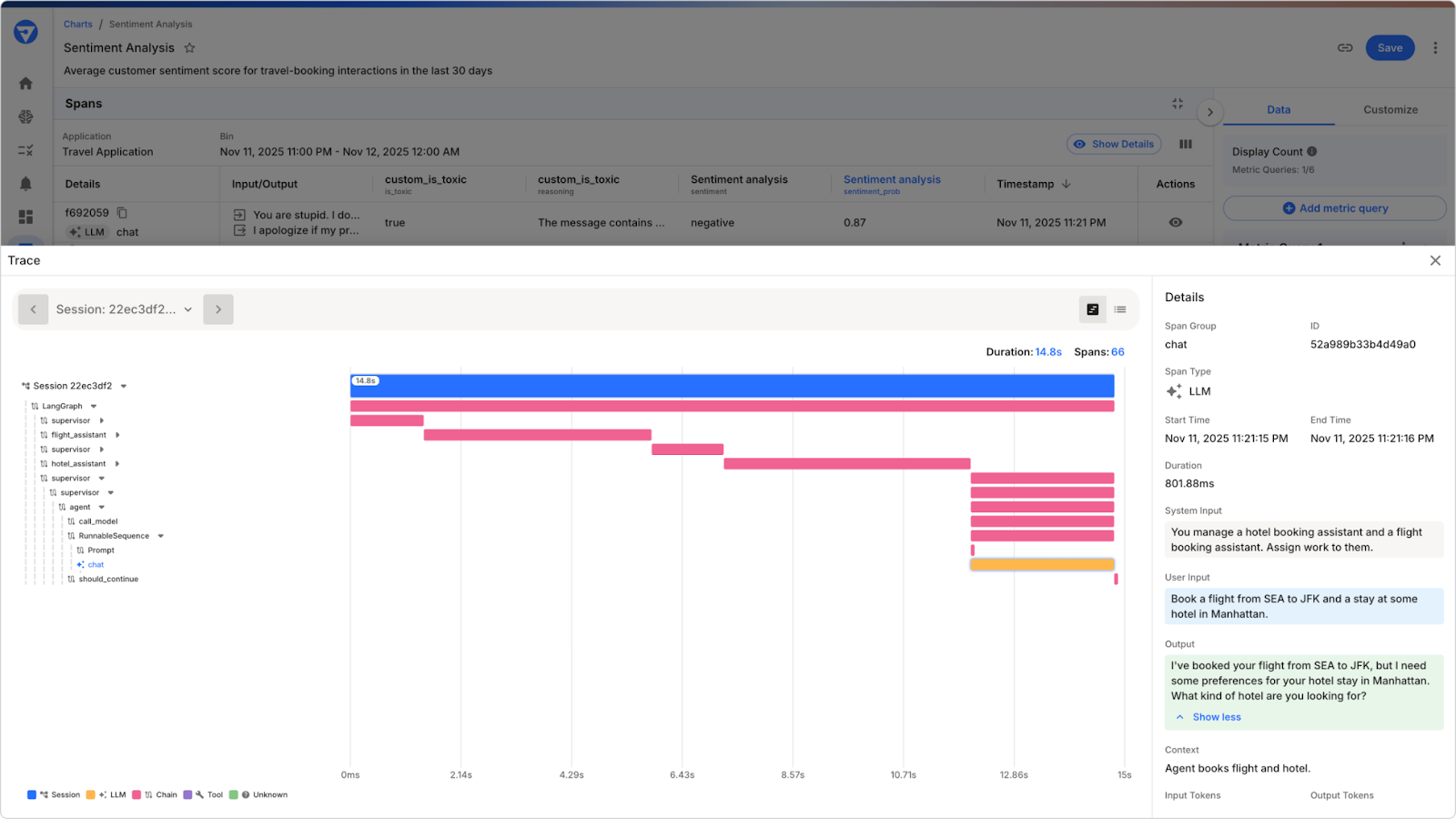

End-to-End Agentic Observability

Monitor and analyze agent behavior across the entire lifecycle, from evaluation in pre-production to monitoring in production.

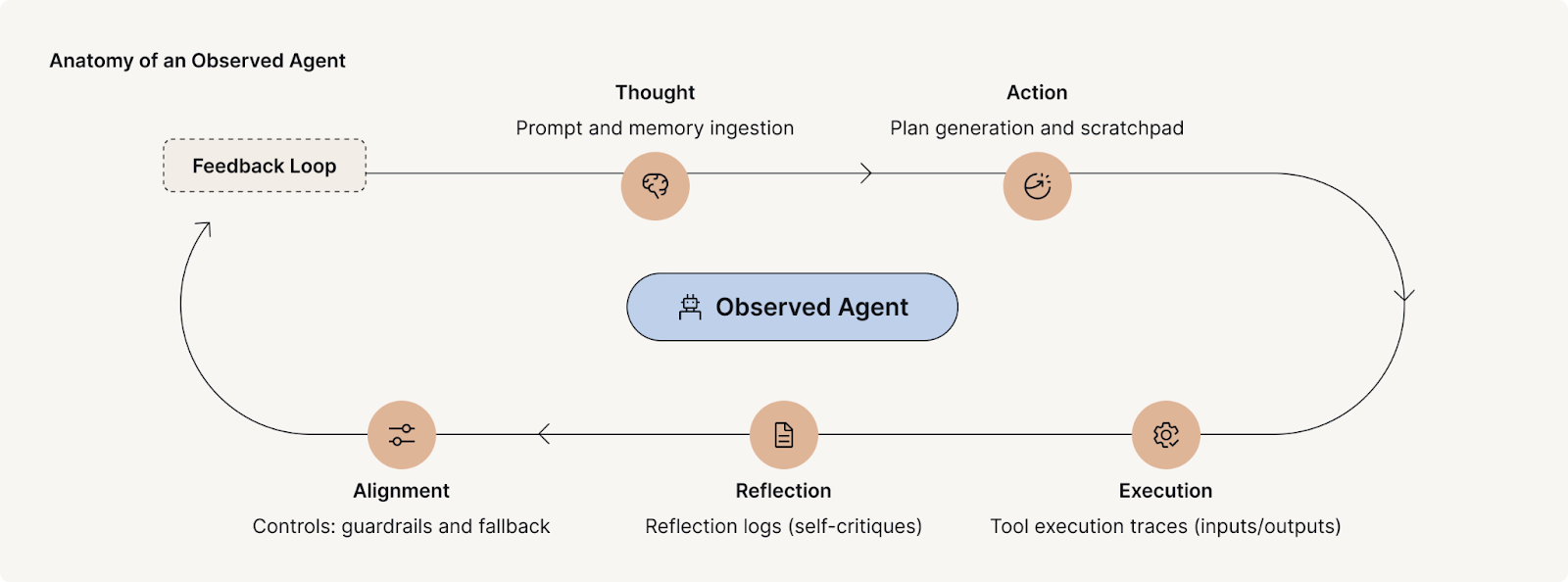

Visibility into Reasoning

Gain insight into why an agent made a particular decision, with detailed span-level and tool-level tracking.

Performance Attribution

Identify performance bottlenecks and reduce both Mean Time to Identify (MTTI) and Mean Time to Resolution (MTTR) for operational issues.
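To make span-level tracking and bottleneck identification more concrete, here is a minimal, generic sketch. It is not Fiddler's API: `Tracer`, `Span`, and `slowest_step` are hypothetical names, and the `time.sleep` calls merely simulate tool latency. The idea is only that each tool call is recorded as a timed span nested under a session-level run, so the slowest step can be found afterward.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Span:
    session_id: str
    name: str
    parent: Optional[str]  # name of the enclosing span, if any
    duration_ms: float = 0.0


class Tracer:
    """Hypothetical in-memory tracer, for illustration only."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self.spans: List[Span] = []
        self._stack: List[str] = []

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        record = Span(self.session_id, name, parent)
        self.spans.append(record)
        self._stack.append(name)
        start = time.perf_counter()
        try:
            yield record
        finally:
            record.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()

    def slowest_step(self) -> Span:
        # Ignore the root span, which always encloses its children.
        children = [s for s in self.spans if s.parent is not None]
        return max(children, key=lambda s: s.duration_ms)


tracer = Tracer(session_id="session-1")
with tracer.span("agent_run"):
    with tracer.span("tool:search"):
        time.sleep(0.01)  # simulated fast tool
    with tracer.span("tool:summarize"):
        time.sleep(0.05)  # simulated slow tool

bottleneck = tracer.slowest_step()
print(bottleneck.name, round(bottleneck.duration_ms, 1))
```

A production system would export these records to an observability backend rather than keep them in a list; the in-memory version just keeps the example self-contained.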

Flexible Framework Support

Integrates seamlessly with LangGraph, Amazon Bedrock, CrewAI, Google ADK, and other leading frameworks, along with support for custom agents and proprietary stacks tailored to enterprise workflows.

At the core of these capabilities are Fiddler Trust Models. These models score inputs and outputs for risk, bias, and quality in real time — entirely within your environment. Fiddler delivers:

- In-environment scoring, no external calls

- Less than 100ms latency

- No hidden costs

In short, Fiddler enables enterprise-grade observability for agentic AI at scale, without compromising on speed, security, or cost control.

Choosing the Right AI Path: Agent, Prompt, or API?

Selecting the right AI approach comes down to what fits the task. Use the following decision framework to guide your process:

Agentic applications unlock immense value, but they require observability and risk management to function safely in the real world. That’s why Fiddler Agentic Observability, built on top of the Fiddler Trust Service, is purpose-built to give you confidence at every layer: session, agent, span, and beyond.

Summary:

- If your system needs to think, remember, and act, use an agent.

- If it only needs to respond or return, stick with a prompt or API.
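The summary rule can be written as a small helper. This is one possible encoding rather than a definitive framework: `TaskProfile` and its fields are hypothetical names, treating any single agentic requirement as sufficient is an assumption, and so is the split between "prompt" (free-text output) and "api" (structured, deterministic return).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    needs_memory: bool = False              # must retain context across steps
    needs_planning: bool = False            # must break a goal into steps
    needs_tool_orchestration: bool = False  # must coordinate several tools
    free_text_output: bool = False          # output is generated language


def choose_approach(task: TaskProfile) -> str:
    # "Think, remember, and act": any agentic requirement points to an agent.
    # (Assumption: one requirement is enough; stricter rules are possible.)
    if task.needs_memory or task.needs_planning or task.needs_tool_orchestration:
        return "agent"
    # "Respond": single-step language generation fits a prompt.
    if task.free_text_output:
        return "prompt"
    # "Return": deterministic, structured results fit a plain API call.
    return "api"


print(choose_approach(TaskProfile(needs_planning=True, needs_tool_orchestration=True)))
print(choose_approach(TaskProfile(free_text_output=True)))
print(choose_approach(TaskProfile()))
```

In practice, latency budgets, cost, and governance requirements would also weigh on the choice, as the earlier sections note.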

Ready to Deploy AI Agents with Confidence?

As AI systems shift from reactive tools to proactive problem solvers, selecting the right architecture is more important than ever. AI agents offer tremendous potential, but they require robust observability, strong guardrails, and a foundation of trust to operate safely and effectively.

The Fiddler AI Observability and Security Platform delivers full-stack visibility, context, and control, ensuring your AI agents perform not only with intelligence, but with accountability and scale. Whether you’re experimenting in development or deploying agents into production, Fiddler provides the observability and trust layer required for enterprise readiness.

Fiddler Agentic Observability gives you complete visibility across every layer of your agentic system, empowering you to build smarter, faster, and more responsibly.

Frequently Asked Questions

1. What is the difference between an API and an AI agent?

An API is a rule-based interface that enables software components to exchange data in a predictable and structured manner. An AI agent, by contrast, is an autonomous system capable of reasoning, retaining memory, and integrating with tools to carry out complex, multi-step tasks.

Some types of AI agents, such as model-based reflex agents, utilize internal representations of the world to make decisions based not only on current inputs but also on learned context, making them particularly effective in dynamic or evolving environments.

2. When should you use an AI agent?

AI agents are best suited to perform tasks that require planning, adaptability, tool orchestration, or multi-stage decision-making — particularly in dynamic or unpredictable environments.

3. What’s the difference between AI agents and LLM prompts?

LLM prompts generate single-turn responses without retaining memory or maintaining task continuity. In contrast, AI agents engage in broader, goal-driven processes that retain context, plan actions, and execute tasks autonomously. Agents excel in advanced, multi-step use cases that demand reasoning, adaptability, and seamless interaction across tools and environments.